Paper:

Grid Map Correction for Fall Risk Alert System Using Smartphone

Daigo Katayama*, Kazuo Ishii*, Shinsuke Yasukawa*, Yuya Nishida*, Satoshi Nakadomari**, Koichi Wada**, Akane Befu**, and Chikako Yamada**

*Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

**NEXT VISION

Kobe Eye Center 2F, 2-1-8 Minatojima-Minamimachi, Chuo-ku, Kobe, Hyogo 650-0047, Japan

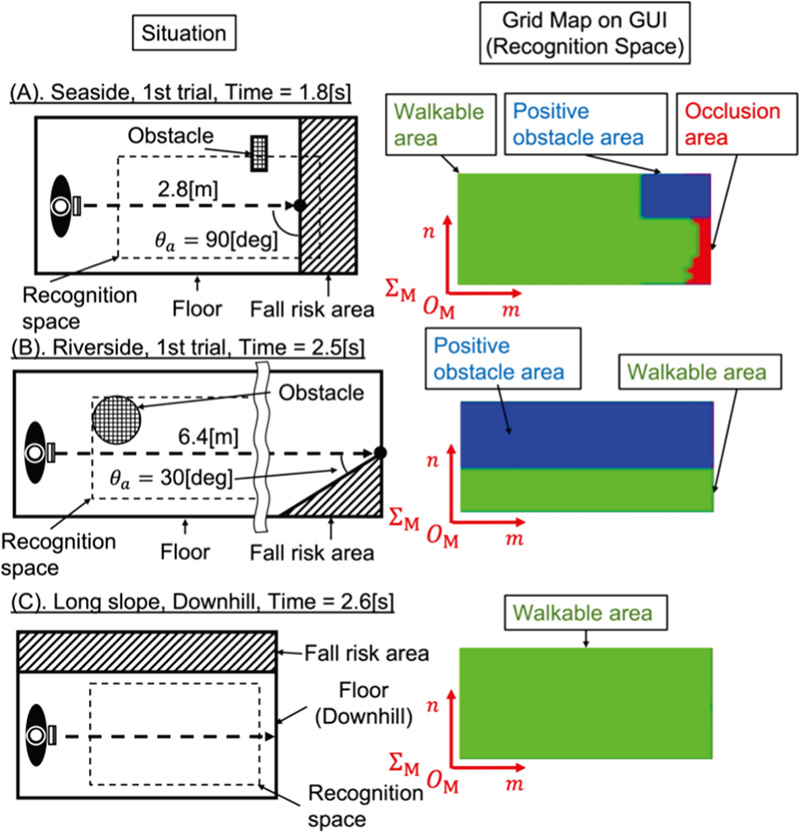

In this work, we implemented an electronic travel aid (ETA) as a smartphone application that alerts visually impaired users to fall risks. The application uses a grid map containing height information to detect negative obstacles, such as platform edges and stairs, as well as occlusion. It then estimates fall risk from the distance between the user and the edge of the detected area and from the area ratio. Here, we describe a grid map correction method that uses the surrounding conditions of each cell to avoid area misclassification. The smartphone application incorporating this correction method was verified in environments similar to station platforms, where we evaluated its usefulness, its robustness against environmental changes, and its stability as a smartphone application. The verification results showed that the correction method is useful in real environments and can be implemented as a smartphone application.

The grid map corrected by the proposed method
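The abstract does not give the correction rule itself, so the following is only a minimal sketch of one way a correction "based on the surrounding conditions of each cell" could work, together with the two fall-risk inputs it mentions (distance to the area's edge and area ratio). The cell labels, the neighbor-voting rule, the `min_votes` threshold, the `cell_size_m` value, and both function names are assumptions made for illustration and are not taken from the paper.

```python
from collections import Counter
import math

# Hypothetical cell labels; the classes used in the paper may differ.
FLOOR, NEGATIVE, OCCLUDED = 0, 1, 2


def correct_grid(grid, min_votes=6):
    """Relabel a cell when at least `min_votes` of its 8 neighbors share another label.

    Assumed rule for illustration only. Border cells have at most 5 neighbors,
    so with the default threshold they are never relabeled.
    """
    rows, cols = len(grid), len(grid[0])
    out = [row[:] for row in grid]  # read from the original, write to a copy
    for r in range(rows):
        for c in range(cols):
            neighbors = [
                grid[r + dr][c + dc]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
                and 0 <= r + dr < rows
                and 0 <= c + dc < cols
            ]
            label, votes = Counter(neighbors).most_common(1)[0]
            if label != grid[r][c] and votes >= min_votes:
                out[r][c] = label
    return out


def fall_risk_features(grid, user_rc, cell_size_m=0.1):
    """Return (distance in meters to the nearest NEGATIVE cell, fraction of NEGATIVE cells)."""
    ur, uc = user_rc
    negative = [
        (r, c)
        for r, row in enumerate(grid)
        for c, value in enumerate(row)
        if value == NEGATIVE
    ]
    if not negative:
        return math.inf, 0.0
    nearest = min(math.hypot(r - ur, c - uc) for r, c in negative) * cell_size_m
    return nearest, len(negative) / (rows_times_cols(grid))


def rows_times_cols(grid):
    """Total number of cells in a rectangular grid."""
    return len(grid) * len(grid[0])


# Usage: a platform-like map with a drop on the right and one spurious NEGATIVE cell.
raw = [
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 1, 0, 1, 1],  # the isolated 1 at column 1 is treated as a misclassification
    [0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1],
]
corrected = correct_grid(raw)
print(fall_risk_features(raw, (2, 0)))
print(fall_risk_features(corrected, (2, 0)))
```

In this toy map the isolated cell makes the nearest negative area appear one cell away from the user. After the neighbor vote removes it, the nearest edge is the real drop three cells away, which illustrates why a misclassified cell could trigger a false alert.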

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.