Paper:

Telerehabilitation System Based on OpenPose and 3D Reconstruction with Monocular Camera

Keisuke Osawa*, Yu You*, Yi Sun*, Tai-Qi Wang*, Shun Zhang*, Megumi Shimodozono**, and Eiichiro Tanaka*

*Graduate School of Information, Production and Systems, Waseda University

2-7 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0135, Japan

**Graduate School of Medical and Dental Sciences, Kagoshima University

8-35-1 Sakuragaoka, Kagoshima, Kagoshima 890-8544, Japan

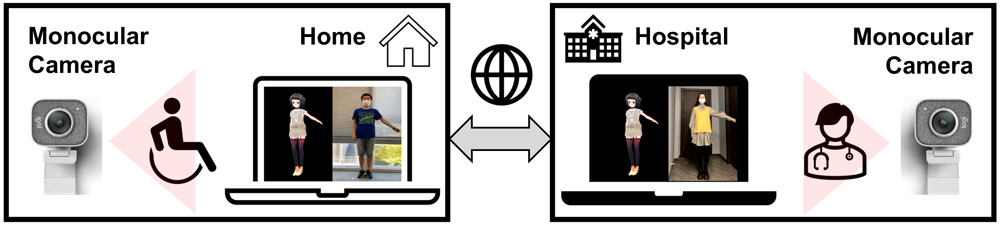

Owing to aging populations, the number of elderly people with limb dysfunction affecting their daily lives will continue to increase. These populations have a great need for rehabilitation training to restore limb function. However, the numbers of rehabilitation hospitals and doctors are limited. Moreover, people often cannot go to a hospital owing to external conditions (e.g., the impact of COVID-19). Thus, there is an urgent need for a telerehabilitation system that allows patients to train at home. The purpose of this study is to develop an easy-to-use system that allows target users to undergo rehabilitation training at home and to receive real-time guidance from doctors remotely. The proposed system needs only a monocular camera to capture 3D motions. First, the 2D key joints of the human body are detected; then, a simple baseline network is used to reconstruct the 3D key joints from the 2D key joints. The 2D detection has an average angle error of only 1.7% compared with a professional motion capture system. In addition, the 3D reconstruction has a mean per-joint position error of only 67.9 mm compared with the real coordinates. After acquiring the user's 3D motions, the system synchronizes them to a virtual human model in Unity, providing the user with a more intuitive and interactive experience. Many existing telerehabilitation systems require professional motion capture cameras and wearable equipment, and their training target is a single body part. In contrast, the proposed system is low-cost and easier to use: it requires only a monocular camera and a computer to achieve real-time, intuitive telerehabilitation, even though the training target is the entire body. Furthermore, the system provides a similarity evaluation of motions based on dynamic time warping, which can provide more accurate and direct feedback to users.
In addition, a series of evaluation experiments verify the system’s usability, convenience, feasibility, and accuracy, with the ultimate conclusion that the system can be used in practical rehabilitation applications.
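
The two quantitative ideas named in the abstract, mean per-joint position error and similarity based on dynamic time warping (DTW), can be illustrated with a minimal sketch. This is not the authors' implementation. The function names `mpjpe` and `dtw_distance`, the array shapes, the use of NumPy, and the choice of Euclidean distance as the per-frame cost are all assumptions made for illustration. The abstract does not state how the DTW cost is converted into a similarity score, so that step is omitted here.

```python
import numpy as np


def mpjpe(pred, target):
    """Mean per-joint position error (hypothetical helper).

    pred, target: arrays of shape (frames, joints, 3) in the same unit
    (e.g. mm). Returns the Euclidean distance averaged over all joints
    and frames.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.linalg.norm(pred - target, axis=-1).mean())


def dtw_distance(a, b):
    """Classic DTW alignment cost between two motion sequences.

    a: sequence of n frames, b: sequence of m frames; each frame is a
    feature vector (e.g. joint angles). The per-frame cost is assumed
    to be the Euclidean distance between feature vectors.
    """
    a = np.asarray(a, dtype=float).reshape(len(a), -1)
    b = np.asarray(b, dtype=float).reshape(len(b), -1)
    n, m = len(a), len(b)
    # cost[i, j] = minimal accumulated cost aligning a[:i] with b[:j]
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j],      # a advances, b repeats
                                 cost[i, j - 1],      # b advances, a repeats
                                 cost[i - 1, j - 1])  # both advance
    return float(cost[n, m])
```

A lower DTW cost means the user's motion follows the reference more closely, even when the two are performed at different speeds. For example, a reference sequence and a copy of it with one frame held twice align at zero cost.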

Overview image of telerehabilitation system based on OpenPose

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
