Paper:

Welding Line Detection Using Point Clouds from Optimal Shooting Position

Tomohito Takubo, Erika Miyake, Atsushi Ueno, and Masaki Kubo

Osaka Metropolitan University

3-3-138 Sugimoto, Sumiyoshi-ku, Osaka 558-8585, Japan

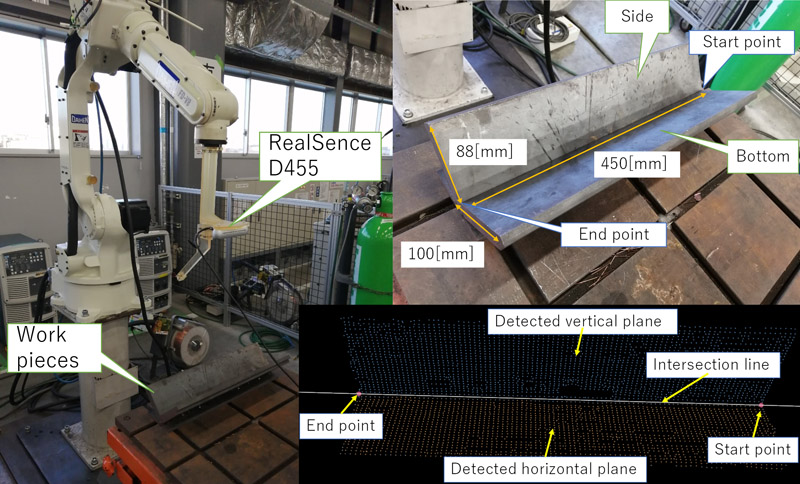

A method for welding line detection using point cloud data is proposed to automate welding operations in combination with a contact sensor. The proposed system targets fillet welds, in which the joint line between two metal plates attached perpendicularly to each other is welded. In the proposed method, the position and orientation of the two flat plates are first detected from a single viewpoint as a rough measurement. Each plate is then measured in detail from its own optimal shooting position to detect the weld line precisely. When a flat plate is measured from an oblique angle, the 3D point cloud obtained by a depth camera contains measurement errors. For example, a point cloud of a plane may have a wavy shape or voids owing to light reflection. When the plane is captured from directly in front, however, the point cloud contains fewer errors. Using these characteristics, a two-step measurement algorithm for determining weld lines is proposed. A comparison of the rough and precise measurements shows that the precise measurement improves the weld line detection results by 5 mm. Furthermore, the average measurement error was less than 2.5 mm, which makes it possible to narrow the search range of the contact sensor for welding automation.
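The abstract does not spell out the geometry of the two-step measurement, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes that (1) each plate is modeled as a plane fitted by RANSAC, (2) the weld line of the fillet joint is taken as the intersection line of the two plate planes, and (3) the "optimal shooting position" for a plate is approximated as a camera placed on the plate normal through the centroid of its inlier points, at a hypothetical `standoff` distance, looking straight at the plate. All function names, the inlier `threshold`, and `standoff` are illustrative; coordinates are assumed to be in millimetres.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Vec = Tuple[float, float, float]
Plane = Tuple[Vec, float]  # (unit normal n, offset d) with n . p + d = 0
Fit = Tuple[Plane, List[Vec]]  # fitted plane and its inlier points


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_from_points(p1: Vec, p2: Vec, p3: Vec) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    n = cross(sub(p2, p1), sub(p3, p1))
    length = math.sqrt(dot(n, n))
    if length < 1e-9:
        return None
    n = scale(n, 1.0 / length)
    return n, -dot(n, p1)


def ransac_plane(points: Sequence[Vec], threshold: float,
                 iterations: int = 200, seed: int = 0) -> Fit:
    """RANSAC: keep the sampled plane with the most points within `threshold`."""
    rng = random.Random(seed)
    pool = list(points)
    best: Optional[Plane] = None
    best_inliers: List[Vec] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(pool, 3))
        if plane is None:
            continue  # degenerate sample, draw again
        n, d = plane
        inliers = [p for p in pool if abs(dot(n, p) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best, best_inliers = plane, inliers
    if best is None:
        raise ValueError("no non-degenerate plane found")
    return best, best_inliers


def detect_two_plates(cloud: Sequence[Vec], threshold: float) -> Tuple[Fit, Fit]:
    """Fit the dominant plate, remove its inliers, then fit the second plate."""
    first = ransac_plane(cloud, threshold)
    used = set(first[1])
    rest = [p for p in cloud if p not in used]
    return first, ransac_plane(rest, threshold, seed=1)


def plane_intersection(a: Plane, b: Plane) -> Tuple[Vec, Vec]:
    """Intersection line as (point closest to the origin, unit direction)."""
    (n1, d1), (n2, d2) = a, b
    u = cross(n1, n2)
    uu = dot(u, u)
    if uu < 1e-12:
        raise ValueError("planes are (nearly) parallel")
    h1, h2 = -d1, -d2  # planes written as n1 . x = h1 and n2 . x = h2
    point = scale(add(scale(cross(n2, u), h1), scale(cross(u, n1), h2)), 1.0 / uu)
    return point, scale(u, 1.0 / math.sqrt(uu))


def frontal_viewpoint(plane: Plane, inliers: Sequence[Vec],
                      rough_camera: Vec, standoff: float) -> Tuple[Vec, Vec]:
    """Hypothetical frontal pose: on the plate normal through its centroid."""
    n, _ = plane
    k = len(inliers)
    c = (sum(p[0] for p in inliers) / k,
         sum(p[1] for p in inliers) / k,
         sum(p[2] for p in inliers) / k)
    if dot(n, sub(rough_camera, c)) < 0:
        n = scale(n, -1.0)  # point the normal toward the side the rough view saw
    return add(c, scale(n, standoff)), scale(n, -1.0)  # (position, view direction)
```

In the two-step flow described in the abstract, `detect_two_plates` would run on the rough single-view cloud, `frontal_viewpoint` would give one frontal pose per plate, and each plate plane would then be refitted from its frontal cloud before `plane_intersection` is evaluated again. Pure Python is used only to keep the sketch self-contained; for real sensor data, a point cloud library such as PCL provides RANSAC plane segmentation (`pcl::SACSegmentation` with `pcl::SACMODEL_PLANE`).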

Weld line detection for fillet welding using point cloud data

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.