Paper:

Azimuth Angle Detection Method Combining AKAZE Features and Optical Flow for Measuring Movement Accuracy

Kazuteru Tobita and Kazuhiro Mima

Shizuoka Institute of Science and Technology

2200-2 Toyosawa, Fukuroishi, Shizuoka 437-8555, Japan

In recent years, autonomous robots have come into practical use outdoors for transportation, delivery, and other applications. To ensure safety and reliability, the accuracy of a robot’s movement must be measured and evaluated from the outside. In this study, we develop a device that externally measures the coordinates and azimuth angle of a robot by combining image processing and distance measurement. We propose an azimuth angle detection method that combines coarse-angle detection using AKAZE features with small-angle detection using optical flow. Experimental results show that the azimuth angle can be detected with a standard deviation of σ=0.70° indoors and σ=0.99° outdoors.
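The coarse-plus-fine idea can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not say how the two estimates are combined, so the sketch assumes that the coarse angle is the rotation between a reference image and a keyframe (from matched feature points, e.g. AKAZE matches) and that the fine angle is the residual rotation between the keyframe and the current frame (from optical-flow point tracks), with the two simply added. The function names `rotate`, `rotation_deg`, and `combined_azimuth_deg` are hypothetical, and synthetic point lists stand in for real feature matches and flow vectors.

```python
import math


def rotate(points, deg, center=(0.0, 0.0)):
    """Rotate 2D points by `deg` degrees about `center` (builds synthetic data)."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    cx, cy = center
    return [(cx + c * (x - cx) - s * (y - cy),
             cy + s * (x - cx) + c * (y - cy)) for x, y in points]


def rotation_deg(src, dst):
    """Least-squares rotation angle (degrees) that takes `src` onto `dst`.

    Both point sets are centred on their centroids first, so a pure
    translation between them does not affect the result. In image
    coordinates (y pointing down) a positive angle appears clockwise.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(src)
    sx = sum(x for x, _ in src) / n
    sy = sum(y for _, y in src) / n
    dx = sum(x for x, _ in dst) / n
    dy = sum(y for _, y in dst) / n
    dot = cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - sx, ys - sy
        bx, by = xd - dx, yd - dy
        dot += ax * bx + ay * by      # proportional to cos(angle)
        cross += ax * by - ay * bx    # proportional to sin(angle)
    return math.degrees(math.atan2(cross, dot))


def combined_azimuth_deg(ref_pts, keyframe_pts, flow_prev, flow_next):
    """Assumed combination: coarse angle (feature matches) + fine angle (flow)."""
    coarse = rotation_deg(ref_pts, keyframe_pts)   # reference -> keyframe
    fine = rotation_deg(flow_prev, flow_next)      # keyframe -> current frame
    return coarse + fine


# Synthetic example: a 30 degree coarse turn followed by a 0.4 degree fine turn.
reference = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)]
keyframe = rotate(reference, 30.0)
current = rotate(keyframe, 0.4)
angle = combined_azimuth_deg(reference, keyframe, keyframe, current)
print(f"estimated azimuth: {angle:.2f} deg")
```

With noise-free synthetic points the estimate should come out at approximately 30.4°. With real images, mismatched features and flow outliers would need to be rejected before the angle is computed, which this sketch does not attempt.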

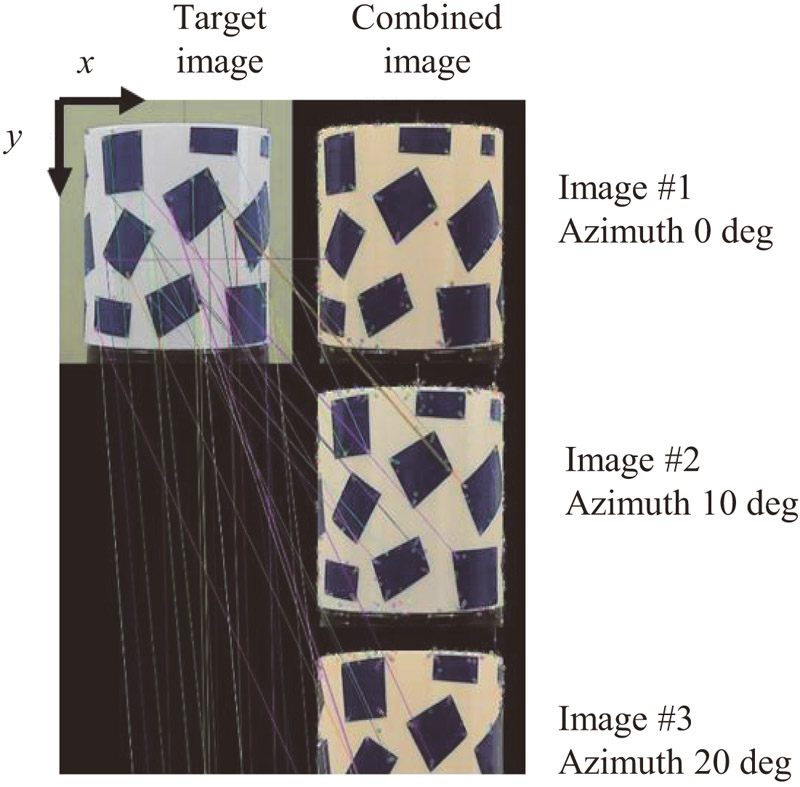

Example of tracking based on AKAZE features

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.