Paper:

Gesture Interface and Transfer Method for AMR by Using Recognition of Pointing Direction and Object Recognition

Takahiro Ikeda, Naoki Noda, Satoshi Ueki, and Hironao Yamada

Faculty of Engineering, Gifu University

1-1 Yanagido, Gifu 501-1193, Japan

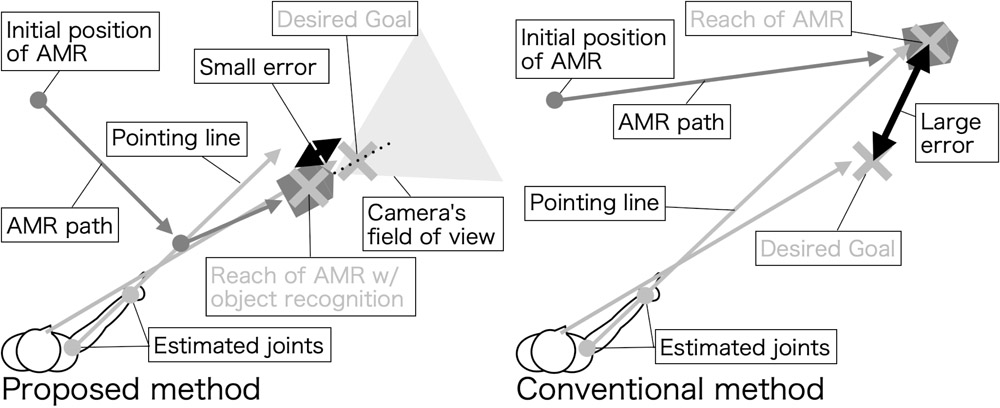

This paper describes a gesture interface for a factory transfer robot. Instead of estimating the pointed-at location, as conventional pointing gesture estimation does, the proposed interface recognizes only the pointing direction. Once the autonomous mobile robot (AMR) recognizes the pointing direction, it performs position control based on object recognition. The AMR travels along a path designed so that its camera detects the object used as the reference for position control. Experiments confirmed that the position and angular errors of the AMR controlled with the interface were 0.058 m and 4.7°, respectively, averaged over five subjects and two conditions, which is sufficiently accurate for transportation. A questionnaire showed that the interface was more user-friendly than manual operation with a commercially available controller.

AMR control using gesture interface

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
