Development Report:

Method to Achieve High Speed and High Recognition Rate of Goal from Long Distance for CanSat

Miho Akiyama*, Hiroshi Ninomiya**, and Takuya Saito***

*Graduate School of Electrical and Information Engineering, Shonan Institute of Technology

1-1-25 Tsujido-nishikaigan, Fujisawa, Kanagawa 251-8511, Japan

**Department of Information Science, Faculty of Engineering, Shonan Institute of Technology

1-1-25 Tsujido-nishikaigan, Fujisawa, Kanagawa 251-8511, Japan

***Department of Informatics, Faculty of Informatics, Tokyo University of Information Sciences

4-1 Onaridai, Wakaba-ku, Chiba-city, Chiba 265-8501, Japan

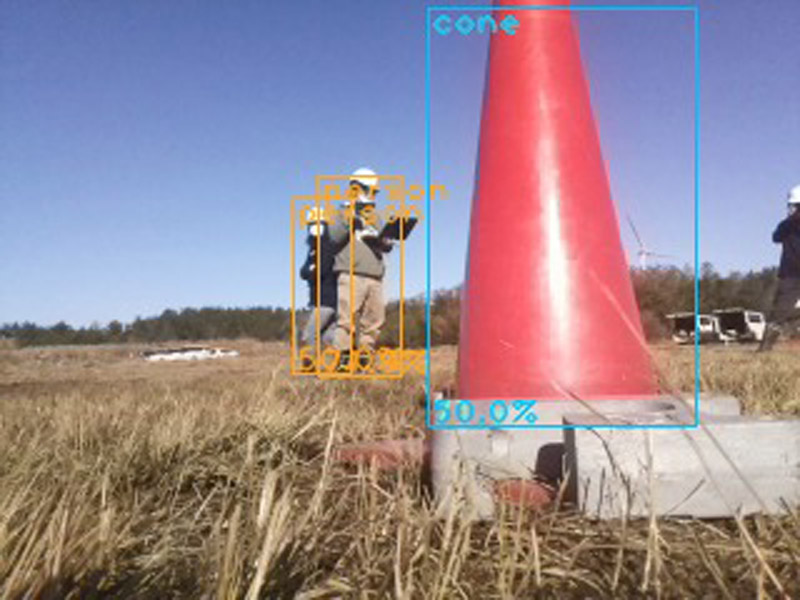

A structure is proposed for high-speed goal recognition by SSD MobileNet using the Coral USB Accelerator. Its goal recognition rate from a long distance was confirmed to be equal to or better than that of conventional methods, and two CanSat contests were won using this method. The aim of a CanSat competition is to guide the CanSat to a distance of 0 m from the goal. The CanSat is initially guided by GPS, but near the goal it must use an image recognition model to identify the goal. In the Tanegashima Rocket Contest 2018, zero-meter guidance to the goal was achieved with a method that identified the goal by its color in the camera image; however, this method was vulnerable to changes in lighting conditions, such as changes in the weather. Therefore, deep learning was applied to CanSat goal recognition for the first time at ARLISS 2019, and the zero-meter goal was achieved, winning the competition. However, because the computation ran on the CPU, each recognition took more than 10 s, so reaching the goal was time-consuming. This conventional method locates the goal by image classification: it prepares multiple regions of interest (ROIs) in the image and repeats the recognition for each ROI. The algorithm is complex, and recognizing a goal at a long distance requires even more ROIs, which increases the computation time. Object detection is an effective way to find the location of a target object in an image. However, even with the lightweight SSD MobileNet V1 and V2 models and a hardware accelerator, the computation time may not be short enough, because the onboard computer is a Raspberry Pi Zero, one of the least powerful Linux computers. In addition, if SSD MobileNet V1 and V2 do not achieve a sufficiently high recognition rate at long distances from the goal compared with the conventional method, it will be difficult to apply them to a CanSat.
To clarify these points, SSD MobileNet V1 and V2 were run on a Raspberry Pi Zero connected to a Coral USB Accelerator, and their recognition rate and recognition time were measured at long distances from the goal. The recognition rate was equivalent to or better than that of the conventional method, even at long distances from the goal, and the recognition time was sufficiently short (approximately 0.2 s). The effectiveness of the proposed method was evaluated at the Noshiro Space Event 2021 and the Asagiri CanSat Drop Test (ACTS) 2021, and the 0-m goal was achieved at both events.
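
The conventional classification-based localization described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the square ROI sizes, the 50 % stride, the `classify` callback (standing in for one CNN inference on a cropped ROI), and the 0.5 threshold are all hypothetical. It shows why recognizing a distant, small-looking goal is costly: each finer ROI size multiplies the number of classifier runs per frame.

```python
from typing import Callable, List, Optional, Tuple

# (x, y, width, height) in pixels
Box = Tuple[int, int, int, int]


def make_roi_grid(img_w: int, img_h: int, roi_sizes: List[int],
                  stride_ratio: float = 0.5) -> List[Box]:
    """Tile the image with square ROIs of each given size (hypothetical layout).

    Smaller ROI sizes are needed to catch a goal that appears small
    (i.e., far away), and they multiply the number of ROIs.
    """
    rois: List[Box] = []
    for size in roi_sizes:
        step = max(1, int(size * stride_ratio))
        for y in range(0, img_h - size + 1, step):
            for x in range(0, img_w - size + 1, step):
                rois.append((x, y, size, size))
    return rois


def locate_goal_by_classification(rois: List[Box],
                                  classify: Callable[[Box], float],
                                  threshold: float = 0.5) -> Optional[Box]:
    """Classify every ROI and return the one with the highest goal probability.

    `classify` is a placeholder for one full CNN inference on the cropped ROI,
    so the cost grows linearly with len(rois).
    """
    best_box: Optional[Box] = None
    best_prob = -1.0
    for box in rois:
        prob = classify(box)
        if prob > best_prob:
            best_box, best_prob = box, prob
    # Report no goal if even the best ROI is below the (assumed) threshold.
    return best_box if best_prob >= threshold else None
```

With a 320 × 240 frame, `make_roi_grid(320, 240, [240, 120])` yields 13 ROIs, while adding a finer 60-pixel scale raises the count to 76, i.e., 76 classifier runs per frame on the CPU.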

Goal recognition with SSD MobileNet using accelerator
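
By contrast, an object detector such as SSD MobileNet returns class labels, confidence scores, and bounding boxes for the whole frame in a single inference. The hypothetical sketch below shows one way such output could be reduced to a steering cue; the `Detection` record, the `"goal"` label, the 0.5 score threshold, and the normalized horizontal offset are illustrative assumptions, and the raw output of the Coral-accelerated model is assumed to have already been converted into `Detection` objects.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    label: str    # class name from the detector (hypothetical label set)
    score: float  # confidence in [0, 1]
    xmin: float   # bounding-box corners in pixels
    ymin: float
    xmax: float
    ymax: float


def pick_goal(dets: List[Detection], goal_label: str = "goal",
              min_score: float = 0.5) -> Optional[Detection]:
    """Return the most confident goal detection at or above min_score, or None."""
    candidates = [d for d in dets if d.label == goal_label and d.score >= min_score]
    return max(candidates, key=lambda d: d.score, default=None)


def horizontal_offset(det: Detection, img_w: int) -> float:
    """Offset of the box center from the image center, normalized to [-1, 1].

    Negative means the goal is left of center; positive means right.
    """
    cx = (det.xmin + det.xmax) / 2.0
    return (cx - img_w / 2.0) / (img_w / 2.0)
```

A rover could, for example, turn toward the sign of the offset and drive straight when it is near zero; the control law actually used on the CanSat is not described here.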

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
