Paper:

Projection Mapping-Based Interactive Gimmick Picture Book with Visual Illusion Effects

Sayaka Toda and Hiromitsu Fujii

Chiba Institute of Technology

2-17-1 Tsudanuma, Narashino, Chiba 275-0016, Japan

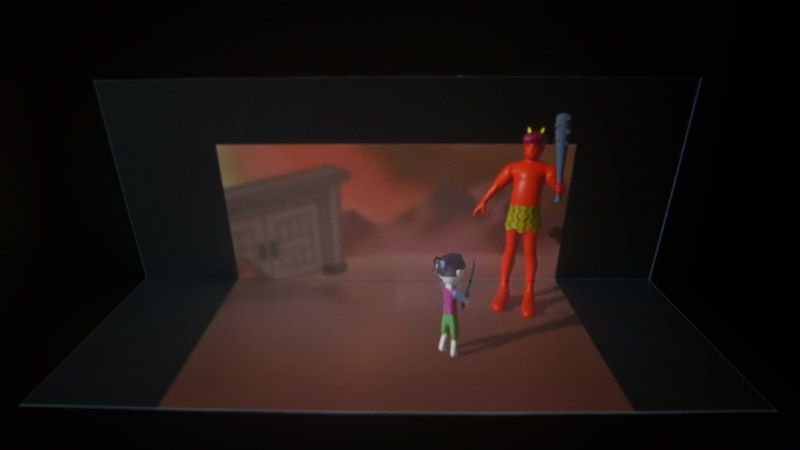

In this study, we propose an electronic gimmick picture book system based on projection mapping for early childhood education in ordinary households. The system electronically reproduces the visual effects of pop-up gimmicks in books by combining projection mapping, three-dimensional expression, and visual illusion effects. In addition, the proposed projector-camera system provides children with effective interactive experiences, which are expected to have positive effects on their early childhood education. The performance of the proposed system was validated through projection experiments.

Gimmick picture book by projection mapping
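The abstract does not describe how the projected image is registered to the book. As an illustration only, the following minimal sketch shows one common geometric step in projection mapping onto a flat surface: estimating a planar homography that maps content coordinates on a page to projector pixels. It assumes a flat page and four known corner correspondences; the function names and the corner values are hypothetical, and this is not presented as the authors' method.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def estimate_homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """Estimate the 3x3 homography (with h33 fixed to 1) from four point pairs."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are required")
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def warp_point(h: List[List[float]], p: Point) -> Point:
    """Apply the homography to one point (perspective division included)."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


if __name__ == "__main__":
    # Hypothetical values: unit-square page content and its corners in projector pixels.
    page = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    projector = [(120.0, 80.0), (520.0, 100.0), (480.0, 400.0), (100.0, 380.0)]
    H = estimate_homography(page, projector)
    print(warp_point(H, (0.5, 0.5)))  # projector pixel for the page centre
```

In a projector-camera setup, such correspondences would normally come from a calibration step or from detected markers rather than hand-entered values; a non-planar surface such as a curved or partially opened page would need a richer model than a single homography.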

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
