Paper:

Basic Experiments Toward Mixed Reality Dynamic Navigation for Laparoscopic Surgery

Xiaoshuai Chen*1, Daisuke Sakai*1, Hiroaki Fukuoka*1, Ryosuke Shirai*2, Koki Ebina*2, Sayaka Shibuya*2, Kazuya Sase*3, Teppei Tsujita*4, Takashige Abe*5, Kazuhiko Oka*1, and Atsushi Konno*2

*1Graduate School of Science and Technology, Hirosaki University

3 Bunkyo-cho, Hirosaki, Aomori 036-8561, Japan

*2Graduate School of Information Science and Technology, Hokkaido University

Kita 14, Nishi 9, Kita-ku, Sapporo, Hokkaido 060-0814, Japan

*3Faculty of Engineering, Tohoku Gakuin University

1-13-1 Chuo, Tagajo, Miyagi 980-8511, Japan

*4Department of Mechanical Engineering, National Defense Academy of Japan

1-10-20 Hashirimizu, Yokosuka, Kanagawa 239-8686, Japan

*5Graduate School of Medicine, Hokkaido University

Kita 15, Nishi 7, Kita-ku, Sapporo, Hokkaido 060-8638, Japan

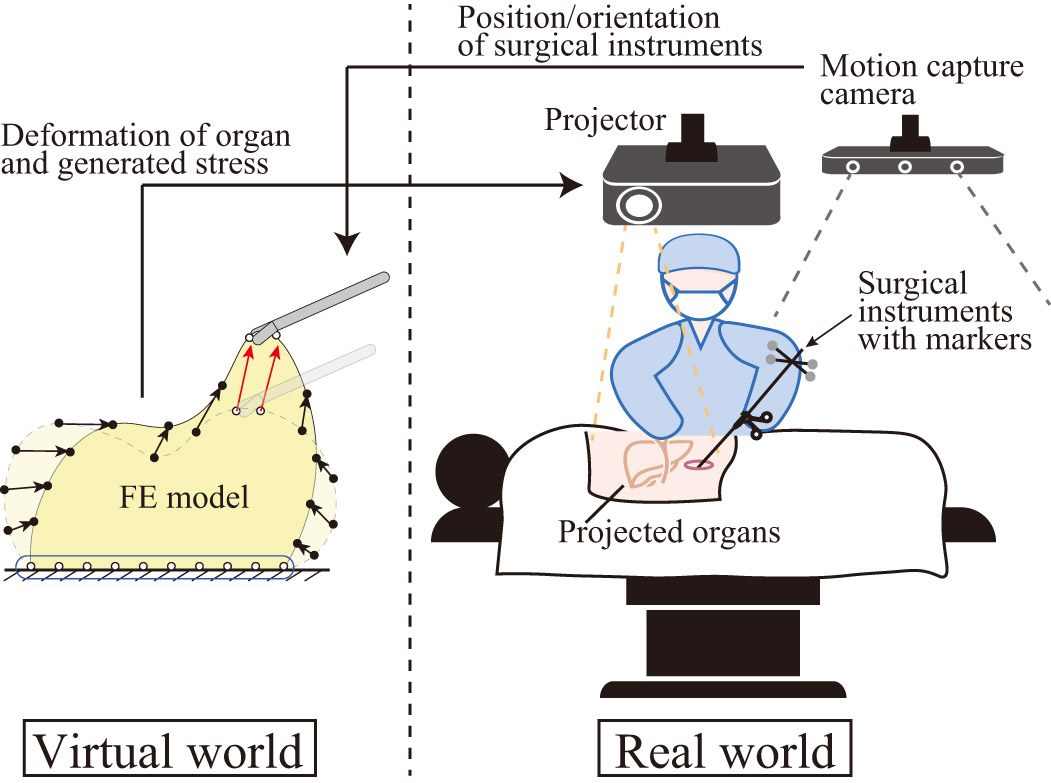

Laparoscopic surgery is a minimally invasive procedure that is performed by viewing endoscopic camera images. However, the limited field of view of endoscopic cameras makes laparoscopic surgery difficult. To provide more visual information during laparoscopic surgeries, augmented reality (AR) surgical navigation systems have been developed to visualize the positional relationship between the surgical field and organs based on preoperative medical images of a patient. However, since earlier studies used preoperative medical images, the navigation became inaccurate as the surgery progressed because the organs were displaced and deformed during surgery. To solve this problem, we propose a mixed reality (MR) surgery navigation system in which surgical instruments are tracked by a motion capture (Mocap) system; we also evaluated the contact between the instruments and organs and simulated and visualized the deformation of the organ caused by the contact. This paper describes a method for the numerical calculation of the deformation of a soft body. Then, the basic technology of MR and projection mapping is presented for MR surgical navigation. The accuracy of the simulated and visualized deformations is evaluated through basic experiments using a soft rectangular cuboid object.

Concept of the proposed MR navigation system
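The abstract does not state which deformation model or integration scheme is used, so the following is only a minimal, hypothetical sketch of how a tracked instrument tip could drive a soft-body simulation. It assumes a linear 1D spring chain standing in for one vertical column of the soft cuboid (node 0 fixed to the table, the last node on the contact surface), Newmark-beta time integration with the average-acceleration parameters (beta = 1/4, gamma = 1/2), and a contact model in which the rigid, Mocap-tracked tip simply prescribes the displacement of the surface node whenever it is at or below that surface. The names `chain_matrices`, `simulate_press` and `tip_depth` and all parameter values (SI units) are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def chain_matrices(n_nodes, k, m, c):
    """Lumped mass, mass-proportional damping and stiffness matrices of a 1D spring chain."""
    K = np.zeros((n_nodes, n_nodes))
    for e in range(n_nodes - 1):  # spring e joins nodes e and e + 1
        K[e:e + 2, e:e + 2] += k * np.array([[1.0, -1.0], [-1.0, 1.0]])
    M = m * np.eye(n_nodes)
    C = c * np.eye(n_nodes)
    return M, C, K


def simulate_press(tip_depth, n_nodes=5, k=100.0, m=0.01, c=0.5,
                   dt=1e-3, steps=2000, beta=0.25, gamma=0.5):
    """Newmark-beta simulation of a chain pressed at its top node by a tracked tip.

    Displacements are measured downward (compression positive).
    tip_depth(t) returns the tip position below the undeformed surface;
    negative values mean the tip is still above the surface.
    """
    M, C, K = chain_matrices(n_nodes, k, m, c)
    # Standard Newmark integration constants.
    a0, a1 = 1.0 / (beta * dt**2), gamma / (beta * dt)
    a2, a3 = 1.0 / (beta * dt), 1.0 / (2.0 * beta) - 1.0
    a4, a5 = gamma / beta - 1.0, dt * (gamma / (2.0 * beta) - 1.0)
    K_eff = K + a0 * M + a1 * C  # constant because the model is linear
    u, v, a = (np.zeros(n_nodes) for _ in range(3))
    top = n_nodes - 1
    for step in range(1, steps + 1):
        tip = tip_depth(step * dt)
        # Contact check: the tip touches the surface if it is at or below it.
        in_contact = tip >= u[top]
        fixed = [0, top] if in_contact else [0]
        free = [i for i in range(n_nodes) if i not in fixed]
        u_new = np.zeros(n_nodes)
        if in_contact:
            u_new[top] = tip  # the rigid tip dictates the surface displacement
        # Known terms of M a + C v + K u = 0 written in terms of u_new.
        rhs = M @ (a0 * u + a2 * v + a3 * a) + C @ (a1 * u + a4 * v + a5 * a)
        rhs_f = rhs[free] - K_eff[np.ix_(free, fixed)] @ u_new[fixed]
        u_new[free] = np.linalg.solve(K_eff[np.ix_(free, free)], rhs_f)
        # Newmark update of acceleration and velocity for every node.
        a_new = a0 * (u_new - u) - a2 * v - a3 * a
        v = v + dt * ((1.0 - gamma) * a + gamma * a_new)
        u, a = u_new, a_new
    return u


if __name__ == "__main__":
    depth = 0.01  # final indentation of 10 mm
    # Tip starts 2 mm above the surface and descends at 20 mm/s.
    u = simulate_press(lambda t: min(depth, -0.002 + 0.02 * t))
    print(np.round(u * 1000.0, 3))  # nodal displacements in mm
```

Once the tip stops and the damped transient dies out, the chain should settle into a linear compression profile between the fixed base and the indented surface, which is the static solution of this toy model. Prescribing the surface displacement, rather than using a penalty force, keeps the sketch short and matches the assumption that the instrument is rigid and position-tracked; a real organ model would use a 3D mesh and a more complete contact formulation.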


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
