Paper:

Clear Fundus Images Through High-Speed Tracking Using Glare-Free IR Color Technology

Motoshi Sobue*1, Hirokazu Takata*2, Hironari Takehara*1, Makito Haruta*1, Hiroyuki Tashiro*3, Kiyotaka Sasagawa*1, Ryo Kawasaki*4, and Jun Ohta*1

*1Division of Materials Science, Nara Institute of Science and Technology

8916-5 Takayama, Ikoma, Nara 630-0192, Japan

*2TakumiVision Co. Ltd.

Kotani Building 3F, 686-3 Ebisuno-cho, Shimokyo-ku, Kyoto 600-8310, Japan

*3Faculty of Medical Sciences, Kyushu University

3-1-1 Maidashi, Higashi-ku, Fukuoka 812-8582, Japan

*4Graduate School of Medicine, Department of Vision Informatics, Osaka University

2-2 Yamadaoka, Suita, Osaka 565-0871, Japan

Fundus images contain extensive health information. However, patients can hardly obtain fundus images by themselves. Although glare-free infrared (IR) imaging makes fundus images easy to acquire, the images are monochromatic and are difficult to process in real time because of high-speed involuntary fixational eye movements and in vivo blurring. We therefore propose applying our IR color technology and obtaining clear fundus images by tracking involuntary fixational eye movements at high speed and removing in vivo blur by deconvolution. We tested whether the proposed camera system is applicable in medical practice and suitable for medical examination, and verified that the IR color fundus camera system could detect ophthalmological and lifestyle-related diseases.

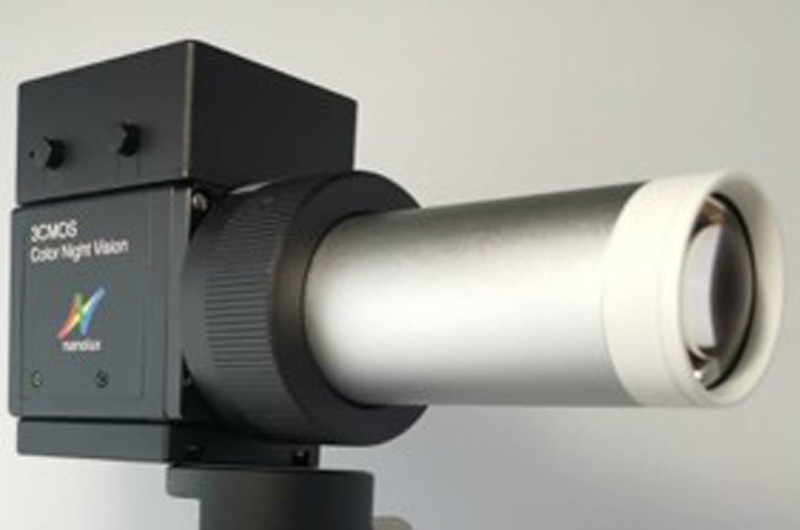

Glare-free IR color fundus camera
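
The abstract names deconvolution as the means of removing in vivo blur but does not state the algorithm or the blur model. As an illustration only, the following Python sketch applies Richardson-Lucy iteration to a synthetic 1-D intensity profile (a dark vessel on a bright background). The Gaussian point-spread function, the profile values, the iteration count, and all function names are assumptions made for this example; they do not describe the authors' implementation.

```python
import math


def gaussian_psf(radius, sigma):
    """Return a normalized 1-D Gaussian kernel of length 2*radius + 1."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def convolve_same(signal, kernel):
    """Convolve with edge replication so the output has the input's length."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + radius - k, 0), n - 1)  # clamp index at the borders
            acc += w * signal[j]
        out.append(acc)
    return out


def richardson_lucy(observed, psf, iterations=50, eps=1e-12):
    """Iteratively estimate the unblurred signal from `observed` given `psf`."""
    estimate = list(observed)      # start from the blurred data
    psf_flipped = psf[::-1]        # mirrored kernel (equal to psf when symmetric)
    for _ in range(iterations):
        reblurred = convolve_same(estimate, psf)
        ratio = [o / max(b, eps) for o, b in zip(observed, reblurred)]
        correction = convolve_same(ratio, psf_flipped)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate


def squared_error(a, b):
    """Sum of squared differences between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Synthetic intensity profile across a dark vessel on a bright background.
sharp = [1.0] * 20 + [0.2] * 6 + [1.0] * 20
psf = gaussian_psf(radius=6, sigma=2.0)   # assumed blur, not a measured PSF
blurred = convolve_same(sharp, psf)
restored = richardson_lucy(blurred, psf, iterations=50)
```

Comparing `squared_error(restored, sharp)` with `squared_error(blurred, sharp)` shows how much of the synthetic blur the iteration recovers. A real system would need a measured or estimated point-spread function and 2-D images, and noise would limit the useful number of iterations.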

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.