Paper:

Evaluation of Perceptual Difference in Dynamic Projection Mapping with and without Movement of the Target Surface

Shunya Fukuda, Shingo Kagami, and Koichi Hashimoto

Graduate School of Information Sciences, Tohoku University

6-6-01 Aramaki Aza Aoba, Aoba-ku, Sendai 980-8579, Japan

High-frame-rate visual feedback has been proven effective for projection mapping onto quickly moving targets. However, the frame rates actually required have not been quantified. In particular, because human dynamic visual acuity generally declines as the velocity of a moving target increases, it may be argued that the tracking accuracy required for projection mapping onto moving targets can be lower than that required for fixed targets. To examine this frame rate requirement, this study compares two types of projection mapping systems whose relative movements are equivalent: projection mapping from a fixed projector/camera system onto a moving target, and projection mapping from a moving projector/camera system onto a fixed target. We examined the effect of target movement by having stationary observers view and evaluate both types of projection. Using the weighted up-down method, we measured the frame time that produces a just noticeable difference (JND) in the projection mapping. We found that when the visual feedback is generated at a frame time of 2 ms, the frame time that produces a JND is 3.72 ms on average for fixed targets and 3.94 ms on average for moving targets, a difference that is significant at the 1% level. Taking individual differences and experimental errors into account, this is not a very large difference. This suggests that projection mapping onto moving targets should achieve tracking accuracy as high as that of projection mapping onto fixed targets.
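As an illustration of the weighted up-down method mentioned above, the following is a minimal simulation sketch, not the experimental procedure used in this study. In this method the stimulus level is lowered by a small step after each "noticed" response and raised by a larger step otherwise, with the step ratio chosen so that the staircase settles at the level noticed with a target probability. The target probability of 0.75, the step size of 0.1 ms, the logistic observer model with its midpoint and spread, and the function names `step_sizes`, `detection_probability`, and `run_staircase` are all assumptions introduced for this example. The 2 ms floor reflects the feedback frame time stated above, on the further assumption that the tested frame time never drops below it.

```python
import math
import random


def step_sizes(target_p, step_down):
    """Return (step_up, step_down) for a weighted up-down staircase.

    At the convergence point the expected movement is zero:
        target_p * step_down == (1 - target_p) * step_up
    """
    if not 0.0 < target_p < 1.0:
        raise ValueError("target_p must lie strictly between 0 and 1")
    return step_down * target_p / (1.0 - target_p), step_down


def detection_probability(frame_time_ms, midpoint_ms=4.0, spread_ms=0.5):
    """Hypothetical logistic observer model (assumed, not from the paper)."""
    return 1.0 / (1.0 + math.exp(-(frame_time_ms - midpoint_ms) / spread_ms))


def run_staircase(target_p=0.75, step_down_ms=0.1, start_ms=8.0,
                  floor_ms=2.0, n_trials=2000, burn_in=500, seed=0):
    """Simulate the staircase and return the mean level after burn-in."""
    rng = random.Random(seed)
    step_up_ms, step_down_ms = step_sizes(target_p, step_down_ms)
    level = start_ms
    visited = []
    for trial in range(n_trials):
        # Simulated response: did the observer notice the difference?
        noticed = rng.random() < detection_probability(level)
        if trial >= burn_in:
            visited.append(level)
        if noticed:
            # Noticed: make the task harder with a small step down.
            level = max(floor_ms, level - step_down_ms)
        else:
            # Not noticed: make the task easier with a larger step up.
            level += step_up_ms
    return sum(visited) / len(visited)


if __name__ == "__main__":
    estimate = run_staircase()
    # Level that the simulated observer notices 75% of the time.
    expected = 4.0 + 0.5 * math.log(0.75 / 0.25)
    print(f"staircase estimate: {estimate:.2f} ms")
    print(f"simulated 75% point: {expected:.2f} ms")
```

With a target probability of 0.75, the upward step is three times the downward step, so the staircase drifts toward the frame time that the simulated observer notices in three out of four trials. Averaging the visited levels after a burn-in period is one simple way to read off the threshold; averaging the reversal points is a common alternative.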

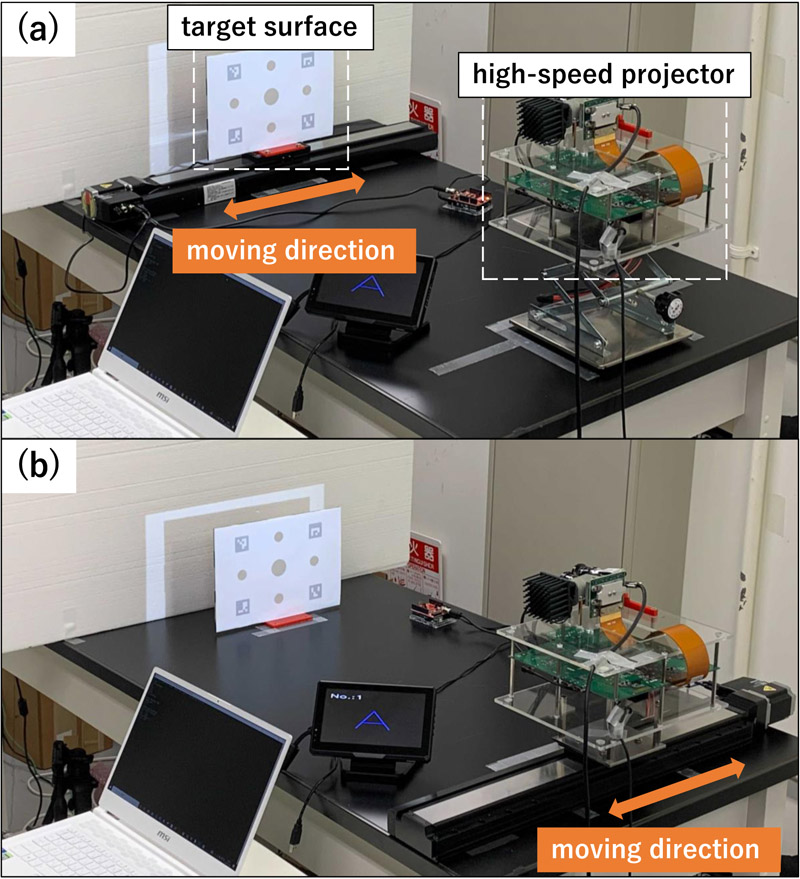

Setup with (a) moving surface and (b) moving projector

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
