Paper:

EmnDash: A Robust High-Speed Spatial Tracking System Using a Vector-Graphics Laser Display with M-Sequence Dashed Markers

Tomohiro Sueishi*, Ryota Nishizono*, and Masatoshi Ishikawa*,**

*The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

**Tokyo University of Science

1-3 Kagurazaka, Shinjuku-ku, Tokyo 162-8601, Japan

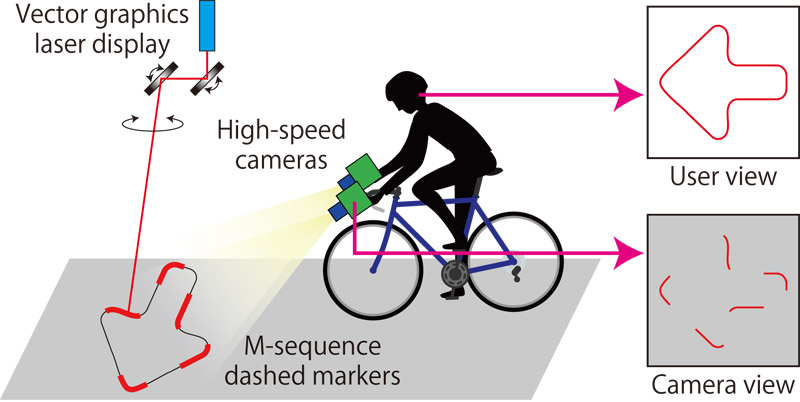

Camera-based wide-area self-posture estimation is an effective way to understand and learn about human motion, especially in sports. Rapid spatial tracking typically requires markers, but prepositioned markers demand extensive preparation in advance, and area-projected markers perform poorly in bright environments. In this study, we propose a system for spatial tracking and graphics display that uses vector-based laser projection embedded with M-sequence dashed-line markers. The proposed approach is fast, covers a wide area, and operates in bright environments. The system supports embedding and calibration of M-sequence codes in non-circular vector shapes, as well as rapid image-processing-based recognition. Static and dynamic tracking evaluations verified that the proposed approach achieves sufficient accuracy and speed. We also demonstrate a practical application.

A conceptual overview of the proposed system, EmnDash
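The core idea in the abstract is to encode position in a dashed line drawn along a projected vector path, so that a camera seeing only a short stretch of the line can tell where on the path it is looking. The minimal Python sketch below illustrates that idea under stated assumptions. It generates an M-sequence with a linear recurrence and assumes a degree-6 primitive polynomial x^6 + x^5 + 1. Each bit is mapped to a short dash (0) or a long dash (1), and dashes are placed by arc length so that they can follow a non-circular polyline. A window of six observed dashes is then decoded back to an absolute index. The polynomial, dash lengths, threshold, and all function names (`m_sequence`, `window_table`, `dash_intervals`, `point_at`, `decode`) are hypothetical. This section does not state the paper's actual encoding, calibration, or recognition pipeline.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def m_sequence(degree: int, taps: Sequence[int], seed: Sequence[int]) -> List[int]:
    """Return one period (2**degree - 1 bits) of a binary M-sequence.

    Uses the recurrence a[k + degree] = XOR of a[k + t] for t in taps; the
    result is maximal-length only if the matching polynomial is primitive.
    """
    if len(seed) != degree or not any(seed):
        raise ValueError("seed needs `degree` bits and must not be all zero")
    period = (1 << degree) - 1
    a = list(seed)
    while len(a) < period:
        k = len(a) - degree
        bit = 0
        for t in taps:
            bit ^= a[k + t]
        a.append(bit)
    return a


def window_table(seq: Sequence[int], n: int) -> Dict[Tuple[int, ...], int]:
    """Map each cyclic window of n consecutive bits to its start index."""
    size = len(seq)
    table: Dict[Tuple[int, ...], int] = {}
    for i in range(size):
        window = tuple(seq[(i + j) % size] for j in range(n))
        if window in table:
            raise ValueError("windows are not unique; check the polynomial")
        table[window] = i
    return table


def dash_intervals(bits: Sequence[int], short: float, long_: float,
                   gap: float) -> List[Tuple[float, float]]:
    """Arc-length (start, end) of each dash: bit 1 -> long dash, 0 -> short."""
    s = 0.0
    out = []
    for b in bits:
        length = long_ if b else short
        out.append((s, s + length))
        s += length + gap
    return out


def point_at(polyline: Sequence[Point], s: float) -> Point:
    """Point at arc length s along an open polyline, clamped to its ends."""
    s = max(s, 0.0)
    for (x0, y0), (x1, y1) in zip(polyline, polyline[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if s <= seg:
            t = s / seg if seg > 0 else 0.0
            return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        s -= seg
    return tuple(polyline[-1])


def decode(dash_lengths: Sequence[float], threshold: float,
           table: Dict[Tuple[int, ...], int], n: int) -> Optional[int]:
    """Classify n observed dash lengths into bits and look up their index."""
    if len(dash_lengths) < n:
        return None
    bits = tuple(1 if d > threshold else 0 for d in dash_lengths[:n])
    return table.get(bits)


if __name__ == "__main__":
    seq = m_sequence(6, (0, 5), [1, 0, 0, 0, 0, 0])  # x^6 + x^5 + 1, period 63
    table = window_table(seq, 6)
    dashes = dash_intervals(seq, short=1.0, long_=2.0, gap=1.0)
    seen = [end - start for start, end in dashes[10:16]]  # camera sees 6 dashes
    print(decode(seen, threshold=1.5, table=table, n=6))  # 10
```

Every nonzero 6-bit window appears exactly once per period of a maximal-length sequence. Therefore, any six consecutive dashes fix the absolute position along the path. `point_at` then converts that arc-length position to coordinates on an arbitrary polyline, which is one simple way to handle non-circular shapes. A real system would also need to cope with perspective distortion and occlusion, which this sketch ignores.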

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.