Paper:

Multiple High-Speed Vision for Identical Objects Tracking

Masahiro Hirano*, Keigo Iwakuma**, and Yuji Yamakawa***

*Institute of Industrial Science, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

**GA technologies

3-2-1 Roppongi, Minato-ku, Tokyo 106-6290, Japan

***Interfaculty Initiative in Information Studies, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

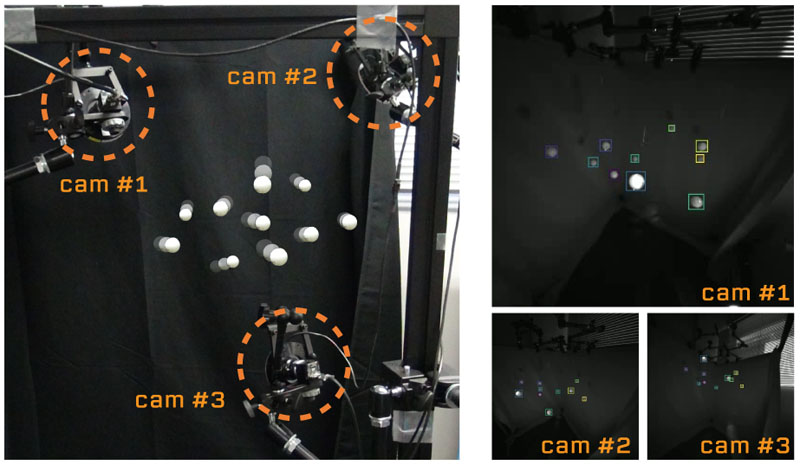

In multi-object tracking of identical objects, it is difficult to resume tracking after occlusions that arise when objects cross one another in three-dimensional space, because the objects cannot be distinguished by their appearance. In this paper, we propose a method for multi-object tracking of identical objects using multiple high-speed vision systems. High-speed vision allows tracking information, such as the position of each object in each camera and the presence or absence of occlusion, to be obtained with high temporal density. Furthermore, we handle occlusions efficiently by exploiting geometric constraints among the multiple high-speed vision systems; these constraints become available when the cameras are positioned appropriately with respect to the region in which the objects move. Through experiments using table-tennis balls as identical objects, we show that stable multi-object tracking can be performed in real time, even when occlusions occur frequently.
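The abstract notes that high-speed vision yields per-camera positions and occlusion status at high temporal density. One way to see why this helps: at a high frame rate, each object moves only slightly between frames, so a small distance gate is enough to link identical-looking detections to existing tracks. The sketch below is a generic greedy gated nearest-neighbour association for a single camera, not the authors' method. The function name `associate`, the `gate` parameter (in pixels), and the rule that flags a track as possibly occluded when its nearby detection is claimed by another track (as when two balls merge into one blob) are all illustrative assumptions.

```python
import math
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


def associate(
    tracks: Dict[int, Point], detections: List[Point], gate: float
) -> Tuple[Dict[int, int], List[int], List[int]]:
    """Hypothetical greedy gated nearest-neighbour association for one camera.

    Assumes a high frame rate, so each track's last image position is close
    to its current detection and a small gate suffices.
    Returns (assignment, occluded, lost):
      assignment: track id -> detection index
      occluded:   tracks with an in-gate detection that another track claimed
      lost:       tracks with no detection inside the gate at all
    """
    # All (distance, track id, detection index) candidates inside the gate.
    pairs = [
        (math.dist(p, d), tid, j)
        for tid, p in tracks.items()
        for j, d in enumerate(detections)
        if math.dist(p, d) <= gate
    ]
    pairs.sort()  # closest pairs are considered first

    assignment: Dict[int, int] = {}
    used: Set[int] = set()
    for _, tid, j in pairs:
        # Each track and each detection may be used at most once.
        if tid not in assignment and j not in used:
            assignment[tid] = j
            used.add(j)

    in_gate = {tid for _, tid, _ in pairs}
    # A nearby detection existed but was taken: treat as a possible occlusion.
    occluded = sorted(tid for tid in in_gate if tid not in assignment)
    # Nothing nearby: the track is lost in this camera for this frame.
    lost = sorted(tid for tid in tracks if tid not in in_gate)
    return assignment, occluded, lost


if __name__ == "__main__":
    tracks = {0: (10.0, 10.0), 1: (12.0, 10.0), 2: (50.0, 50.0)}
    detections = [(11.0, 10.0), (100.0, 100.0)]
    print(associate(tracks, detections, gate=5.0))
```

In the example, tracks 0 and 1 both lie within the gate of the single merged detection, so track 0 takes it and track 1 is reported as possibly occluded, while track 2 has nothing nearby. In a multi-camera setup, such per-camera occlusion flags could then be resolved using views in which the objects remain separated.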

Identical objects tracking

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.