Paper:

Self-Localization of the Autonomous Robot for View-Based Navigation Using Street View Images

Nobuhiko Matsuzaki and Sadayoshi Mikami

Future University Hakodate

116-2 Kamedanakano-cho, Hakodate, Hokkaido 041-8655, Japan

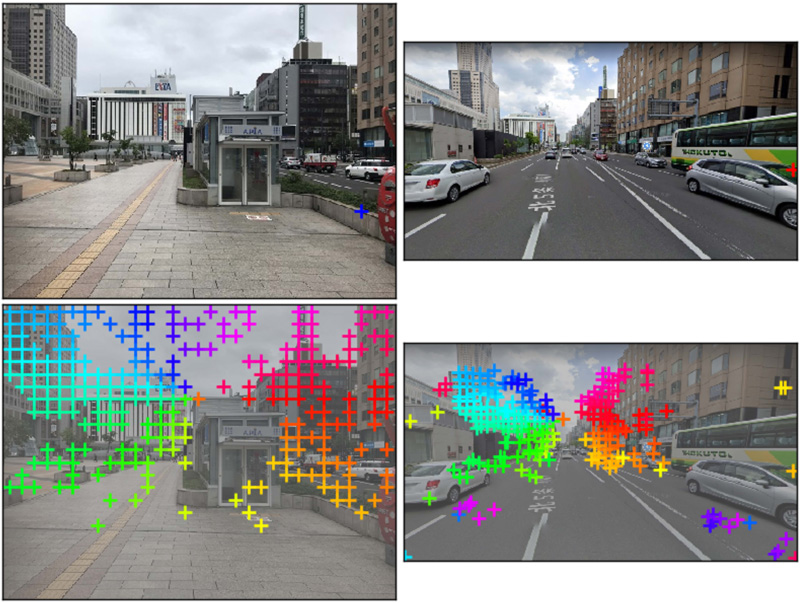

Navigation requires self-localization, which is typically performed outdoors with GPS. However, GPS often introduces significant errors in environments where radio signals are reflected, such as urban areas, sometimes making precise self-localization impossible. Humans, by contrast, can use street view images to recognize their surroundings and determine their current location. To give a robot this ability, the current scene must be matched against the images in a street view database. Because these images vary widely in field angle, capture time, and season, standard feature-based pattern matching is difficult. DeepMatching can precisely match images taken under different lighting conditions and field angles. However, it tends to misjudge street images because it detects unnecessary feature points on the road and in the sky. This study proposes methods to reduce such misjudgments: (1) computing image similarity from features such as buildings while excluding the road and sky, and (2) splitting the panoramic image into four directions and matching each direction separately. The results of each method are presented, and their performance is summarized across various images and resolutions.
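The two misjudgment-reduction methods can be illustrated with a minimal Python sketch. It assumes that point correspondences from DeepMatching are already available as `(x1, y1, x2, y2)` tuples and that a per-pixel semantic label map (for example, from a segmentation network) marks road and sky pixels. The helper names `split_panorama`, `masked_similarity`, and `best_direction`, the label strings, and the equal four-way column split of an equirectangular panorama are hypothetical illustrations, not the authors' implementation.

```python
from typing import Dict, List, Sequence, Tuple

# One correspondence: (x1, y1) in the query image, (x2, y2) in a database view.
# Assumed input format; DeepMatching's own output would need converting to this.
Match = Tuple[int, int, int, int]
# Hypothetical per-pixel semantic labels, indexed as labels[y][x].
LabelMap = Sequence[Sequence[str]]

# Classes whose feature points are ignored (method 1).
EXCLUDED = {"road", "sky"}


def split_panorama(width: int, n_views: int = 4) -> List[Tuple[int, int]]:
    """Column ranges [start, end) that split a panorama into n_views directions (method 2).

    Assumes an equirectangular panorama cut into equal-width strips; the last
    strip absorbs any remainder columns.
    """
    step = width // n_views
    return [(i * step, width if i == n_views - 1 else (i + 1) * step)
            for i in range(n_views)]


def masked_similarity(matches: List[Match], query_labels: LabelMap,
                      view_labels: LabelMap) -> int:
    """Count matches whose endpoints both lie outside road and sky regions."""
    kept = 0
    for x1, y1, x2, y2 in matches:
        if (query_labels[y1][x1] not in EXCLUDED
                and view_labels[y2][x2] not in EXCLUDED):
            kept += 1
    return kept


def best_direction(scores: Dict[str, int]) -> Tuple[str, int]:
    """Return the direction whose view has the highest masked similarity."""
    direction = max(scores, key=lambda d: scores[d])
    return direction, scores[direction]


# Toy example: 3x3 label maps and five hypothetical matches.
query = [["sky", "sky", "sky"],
         ["building", "building", "sky"],
         ["road", "road", "road"]]
view = [["sky", "sky", "sky"],
        ["building", "building", "building"],
        ["road", "road", "road"]]
toy_matches = [(0, 0, 0, 0), (0, 1, 1, 1), (1, 1, 0, 1), (2, 1, 2, 1), (1, 2, 1, 2)]

print(split_panorama(1600))  # [(0, 400), (400, 800), (800, 1200), (1200, 1600)]
print(masked_similarity(toy_matches, query, view))  # 2
print(best_direction({"north": 2, "east": 5, "south": 1, "west": 0}))  # ('east', 5)
```

Counting the surviving matches is only one possible similarity measure; a ratio of kept to total matches, or a score-weighted sum, would fit the same structure.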

Result of matching with street view image

- [1] J. Revaud, P. Weinzaepfel, Z. Harchaoui, and C. Schmid, “DeepMatching: Hierarchical Deformable Dense Matching,” Int. J. Comput. Vis., Vol.120, No.3, pp. 300-323, doi: 10.1007/s11263-016-0908-3, Dec. 2016.

- [2] P. Thorndyke and B. Hayes-Roth, “Differences in spatial knowledge acquired from maps and navigation,” Cognitive Psychology, Vol.14, pp. 560-589, doi: 10.1016/0010-0285(82)90019-6, Nov. 1982.

- [3] Y. Matsumoto, M. Inaba, and H. Inoue, “View-based navigation using an omniview sequence in a corridor environment,” Machine Vision and Applications, Vol.14, No.2, pp. 121-128, doi: 10.1007/s00138-002-0104-z, Jun. 2003.

- [4] M. Tabuse, T. Kitaoka, and D. Nakai, “Outdoor autonomous navigation using SURF features,” Artif. Life Robotics, Vol.16, No.3, pp. 356-360, doi: 10.1007/s10015-011-0950-8, Dec. 2011.

- [5] C. Shen et al., “Brain-Like Navigation Scheme based on MEMS-INS and Place Recognition,” Applied Sciences, Vol.9, No.8, Art. No.1708, doi: 10.3390/app9081708, Jan. 2019.

- [6] H. Morita, M. Hild, J. Miura, and Y. Shirai, “View-based localization in outdoor environments based on support vector learning,” 2005 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 2965-2970, doi: 10.1109/IROS.2005.1545445, Sep. 2005.

- [7] C. Valgren and A. Lilienthal, “SIFT, SURF and seasons: Long-term outdoor localization using local features,” Proc. of 3rd European Conf. on Mobile Robots, Sep. 2007.

- [8] Y. Yamagi, J. Ido, K. Takemura, Y. Matsumoto, J. Takamatsu, and T. Ogasawara, “View-sequence based indoor/outdoor navigation robust to illumination changes,” 2009 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 1229-1234, doi: 10.1109/IROS.2009.5354713, Oct. 2009.

- [9] C. Weiss, H. Tamimi, A. Masselli, and A. Zell, “A hybrid approach for vision-based outdoor robot localization using global and local image features,” 2007 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 1047-1052, doi: 10.1109/IROS.2007.4398959, Oct. 2007.

- [10] S. Oishi, Y. Inoue, J. Miura, and S. Tanaka, “SeqSLAM++: View-based robot localization and navigation,” Robotics and Autonomous Systems, Vol.112, pp. 13-21, doi: 10.1016/j.robot.2018.10.014, Feb. 2019.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.