Paper:

Localization Method Using Camera and LiDAR and its Application to Autonomous Mowing in Orchards

Hiroki Kurita*, Masaki Oku*, Takeshi Nakamura**, Takeshi Yoshida*, and Takanori Fukao*

*Ritsumeikan University

1-1-1 Noji-higashi, Kusatsu, Shiga 525-8577, Japan

**OREC Co., Ltd.

548-22 Hiyoshi, Hirokawa-cho, Yame-gun, Fukuoka 834-0195, Japan

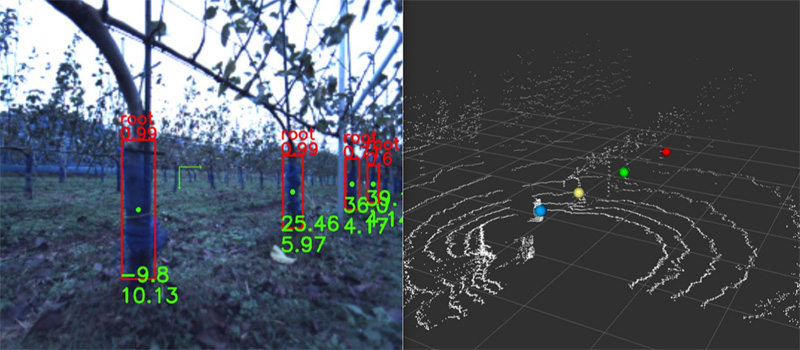

This paper presents a localization method for an unmanned ground vehicle (UGV) in field environments that combines deep learning with light detection and ranging (LiDAR). We develop a sensor fusion algorithm in which the UGV recognizes natural objects in RGB camera images using deep learning and measures the distance to the recognized objects with LiDAR. The UGV calculates its position relative to the objects, creates a reference path, and then executes path-following control. The method is applied to autonomous mowing in orchards. A mower is tracked by the UGV. The UGV must run along a tree row while keeping an appropriate distance from the tree trunks so that the mowing arm of the tracked mower properly touches the trunks. Field experiments are conducted in pear and apple orchards. The UGV localizes itself relative to the trees and performs autonomous mowing successfully. The results show that the presented method is effective.

Identified pear trunks
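
The fusion step summarized in the abstract (detect a trunk in the image, range it with LiDAR, then derive errors relative to the tree row) can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a planar LiDAR scan given as (angle, range) pairs in the vehicle frame (x forward, y left), a pinhole camera aligned with the LiDAR, and detections already reduced to a pixel-column interval. The function names `pixel_to_bearing`, `locate_trunk`, and `row_errors` and all parameters are hypothetical.

```python
import math
from statistics import median


def pixel_to_bearing(u, image_width, hfov_rad):
    """Bearing (rad, left positive) of pixel column u under a pinhole model."""
    fx = (image_width / 2.0) / math.tan(hfov_rad / 2.0)  # focal length in pixels
    cx = image_width / 2.0                               # assumed principal point
    return math.atan2(cx - u, fx)


def locate_trunk(box, scan, image_width, hfov_rad):
    """Return (x, y) of one detected trunk in the vehicle frame, or None.

    box  : (u_min, u_max) pixel columns of a detection bounding box
    scan : iterable of (angle_rad, range_m) LiDAR returns, left positive
    """
    u_min, u_max = box
    left = pixel_to_bearing(u_min, image_width, hfov_rad)
    right = pixel_to_bearing(u_max, image_width, hfov_rad)
    # Keep only the returns whose bearing falls inside the bounding box.
    hits = [(a, r) for a, r in scan if right <= a <= left and r > 0.0]
    if not hits:
        return None
    # Medians reduce the influence of grass or branches inside the box.
    r = median(r for _, r in hits)
    a = median(a for a, _ in hits)
    return (r * math.cos(a), r * math.sin(a))


def row_errors(trunks, offset_m):
    """Fit y = m*x + c through trunk positions and compare with the target offset.

    Returns (lateral_error_m, heading_error_rad). A positive lateral error
    means the vehicle is farther from the row than offset_m.
    """
    if len(trunks) < 2:
        raise ValueError("need at least two trunks to fit a row line")
    n = len(trunks)
    x_bar = sum(x for x, _ in trunks) / n
    y_bar = sum(y for _, y in trunks) / n
    sxx = sum((x - x_bar) ** 2 for x, _ in trunks)
    if sxx == 0.0:
        raise ValueError("trunks must differ in x to fit a row line")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in trunks)
    m = sxy / sxx
    c = y_bar - m * x_bar
    row_distance = abs(c) / math.sqrt(1.0 + m * m)  # vehicle origin to row line
    return (row_distance - offset_m, math.atan(m))
```

In this sketch the two returned errors would feed a path-following controller. The least-squares fit assumes the tree row runs roughly along the vehicle's forward axis, which is a simplification chosen for brevity.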


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
