Paper:

Development of an Automatic Tracking Camera System Integrating Image Processing and Machine Learning

Masato Fujitake*, Makito Inoue*, and Takashi Yoshimi**

*Graduate School of Engineering and Science, Shibaura Institute of Technology

3-7-5 Toyosu, Koto-ku, Tokyo 135-8548, Japan

**College of Engineering, Shibaura Institute of Technology

3-7-5 Toyosu, Koto-ku, Tokyo 135-8548, Japan

This paper describes the development of a robust object tracking system that combines detection methods based on image processing and machine learning, intended for cameras that automatically track construction machines at unmanned construction sites. In recent years, unmanned construction technology has been developed to protect workers in hazardous areas from secondary disasters. Surveillance cameras installed at disaster sites monitor the environment and the movements of the construction machines. By watching footage from these cameras, machine operators can control the construction machines from a safe remote site. However, keeping the surveillance cameras pointed at the target machines requires camera operators to work alongside the machine operators. To improve efficiency, an automatic tracking camera system for construction machines is required. We propose a robust and scalable object tracking algorithm based on image processing and a robust object detection algorithm based on machine learning, and we integrate the two into an accurate and robust tracking system for construction machines. The proposed image-processing algorithm continues tracking for a longer period than previous methods, and the proposed machine-learning detection method detects machines robustly by focusing on the component parts of the target objects. Evaluations in real-world field scenarios demonstrate that our methods are more accurate and robust than existing off-the-shelf object tracking algorithms while maintaining practical real-time processing performance.

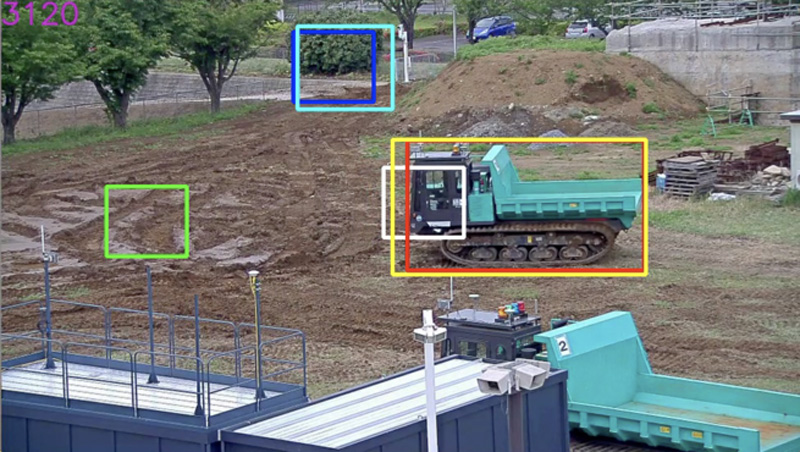

Tracking result of the automatic tracking camera system (ATCS), shown in yellow

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
