Paper:

Leveraging Motor Babbling for Efficient Robot Learning

Kei Kase*,**, Noboru Matsumoto*,**, and Tetsuya Ogata*,**

*Waseda University

3-4-1 Okubo, Shinjuku-ku, Tokyo 169-8555, Japan

**National Institute of Advanced Industrial Science and Technology (AIST)

2-4-7 Aomi, Koto-ku, Tokyo 135-0064, Japan

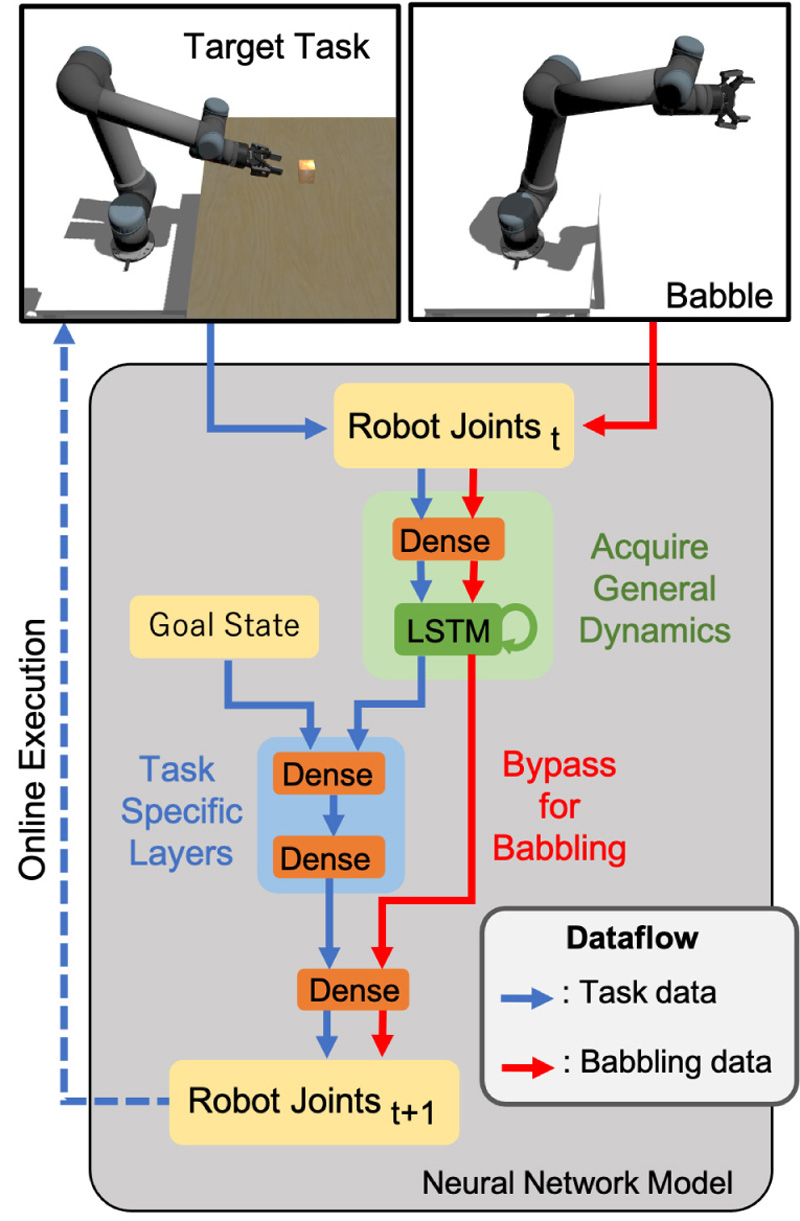

Deep robotic learning from demonstration allows robots to mimic given demonstrations and generalize their performance to unknown task setups. However, this generalization ability depends heavily on the number of demonstrations, which are costly to generate manually. Without sufficient demonstrations, robots tend to overfit to the available demonstrations and lose the robustness offered by deep learning. Applying the concept of motor babbling, a process similar to that by which human infants move their bodies randomly to obtain proprioception, is also effective for enhancing a robot's generalization ability. Furthermore, babbling data are simpler to generate than task-oriented demonstrations. Previous studies have used motor babbling within a pre-training and fine-tuning scheme, but they suffer from what was learned from the babbling data being overwritten by the task data. In this work, we propose an RNN-based robot-control framework that leverages targetless babbling data to help the robot acquire proprioception and to increase the generalization ability of the learned task by learning the babbling and task data simultaneously. Through simultaneous learning, our framework can use the dynamics obtained from the babbling data to learn the target task efficiently. In the experiment, we prepare demonstrations of a block-picking task together with aimless babbling data. With our framework, the robot learns the task faster and shows greater generalization ability when blocks are at unknown positions or move during execution.

Simultaneous learning of task and babble for robust generation
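The difference between pre-training followed by fine-tuning and simultaneous learning can be illustrated with a minimal data-sampling sketch. It is not the authors' implementation: the helper name `mixed_batches`, the `task_fraction` parameter, and sampling with replacement are all assumptions made for illustration. The only idea taken from the abstract is that every training step sees both babbling and task sequences, so neither source is learned first and then overwritten by the other.

```python
import random


def mixed_batches(task_data, babble_data, batch_size, task_fraction=0.5, seed=0):
    """Yield mini-batches that each contain both task and babbling sequences.

    Hypothetical helper: the mixing ratio and the sampling scheme are
    assumptions for illustration, not details taken from the paper.
    """
    if batch_size < 2:
        raise ValueError("batch_size must be at least 2 to mix both sources")
    rng = random.Random(seed)
    # Keep at least one sequence from each source in every batch.
    n_task = max(1, min(batch_size - 1, round(batch_size * task_fraction)))
    n_babble = batch_size - n_task
    while True:
        # Sample with replacement so a small demonstration set still works.
        batch = [("task", seq) for seq in rng.choices(task_data, k=n_task)]
        batch += [("babble", seq) for seq in rng.choices(babble_data, k=n_babble)]
        rng.shuffle(batch)
        yield batch


# Placeholder sequence identifiers standing in for recorded trajectories.
task = [f"pick_{i}" for i in range(4)]
babble = [f"babble_{i}" for i in range(20)]
batch = next(mixed_batches(task, babble, batch_size=8, task_fraction=0.25))
labels = [source for source, _ in batch]
```

In a sequential scheme, the model would instead see only `babble` batches and then only `task` batches; mixing them in each batch is one simple way to realize the simultaneous learning the abstract describes.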


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
