Paper:

Manipulating Sense of Participation in Multipartite Conversations by Manipulating Head Attitude and Gaze Direction

Kenta Higashi*, Naoya Isoyama*, Nobuchika Sakata**, and Kiyoshi Kiyokawa*

*Nara Institute of Science and Technology

8916-5 Takayama-cho, Ikoma, Nara 630-0192, Japan

1-5 Yokotani, Setaoe-cho, Otsu, Shiga 520-2194, Japan

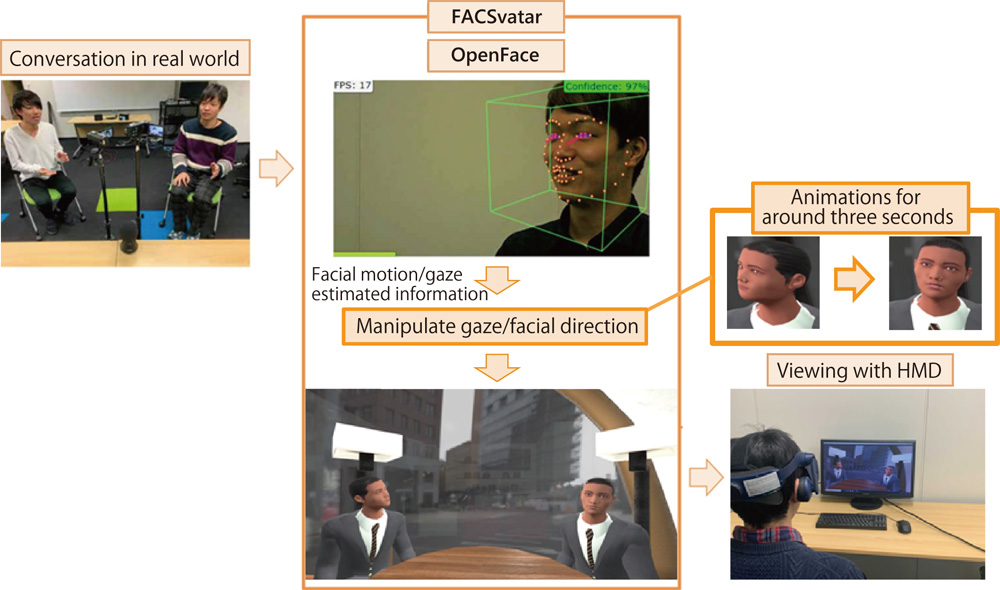

Interpersonal communication is so important in everyday life that it is desirable for everyone who participates in a conversation to be satisfied. However, not every participant can be satisfied, for example when one person cannot keep up with the conversation and feels alienated, or when someone cannot adequately convey non-verbal expressions to his/her conversation partner. In this study, among the various factors said to affect conversational satisfaction, we focused on facial direction and gaze. We attempted to lessen the sense of non-participation and increase the conversational satisfaction of the non-participating party in a tripartite conversation by modulating visual information so that the other two parties appear to turn toward the non-participating party. In experiments conducted in VR environments, we used two avatars to reproduce a conversation between two adult men that had been recorded in a real environment, and the experimental subjects watched it through HMDs. The experiments found that visually modulating the avatars’ faces and gazes so that they appeared to turn toward the subjects increased the subjects’ sense of participation in the conversation. However, the modulation did not increase the subjects’ conversational enjoyment, one of the components of conversational satisfaction.

Reproducing the conversations and speakers’ motions with avatars in VR environments
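The abstract does not specify how the avatars’ heads and gazes are redirected toward the subject. The following is a minimal sketch of one possible approach, assuming a yaw-only model on the horizontal plane with the avatar’s body facing yaw 0: the recorded head yaw is blended toward the direction of the subject, clamped to a neck range, and the eyes take up the remaining angle within their own range. The function names, the blending weight, and the 80° neck and 30° eye limits are hypothetical illustrations, not the authors’ implementation or values.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec2:
    """Position on the horizontal floor plane (x to the right, z forward), in metres."""
    x: float
    z: float


def wrap_degrees(angle: float) -> float:
    """Wrap an angle into the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def yaw_toward(head: Vec2, target: Vec2) -> float:
    """Yaw in degrees from head to target (0 = facing +z, positive = toward +x)."""
    return math.degrees(math.atan2(target.x - head.x, target.z - head.z))


def redirect_head_and_gaze(recorded_yaw: float, head: Vec2, subject: Vec2,
                           weight: float = 0.5, neck_limit: float = 80.0,
                           eye_limit: float = 30.0) -> tuple[float, float]:
    """Hypothetical redirection: return (head_yaw, eye_yaw_offset) in degrees.

    weight = 0 keeps the recorded head motion; weight = 1 turns the head fully
    toward the subject. Limits are assumed values, measured relative to a body
    that faces yaw 0.
    """
    target = yaw_toward(head, subject)
    # Shortest signed rotation from the recorded yaw to the subject direction.
    delta = wrap_degrees(target - recorded_yaw)
    head_yaw = recorded_yaw + weight * delta
    # Keep the head within the assumed neck rotation range.
    head_yaw = max(-neck_limit, min(neck_limit, head_yaw))
    # The eyes cover whatever angle the head did not, within their own range.
    residual = wrap_degrees(target - head_yaw)
    eye_yaw = max(-eye_limit, min(eye_limit, residual))
    return head_yaw, eye_yaw
```

For example, an avatar at the origin whose recorded head yaw is 0° and a subject at (1, 1) give a target of 45°; with `weight=0.5` the head turns to about 22.5° and the eyes supply the remaining 22.5°. Splitting the rotation between head and eyes is one way to keep the redirected motion close to the recorded motion while still letting the gaze land on the subject.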


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.