Paper:

Object Grasping Instructions to Support Robot by Laser Beam One Drag Operations

Momonosuke Shintani*, Yuta Fukui*, Kosuke Morioka*, Kenji Ishihata*, Satoshi Iwaki*, Tetsushi Ikeda*, and Tim C. Lüth**

*Hiroshima City University

3-4-1 Ozukahigashi, Asaminami, Hiroshima, Hiroshima 731-3194, Japan

**Technical University of Munich (TUM)

Boltzmannstrasse 15, Garching 85748, Germany

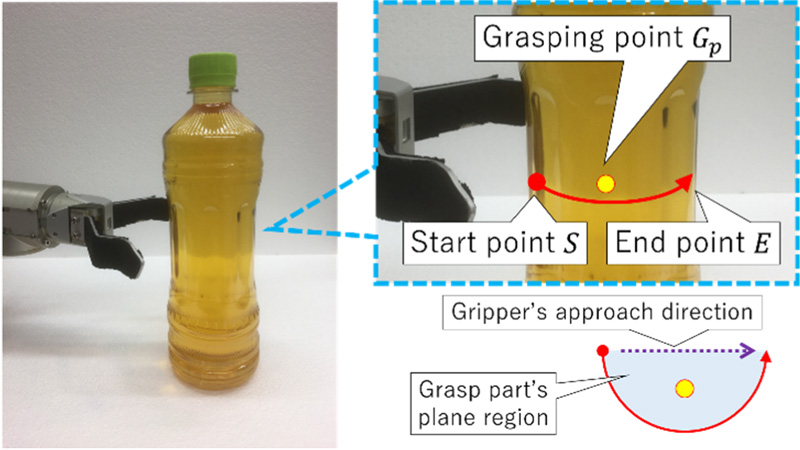

We propose a system in which users can intuitively specify a robot gripper’s position and attitude simply by tracing the surface of the part of the object to be grasped with a single stroke (one drag) of a laser beam. The proposed system builds on the “real world clicker (RWC)” that we developed earlier, a system that obtains with high accuracy the three-dimensional coordinates of laser spots on a real object by mouse-operating a time-of-flight (TOF) laser sensor mounted on a pan-tilt actuator. The grasping point is specified as the centroid of the planar region of the grasp part defined by the laser drag trajectory. The gripper attitude is specified by selecting the left or right drag mode, which correspond to the left and right buttons of the PC mouse. In this way, we realize a grasping instruction interface that lets users take into account various physical conditions of the objects, environments, and grippers. We experimentally evaluated the proposed system by measuring the time that multiple test subjects needed to give grasping instructions for various daily-use items.
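As an illustration of the grasping-point idea described in the abstract, the following minimal sketch computes a centroid and a surface normal from laser-spot coordinates sampled along one drag. It is not the authors' implementation. It assumes that the drag yields a list of 3D points, that the centroid can be approximated by the mean of those points, and that the points form a roughly planar closed loop, so that Newell's method gives the plane normal. The function names `centroid` and `newell_normal` and the sample coordinates are hypothetical.

```python
from math import sqrt


def centroid(points):
    """Mean of the sampled 3D laser-spot positions (assumed grasp point)."""
    pts = list(points)
    if not pts:
        raise ValueError("trajectory must contain at least one point")
    n = len(pts)
    return tuple(sum(p[i] for p in pts) / n for i in range(3))


def newell_normal(points):
    """Unit normal of a roughly planar closed loop, using Newell's method."""
    pts = list(points)
    nx = ny = nz = 0.0
    # Pair each point with its successor, wrapping around to close the loop.
    for (x0, y0, z0), (x1, y1, z1) in zip(pts, pts[1:] + pts[:1]):
        nx += (y0 - y1) * (z0 + z1)
        ny += (z0 - z1) * (x0 + x1)
        nz += (x0 - x1) * (y0 + y1)
    length = sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("points are collinear or degenerate; no plane is defined")
    return (nx / length, ny / length, nz / length)


if __name__ == "__main__":
    # Hypothetical drag around a 10 cm square patch at a height of 0.5 m.
    drag = [(0.0, 0.0, 0.5), (0.1, 0.0, 0.5), (0.1, 0.1, 0.5), (0.0, 0.1, 0.5)]
    print("grasp point:", centroid(drag))
    print("surface normal:", newell_normal(drag))
```

In this sketch the sign of the normal depends on the direction in which the loop is traced. How the left and right drag modes map to a gripper attitude is not specified in the abstract and is therefore not modeled here.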

Grasp PET bottle from left side

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
