Paper:

Vision-Based Sensing Systems for Autonomous Driving: Centralized or Decentralized?

Manato Hirabayashi*, Yukihiro Saito**, Kosuke Murakami**, Akihito Ohsato**, Shinpei Kato**,***, and Masato Edahiro*

*Graduate School of Information Science, Nagoya University

Furo-cho, Chikusa-ku, Nagoya, Aichi 464-8603, Japan

**Tier IV, Inc.

Jacom Building, 1-12-10 Kitashinagawa, Shinagawa-ku, Tokyo 140-0001, Japan

***Graduate School of Information Science and Technology, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-0033, Japan

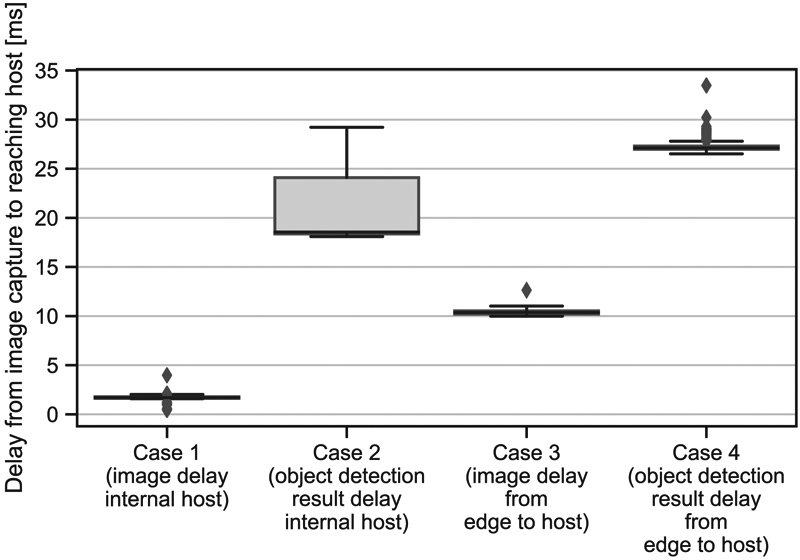

Perceiving the surrounding environment is an essential task for fully autonomous driving systems, but its high computational and network loads typically prevent a single host machine from handling the entire system. Decentralized processing is a candidate for reducing these loads; however, it has been unclear whether this approach fulfills the requirements of onboard systems, including low latency and low power consumption. Embedded-oriented graphics processing units (GPUs) are attracting great interest because they provide massively parallel computation capacity at lower power consumption than traditional GPUs. This study explored the effects of decentralized processing on autonomous driving using embedded-oriented GPUs as decentralized units. We implemented a prototype system that offloaded image-based object detection tasks onto embedded-oriented GPUs to clarify these effects. The experimental evaluation demonstrated that decentralized processing combined with network quantization achieved a delay of approximately 27 ms between the feeding of an image and the arrival of detection results at the host, a power consumption of approximately 7 W on each GPU, and a reduction in network load of orders of magnitude. Judging from these results, we concluded that decentralized processing could be a promising approach to decreasing processing latency, network load, and power consumption toward the deployment of autonomous driving systems.

Delay comparison with and without decentralized processing
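The network-load claim in the abstract can be made concrete with a back-of-envelope calculation. The sketch below compares the bandwidth needed to ship uncompressed camera frames to a host with the bandwidth needed to ship only detection results from a decentralized unit. All numbers (resolution, frame rate, boxes per frame, bytes per box) and both function names are illustrative assumptions, not values or code from the paper.

```python
# Back-of-envelope comparison of network load.
# Every number below is an assumption chosen for illustration,
# not a measurement reported in the paper.


def raw_stream_bytes_per_s(width, height, channels, fps):
    """Bandwidth needed to send uncompressed frames to the host."""
    return width * height * channels * fps


def detection_stream_bytes_per_s(boxes_per_frame, bytes_per_box, fps):
    """Bandwidth needed to send only detection results to the host."""
    return boxes_per_frame * bytes_per_box * fps


# Assumed camera: 1920x1080, 3 channels (1 byte each), 30 frames per second.
raw = raw_stream_bytes_per_s(1920, 1080, 3, 30)

# Assumed detector output: 20 boxes per frame, 24 bytes per box
# (for example four float32 coordinates, a class id, and a score).
det = detection_stream_bytes_per_s(20, 24, 30)

ratio = raw / det
print(f"raw frames:      {raw} bytes/s")
print(f"detections only: {det} bytes/s")
print(f"reduction factor: {ratio:.0f}x")
```

Under these assumptions the raw stream needs roughly 187 MB/s while the detection stream needs about 14 kB/s, a gap of about four orders of magnitude. Image compression or a different number of detections would change the exact factor.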


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
