Paper:

Pose Estimation of Swimming Fish Using NACA Airfoil Model for Collective Behavior Analysis

Hitoshi Habe*, Yoshiki Takeuchi*, Kei Terayama**, and Masa-aki Sakagami***

*Kindai University

3-4-1 Kowakae, Higashi-osaka, Osaka 577-8502, Japan

**Yokohama City University

1-7-29 Suehiro-cho, Tsurumi-ku, Yokohama, Kanagawa 230-0045, Japan

***Kyoto University

Yosida Nihonmatsu-cho, Sakyo-ku, Kyoto 606-8316, Japan

We propose a method for estimating the pose of fish in a school using a National Advisory Committee for Aeronautics (NACA) airfoil model. The posture of a fish and its variation over time indicate the state in which the fish is swimming, and posture changes in turn help explain the collective behavior of the school. Fish pose is therefore a crucial indicator for collective behavior analysis. We represent fish posture with the NACA model; because the model can describe posture dynamics, it enables more accurate tracking and movement prediction. To fit the model to video data, we first use the DeepLabCut toolbox to detect body parts (i.e., head, center, and tail fin) in an image sequence. We then apply a particle filter to estimate the parameters of the NACA model. The three body points detected by DeepLabCut are used to adjust the components of the state vector, which makes the estimates more reliable when the speed and direction of the fish change abruptly. Experiments on both simulation data and real video data demonstrate that the proposed method provides good results, including when the swimming direction changes rapidly.
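The pipeline described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the state vector `(cx, cy, heading, bend)`, the fixed body length, the random-walk motion model, and the Gaussian keypoint likelihood are all assumptions made for illustration, and the three observed points stand in for DeepLabCut output. Only the half-thickness formula of the symmetric NACA 4-digit airfoil is standard.

```python
import numpy as np

def naca_half_thickness(x, t=0.12):
    """Half-thickness of a symmetric NACA 4-digit airfoil.

    x is the chord fraction in [0, 1]; t is the maximum thickness as a
    fraction of the chord (0.12 is an illustrative value, not from the paper).
    """
    x = np.asarray(x, dtype=float)
    return 5.0 * t * (0.2969 * np.sqrt(x) - 0.1260 * x - 0.3516 * x**2
                      + 0.2843 * x**3 - 0.1015 * x**4)

def keypoints_from_state(state, length=40.0):
    """Map a hypothetical state (cx, cy, heading, bend) to head, center, tail.

    The head lies half a body length ahead of the center along the heading;
    the tail lies half a body length behind, rotated by the bend angle.
    """
    cx, cy, heading, bend = state
    half = 0.5 * length
    head = (cx + half * np.cos(heading), cy + half * np.sin(heading))
    tail_dir = heading + np.pi + bend
    tail = (cx + half * np.cos(tail_dir), cy + half * np.sin(tail_dir))
    return np.array([head, (cx, cy), tail])

def particle_filter_step(particles, observed, rng, motion_std, obs_std):
    """One predict / weight / resample cycle.

    particles: (N, 4) array of states; observed: (3, 2) array of detected
    head, center, and tail points. Returns resampled particles and the
    weighted state estimate.
    """
    # Predict: diffuse each particle with a random walk (assumed motion model).
    particles = particles + rng.normal(0.0, motion_std, size=particles.shape)

    # Weight: Gaussian likelihood of the three observed keypoints.
    predicted = np.stack([keypoints_from_state(p) for p in particles])
    sq_err = ((predicted - observed) ** 2).sum(axis=(1, 2))
    log_w = -0.5 * sq_err / obs_std**2
    w = np.exp(log_w - log_w.max())  # subtract the max for numerical stability
    w /= w.sum()

    # Estimate: weighted mean, with a circular mean for the heading angle.
    estimate = w @ particles
    estimate[2] = np.arctan2(w @ np.sin(particles[:, 2]),
                             w @ np.cos(particles[:, 2]))

    # Resample: draw particles in proportion to their weights.
    idx = rng.choice(len(particles), size=len(particles), p=w)
    return particles[idx], estimate
```

Calling `particle_filter_step` once per frame with that frame's detected keypoints yields a pose estimate per frame. `naca_half_thickness` gives the body outline around the midline, which could be used to render or score the full body shape rather than only three points.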

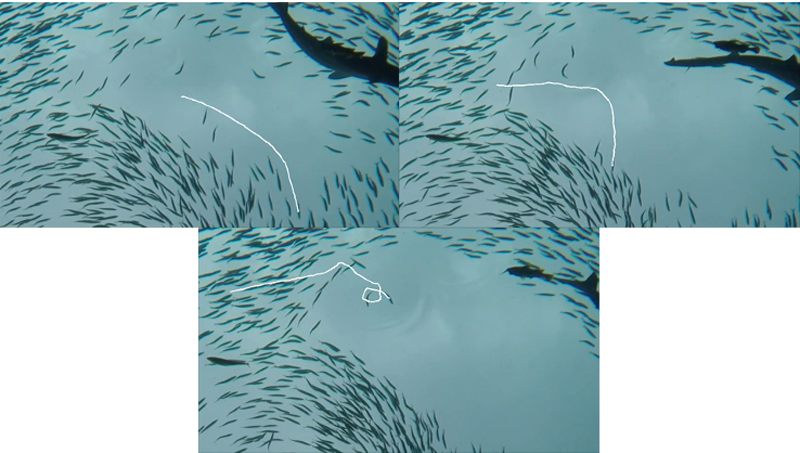

Tracking result of swimming fish in a fish school

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.