Paper:

Localization of Flying Bats from Multichannel Audio Signals by Estimating Location Map with Convolutional Neural Networks

Kazuki Fujimori*, Bisser Raytchev*, Kazufumi Kaneda*, Yasufumi Yamada*, Yu Teshima**, Emyo Fujioka**, Shizuko Hiryu**, and Toru Tamaki***

*Hiroshima University

1-4-1 Kagamiyama, Higashi-Hiroshima, Hiroshima 739-8527, Japan

**Doshisha University

1-3 Tatara-miyakodani, Kyotanabe, Kyoto 610-0394, Japan

***Nagoya Institute of Technology

Gokiso-cho, Showa-ku, Nagoya, Aichi 466-8555, Japan

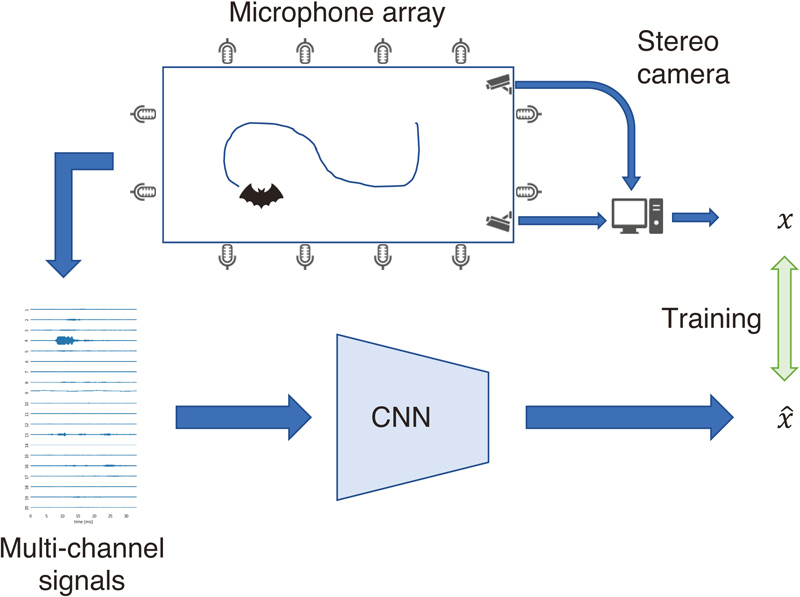

We propose a method that estimates the positions of flying bats from ultrasound audio signals recorded by a multichannel microphone array. The proposed model is a deep convolutional neural network that takes the multichannel signals as input and outputs probability maps of bat locations. We present experimental results on two ultrasound audio clips of different bat species, as well as numerical simulations with synthetically generated sounds.
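The abstract describes the pipeline only at a high level: multichannel ultrasound in, a probability map of bat locations out. The following is a minimal sketch of the two ends of that pipeline under stated assumptions; it omits the CNN itself and is not the authors' implementation. `synth_multichannel` is a hypothetical simulator in the spirit of the synthetic-sound experiments (a single linear FM sweep, delay = distance / speed of sound, 1/distance attenuation). `location_map` builds a Gaussian target map on an assumed 2-D (x, y) grid, and `find_peaks` reads bat positions back as local maxima above a threshold. The call parameters, sampling rate, map width `sigma`, grid and threshold are all illustrative choices, not settings from the paper.

```python
import math

# Assumed speed of sound in air (m/s, roughly 20 °C); not taken from the paper.
SPEED_OF_SOUND = 343.0


def synth_multichannel(src, mics, fs=500_000, duration=0.02,
                       f0=80_000.0, f1=40_000.0, call_len=0.002):
    """Hypothetical simulator: one downward linear FM sweep emitted at `src`.

    Each microphone channel receives the call delayed by distance / c and
    scaled by 1 / distance (spherical spreading only; atmospheric absorption,
    beam directivity, echoes and noise are ignored).
    """
    n = int(fs * duration)
    channels = []
    for mic in mics:
        d = math.dist(src, mic)
        delay = d / SPEED_OF_SOUND
        gain = 1.0 / max(d, 1e-3)
        ch = [0.0] * n
        for i in range(n):
            t = i / fs - delay  # time since the call reached this microphone
            if 0.0 <= t < call_len:
                # Instantaneous frequency sweeps linearly from f0 to f1.
                phase = 2.0 * math.pi * (f0 * t + (f1 - f0) * t * t / (2.0 * call_len))
                ch[i] = gain * math.sin(phase)
        channels.append(ch)
    return channels


def location_map(positions, grid_x, grid_y, sigma=0.1):
    """Target map: at each grid cell, the max over bats of a Gaussian bump
    centred on that bat's (x, y) position. Rows follow grid_y, columns grid_x."""
    return [
        [max((math.exp(-((x - px) ** 2 + (y - py) ** 2) / (2.0 * sigma ** 2))
              for px, py in positions), default=0.0)
         for x in grid_x]
        for y in grid_y
    ]


def find_peaks(prob_map, grid_x, grid_y, threshold=0.5):
    """Return (x, y) of cells that are >= threshold and >= all 8 neighbours."""
    rows, cols = len(prob_map), len(prob_map[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = prob_map[r][c]
            if v < threshold:
                continue
            neighbours = [prob_map[rr][cc]
                          for rr in range(max(r - 1, 0), min(r + 2, rows))
                          for cc in range(max(c - 1, 0), min(c + 2, cols))
                          if (rr, cc) != (r, c)]
            if all(v >= nb for nb in neighbours):
                peaks.append((grid_x[c], grid_y[r]))
    return peaks
```

For example, on a 3 m × 3 m grid with 0.1 m spacing (`gx = gy = [i * 0.1 for i in range(31)]`), `find_peaks(location_map([(1.0, 2.0)], gx, gy), gx, gy)` returns `[(1.0, 2.0)]`. In a trained system the same peak-picking step would be applied to the network's predicted map instead of the target map; taking the per-cell maximum rather than the sum keeps nearby bats as separate peaks of height 1.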

Bat localization with deep learning


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
