Paper:

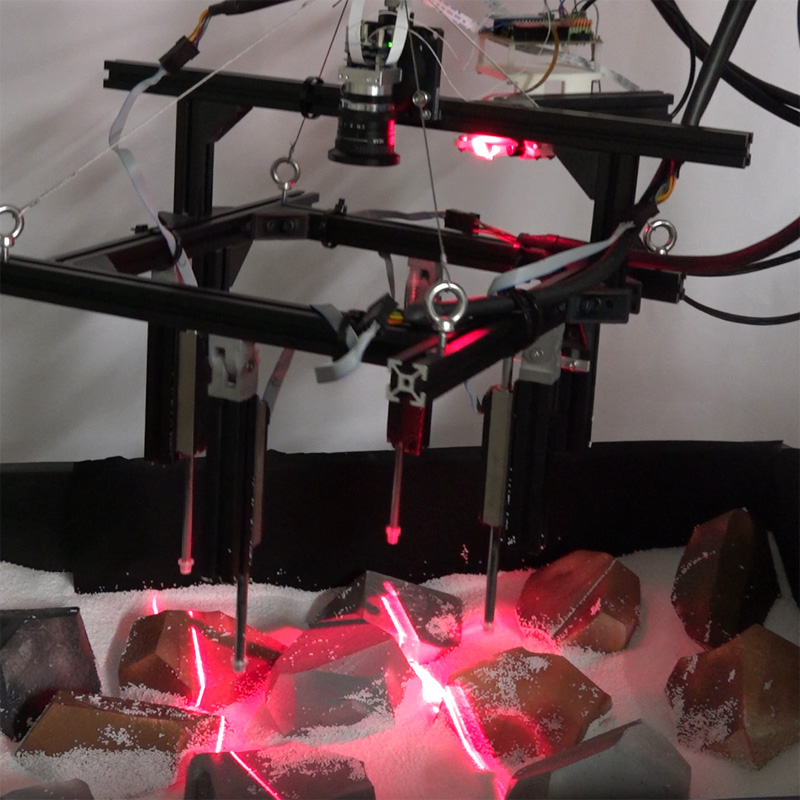

Stabilization System for UAV Landing on Rough Ground by Adaptive 3D Sensing and High-Speed Landing Gear Adjustment

Mikihiro Ikura, Leo Miyashita, and Masatoshi Ishikawa

Graduate School of Information Science and Technology, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

This paper proposes a real-time landing gear control system based on adaptive, high-speed 3D sensing that enables unmanned aerial vehicles (UAVs) to land safely on rough ground. The system steers the measurement area on the ground according to the position and attitude of the UAV, so that high-speed 3D sensing is concentrated on the areas where the landing gears are expected to contact the ground. Focusing the measurement area in this way improves the spatio-temporal resolution of the measurement, which allows the system to recognize the detailed shape of the ground and the dynamics. The measurement results are used to control the lengths of the landing gears at high speed so that all the landing gears contact the ground simultaneously, which reduces instability at touchdown. In the experimental setup, the proposed system measured the heights of the contact points of two landing gears at a rate of 100 Hz and achieved almost simultaneous ground contact, within 36 ms.
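The idea of adjusting gear lengths from measured contact heights can be illustrated with a minimal sketch. It is not the authors' controller: it assumes a level body descending vertically at constant speed, and the names `GearLimits`, `target_gear_lengths`, and `contact_time_spread`, as well as all numeric values, are hypothetical. Under those assumptions, a gear of length l over ground of height h touches down when the body is at height l + h, so simultaneous contact requires l + h to be equal for every gear.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class GearLimits:
    """Hypothetical stroke limits of one landing gear, in metres."""
    min_length: float
    max_length: float


def target_gear_lengths(ground_heights: Sequence[float],
                        nominal_length: float,
                        limits: GearLimits) -> List[float]:
    """Gear lengths that equalize (length + ground height) across gears.

    Assumes a level body and vertical descent (illustrative assumption).
    Lengths are clamped to the stroke limits, so contact is only exactly
    simultaneous when no gear saturates.
    """
    if not ground_heights:
        raise ValueError("need at least one measured contact height")
    mean_height = sum(ground_heights) / len(ground_heights)
    lengths = []
    for h in ground_heights:
        # Higher ground under a gear means a shorter gear.
        wanted = nominal_length + (mean_height - h)
        lengths.append(min(max(wanted, limits.min_length), limits.max_length))
    return lengths


def contact_time_spread(lengths: Sequence[float],
                        ground_heights: Sequence[float],
                        descent_speed: float) -> float:
    """Time between the first and last gear contact, in seconds."""
    if descent_speed <= 0:
        raise ValueError("descent_speed must be positive")
    # Body height at which each gear tip reaches the ground.
    touchdown_levels = [l + h for l, h in zip(lengths, ground_heights)]
    return (max(touchdown_levels) - min(touchdown_levels)) / descent_speed


if __name__ == "__main__":
    heights = [0.00, 0.05]            # measured contact heights (m), made up
    limits = GearLimits(0.10, 0.30)   # made-up stroke limits (m)
    adjusted = target_gear_lengths(heights, 0.20, limits)
    print("adjusted lengths:", adjusted)
    print("spread, fixed gears:", contact_time_spread([0.20, 0.20], heights, 0.5))
    print("spread, adjusted gears:", contact_time_spread(adjusted, heights, 0.5))
```

In a real system the height estimates would be refreshed at the sensing rate (100 Hz in the reported experiment, i.e., every 10 ms) and the targets recomputed on each update; body tilt and horizontal motion, which this sketch ignores, would also have to be accounted for.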

Landing demonstration on rough ground with the high-speed stabilization system


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
