Paper:

Effects of Presenting People Flow Information by Vibrotactile Stimulation for Visually Impaired People on Behavior Decision

Kanon Fujino and Mihoko Niitsuma

Department of Precision Mechanics, Chuo University

1-13-27 Kasuga, Bunkyo-ku, Tokyo 112-8551, Japan

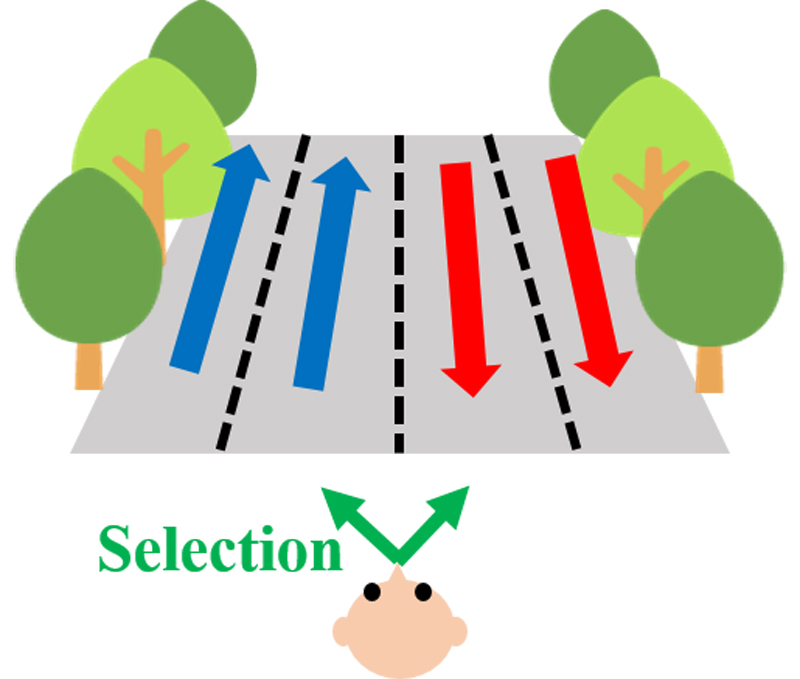

Many studies have addressed walking support for visually impaired people. However, only a few have helped them gain a broad understanding of their surrounding environment. This study focuses on one kind of environmental information: the flow of people, which is formed by the many pedestrians present around the walking environment. If visually impaired people can grasp the dynamic environment created by people flow and make decisions independently, they can walk with ease and peace of mind. A method is proposed that extracts people flow information, selects the information necessary for understanding the environment, and presents it by vibrotactile stimulation. The effectiveness of the proposed method for understanding the surrounding environment and the influence of the vibration information on behavior decisions were verified.

Select a walking position based on the flow


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
