Paper:

Convolutional Neural Network Transfer Learning Applied to the Affective Auditory P300-Based BCI

Akinari Onishi*,**

*Chiba University

1-33 Yayoicho, Inage-ku, Chiba-shi, Chiba 263-8522, Japan

**National Institute of Technology, Kagawa College

551 Kohda, Takuma-cho, Mitoyo-shi, Kagawa 769-1192, Japan

A brain-computer interface (BCI) enables interaction with the external world via electroencephalography (EEG) signals. Recently, deep learning methods have been applied to BCIs to reduce the time required to record training data. However, few comparative evaluations exist, so more evidence is needed. To provide such evidence, this study proposed a deep learning method named the time-wise convolutional neural network (TWCNN) and applied it to a BCI dataset. In the evaluation, EEG data from one subject were classified using previously recorded EEG data from other subjects. TWCNN showed the highest accuracy, which was significantly higher than that of the typically used classifier. The results suggest that the deep learning method may be useful for reducing the recording time of training data.
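The cross-subject evaluation described above, in which one subject's EEG is classified by a model trained only on other subjects' recordings, can be illustrated with a leave-one-subject-out loop. The following is a minimal sketch under stated assumptions, not the paper's implementation: the data are synthetic, the nearest-class-mean classifier is a simple stand-in for TWCNN, and all function and variable names (`leave_one_subject_out`, `cross_subject_accuracy`, and so on) are hypothetical.

```python
import numpy as np


def leave_one_subject_out(subject_ids):
    """Yield (held_out, train_idx, test_idx) for each unique subject."""
    subject_ids = np.asarray(subject_ids)
    for held_out in np.unique(subject_ids):
        test_mask = subject_ids == held_out
        yield held_out, np.flatnonzero(~test_mask), np.flatnonzero(test_mask)


def fit_class_means(X, y):
    """Stand-in classifier (NOT TWCNN): one mean feature vector per class."""
    classes = np.unique(y)
    means = np.stack([X[y == c].mean(axis=0) for c in classes])
    return classes, means


def predict_class_means(model, X):
    """Assign each epoch to the class whose mean is closest."""
    classes, means = model
    # Squared Euclidean distance from every epoch to every class mean.
    dists = ((X[:, None, :] - means[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]


def cross_subject_accuracy(X, y, subject_ids):
    """Train on all other subjects, test on the held-out subject."""
    scores = {}
    for held_out, train_idx, test_idx in leave_one_subject_out(subject_ids):
        model = fit_class_means(X[train_idx], y[train_idx])
        pred = predict_class_means(model, X[test_idx])
        scores[int(held_out)] = float((pred == y[test_idx]).mean())
    return scores


# Synthetic stand-in data (assumption): 4 subjects, 50 epochs each, 16 features.
rng = np.random.default_rng(0)
subject_ids = np.repeat(np.arange(4), 50)
y = rng.integers(0, 2, size=subject_ids.size)  # 1 = target, 0 = non-target
X = rng.normal(size=(subject_ids.size, 16)) + 1.5 * y[:, None]
scores = cross_subject_accuracy(X, y, subject_ids)
print(scores)  # per-subject accuracy, keyed by held-out subject
```

Because the held-out subject contributes no training epochs, a scheme like this measures how well a classifier transfers without subject-specific calibration recordings, which is the property the abstract links to shorter recording time.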

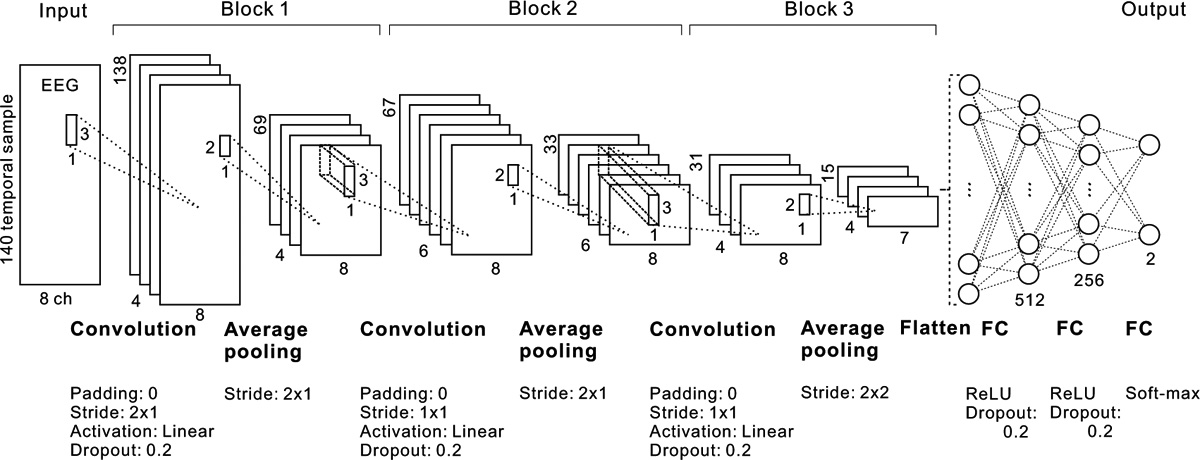

Time-wise convolutional neural network

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.