Paper:

Human-Wants Detection Based on Electroencephalogram Analysis During Exposure to Music

Shin-ichi Ito, Momoyo Ito, and Minoru Fukumi

Tokushima University

2-1 Minami-josanjima, Tokushima 770-8506, Japan

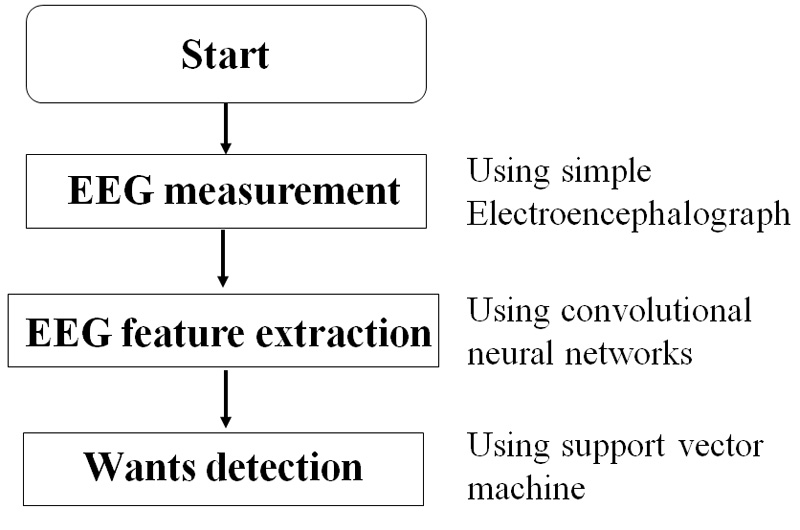

We propose a method to detect human wants from electroencephalogram (EEG) measurements and to specify the sensing positions of the relevant brain activity. EEG signals can be analyzed using various techniques. Recently, convolutional neural networks (CNNs) have been employed to analyze EEG signals, and these analyses have produced excellent results. Therefore, this paper employs a CNN to extract EEG features. Support vector machines (SVMs) have also shown good results for EEG pattern classification, so this paper employs SVMs to classify human cognition into “wants,” “not wants,” and “other feelings.” In EEG measurements, the electrical activity of the brain is recorded using electrodes placed on the scalp. The sensing positions are related to frontal and/or temporal cortex activity, although the mechanism that creates wants is not clear. To specify the sensing positions and detect human wants, we conducted experiments using real EEG data. We confirmed that the mean and standard deviation of the detection accuracy rate were 99.4% and 0.58%, respectively, when the target sensing positions were related to both frontal and temporal cortex activity. These results prove that both frontal and temporal cortex activities are relevant for creating wants in the human brain, and that the CNN and SVM are effective for the detection of human wants.
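The reported figures are a mean and a standard deviation of the detection accuracy rate over the three classes. As a minimal sketch of how such a summary can be computed, the Python below scores each evaluation run and then aggregates the runs. The helper names (`accuracy`, `summarize`), the toy labels, and the choice of the population standard deviation are all assumptions made for illustration; the abstract does not state how the runs were organized or which form of the standard deviation was used, and the toy values are not the paper's data.

```python
from statistics import mean, pstdev

# The three classes named in the abstract.
LABELS = ("wants", "not wants", "other feelings")


def accuracy(true_labels, predicted_labels):
    """Return the percentage of samples whose prediction matches the truth."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must be non-empty and equally long")
    hits = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return 100.0 * hits / len(true_labels)


def summarize(run_accuracies):
    """Return (mean, population standard deviation) of per-run accuracies.

    Assumption: population form (pstdev); statistics.stdev would give the
    sample form instead.
    """
    return mean(run_accuracies), pstdev(run_accuracies)


# Toy, made-up runs (hypothetical; not taken from the paper).
truth = ["wants", "not wants", "other feelings", "wants"]
runs = [
    (truth, ["wants", "not wants", "other feelings", "wants"]),
    (truth, ["wants", "not wants", "other feelings", "not wants"]),
]

per_run = [accuracy(t, p) for t, p in runs]
avg, spread = summarize(per_run)
print(f"mean accuracy = {avg:.2f}%, standard deviation = {spread:.2f}%")
```

In a real evaluation, each run would correspond to one train/test split of the EEG feature vectors, with the predicted labels coming from the trained classifier.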

Human-wants detection method

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
