Paper:

Moving Horizon Estimation with Probabilistic Data Association for Object Tracking Considering System Noise Constraint

Tomoya Kikuchi, Kenichiro Nonaka, and Kazuma Sekiguchi

Tokyo City University

1-28-1 Tamazutsumi, Setagaya, Tokyo 158-8857, Japan

Object tracking is widely used and has become indispensable in automation technology. In environments containing many objects, however, occlusion and false recognition occur frequently. To alleviate these issues, we propose a novel object tracking method based on moving horizon estimation that incorporates probabilistic data association (MHE-PDA) through a probabilistic data association filter (PDAF). Because moving horizon estimation (MHE) is carried out by numerical optimization, the estimate can be kept consistent with physical constraints and made robust to outliers. The robustness of the proposed method against occlusion and false recognition is verified in simulations of a cluttered environment by comparison with PDAF.
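To make the data-association idea concrete, the following minimal sketch computes the association probabilities of a textbook PDAF for a single time step. It is not the authors' MHE-PDA formulation: the function name `pda_weights`, the isotropic innovation covariance `s2 * I`, and all default parameter values are assumptions made for illustration, and the measurements are assumed to have already passed the validation gate.

```python
import math


def pda_weights(z_pred, measurements, s2,
                p_detect=0.9, p_gate=0.99, clutter_density=0.1):
    """Textbook PDAF association probabilities for 2-D position measurements.

    Assumes an isotropic innovation covariance S = s2 * I (illustrative only).
    Returns (beta_0, [beta_1, ..., beta_m]); beta_0 is the probability that
    none of the measurements originates from the target.
    """
    likelihoods = []
    for zx, zy in measurements:
        # Squared Mahalanobis distance of the innovation under S = s2 * I.
        d2 = ((zx - z_pred[0]) ** 2 + (zy - z_pred[1]) ** 2) / s2
        # 2-D Gaussian density N(z; z_pred, s2 * I).
        gauss = math.exp(-0.5 * d2) / (2.0 * math.pi * s2)
        # Likelihood ratio of "target-originated" versus "clutter".
        likelihoods.append(p_detect * gauss / clutter_density)

    # Unnormalized weight of the "target not detected in the gate" event.
    miss = 1.0 - p_detect * p_gate
    total = miss + sum(likelihoods)
    return miss / total, [lik / total for lik in likelihoods]


# Hypothetical example: one measurement near the prediction, one far away.
beta0, betas = pda_weights((0.0, 0.0), [(0.1, 0.0), (1.5, 1.5)], s2=0.25)
```

The weights sum to one, and a measurement close to the predicted position receives a much larger weight than a distant one, which is what makes the association soft rather than a hard nearest-neighbor choice. How the paper folds such weights into the MHE cost function is not stated in the abstract.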

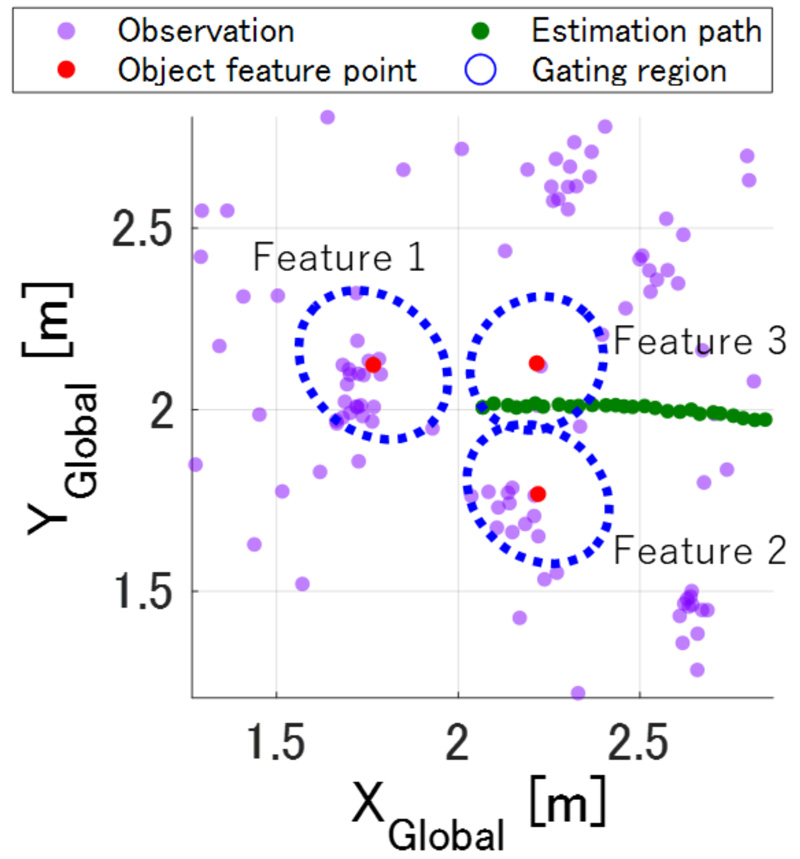

Tracking a target surrounded by false objects

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
