Paper:

Semi-Automatic Dataset Generation for Object Detection and Recognition and its Evaluation on Domestic Service Robots

Yutaro Ishida and Hakaru Tamukoh

Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

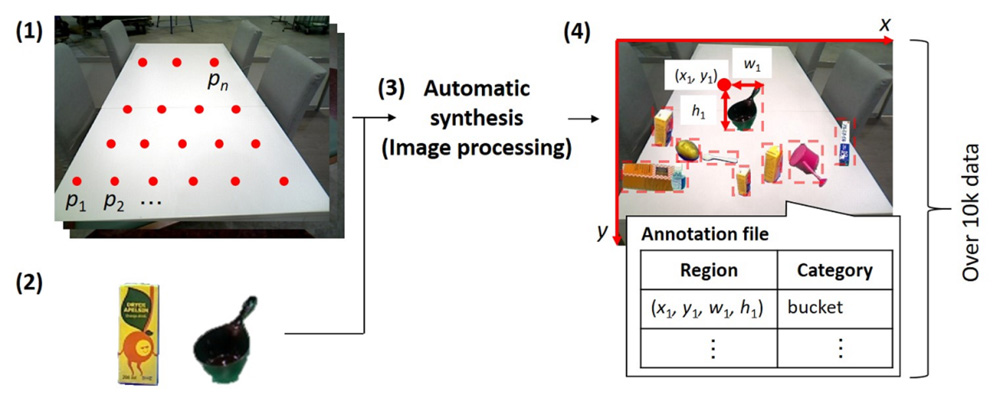

This paper proposes a method for semi-automatically generating a dataset for deep neural networks that perform end-to-end object detection and classification from images, with the intended application being domestic service robots. In the proposed method, a background image of the floor or furniture is captured first. Objects are then captured from various viewpoints. The background image and the object images are composited in software to generate images of the virtual scenes that the robot is expected to encounter. Because the region and category of each object composited onto the background are known at this point, the annotation files used as teaching signals by the deep neural network are generated automatically. This reduces the human workload for dataset generation. Experimental results showed that the proposed method reduced the time taken to generate one data unit from 167 s, when performed manually, to 0.58 s, i.e., to approximately 1/287 of the manual time. The dataset generated using the proposed method was used to train a deep neural network, which was then deployed on a domestic service robot for evaluation. The robot was entered into the World Robot Challenge, in which, out of ten trials, it succeeded in touching the target object eight times and grasping it four times.
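The compositing step described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`composite`, `make_annotation`, `generate_sample`), the list-of-rows image representation, the binary object mask, the random placement, and the normalized `class x_center y_center width height` annotation line are all assumptions made for illustration. The point it shows is that once an object is pasted at a known position, its bounding box and category follow directly, so no manual labeling is needed.

```python
import random


def composite(background, obj, mask, top, left):
    """Paste obj onto a copy of background wherever mask is truthy.

    Images are lists of rows of pixel values; mask has the same shape as obj.
    Returns the composited image and the tight bounding box
    (x_min, y_min, x_max, y_max), inclusive, in background coordinates.
    """
    out = [row[:] for row in background]  # leave the captured background untouched
    xs, ys = [], []
    for r, (obj_row, mask_row) in enumerate(zip(obj, mask)):
        for c, (pixel, keep) in enumerate(zip(obj_row, mask_row)):
            if keep:
                out[top + r][left + c] = pixel
                xs.append(left + c)
                ys.append(top + r)
    return out, (min(xs), min(ys), max(xs), max(ys))


def make_annotation(category_id, box, width, height):
    """Build one annotation line (hypothetical format, normalized to image size)."""
    x_min, y_min, x_max, y_max = box
    w = (x_max - x_min + 1) / width
    h = (y_max - y_min + 1) / height
    x_center = (x_min + x_max + 1) / 2 / width
    y_center = (y_min + y_max + 1) / 2 / height
    return f"{category_id} {x_center:.4f} {y_center:.4f} {w:.4f} {h:.4f}"


def generate_sample(background, obj, mask, category_id, rng):
    """Place the object at a random position and return (image, annotation)."""
    height, width = len(background), len(background[0])
    top = rng.randrange(height - len(obj) + 1)
    left = rng.randrange(width - len(obj[0]) + 1)
    image, box = composite(background, obj, mask, top, left)
    return image, make_annotation(category_id, box, width, height)


if __name__ == "__main__":
    background = [[0] * 10 for _ in range(8)]   # 10 x 8 toy background
    obj = [[9, 9, 9], [9, 9, 9]]                # 3 x 2 toy object
    mask = [[0, 1, 1], [1, 1, 1]]               # 0 marks a pixel outside the object
    image, annotation = generate_sample(background, obj, mask, 3, random.Random(0))
    print(annotation)
```

Calling `generate_sample` repeatedly with different backgrounds, object views, and positions would yield as many labeled images as needed. A real pipeline would additionally have to handle object segmentation, scaling, and blending at the object boundary, none of which is covered by this sketch.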

Dataset generation for object detection

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.