Paper:

Mood Perception Model for Social Robot Based on Facial and Bodily Expression Using a Hidden Markov Model

Jiraphan Inthiam*, Abbe Mowshowitz**, and Eiji Hayashi*

*Graduate School of Computer Science and Systems Engineering, Kyushu Institute of Technology

680-4 Kawazu, Iizuka, Fukuoka 820-8502, Japan

**Department of Computer Science, The City College of New York

160 Convent Avenue, New York, NY 10031, USA

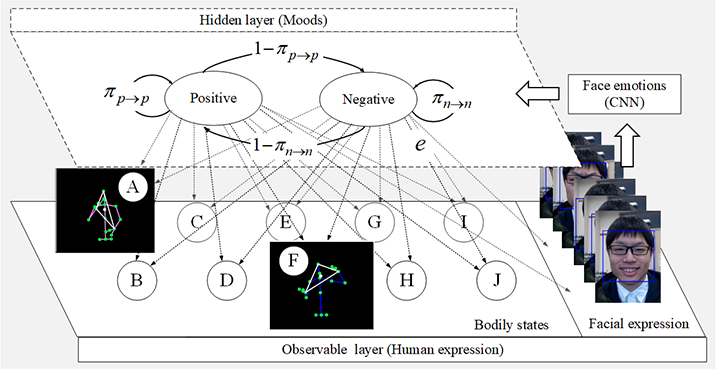

In the normal course of human interaction, people typically exchange more than spoken words: emotion is conveyed at the same time through nonverbal messages. In this paper, we present a new perceptual model of mood detection designed to enhance a robot's social skills. The model assumes that 1) there are only two hidden states (positive or negative mood), and 2) these states can be recognized from certain facial and bodily expressions. The Viterbi algorithm is used to infer the hidden state from its visible physical manifestations. We verified the model by comparing its estimates with those produced by human observers. The comparison shows that our model performs as well as the human observers, so it could be used to enhance a robot's social skills, giving the robot the flexibility to interact in a more human-oriented way.
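The decoding step described above can be illustrated with a minimal Viterbi sketch for a two-state hidden Markov model (positive vs. negative mood). This is a generic textbook Viterbi decoder, not the authors' implementation. The observation symbols (e.g. `"smile/open"`, a hypothetical discretized facial/bodily expression pair) and all start, transition, and emission probabilities are illustrative placeholders, not values from the paper.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Two hidden states, as assumed by the model in the abstract.
STATES = ("positive", "negative")

# HYPOTHETICAL parameters for illustration only; not the paper's trained values.
START_P = {"positive": 0.5, "negative": 0.5}
TRANS_P = {
    "positive": {"positive": 0.8, "negative": 0.2},
    "negative": {"positive": 0.2, "negative": 0.8},
}
# HYPOTHETICAL observation alphabet: "<facial>/<bodily>" expression pairs.
EMIT_P = {
    "positive": {"smile/open": 0.6, "neutral/neutral": 0.3, "frown/closed": 0.1},
    "negative": {"smile/open": 0.1, "neutral/neutral": 0.3, "frown/closed": 0.6},
}


def _log(p: float) -> float:
    """Log that maps a zero probability to -inf instead of raising."""
    return math.log(p) if p > 0 else float("-inf")


def viterbi(
    obs: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> Tuple[List[str], float]:
    """Return the most likely hidden-state path and its log-probability."""
    if not obs:
        return [], 0.0
    # V[t][s]: best log-probability of any path ending in state s at time t.
    V = [{s: _log(start_p[s]) + _log(emit_p[s][obs[0]]) for s in states}]
    back: List[Dict[str, str]] = [{}]
    for t in range(1, len(obs)):
        V.append({})
        back.append({})
        for s in states:
            # Choose the best predecessor state for s at time t.
            prev, score = max(
                ((p, V[t - 1][p] + _log(trans_p[p][s])) for p in states),
                key=lambda item: item[1],
            )
            V[t][s] = score + _log(emit_p[s][obs[t]])
            back[t][s] = prev
    # Backtrack from the best final state.
    last = max(states, key=lambda s: V[-1][s])
    path = [last]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, V[-1][last]


if __name__ == "__main__":
    seq = ["smile/open", "smile/open", "frown/closed", "frown/closed", "neutral/neutral"]
    path, logp = viterbi(seq, STATES, START_P, TRANS_P, EMIT_P)
    print(path, math.exp(logp))
```

With these placeholder numbers, the sequence above decodes to `positive, positive, negative, negative, negative`: the sticky transition matrix keeps the mood from flipping back on the ambiguous final `"neutral/neutral"` observation. Working in log space avoids numerical underflow on long observation sequences.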

Mood perception model based on human expression


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.