Paper:

Underwater Structure from Motion for Cameras Under Refractive Surfaces

Xiaorui Qiao, Atsushi Yamashita, and Hajime Asama

The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

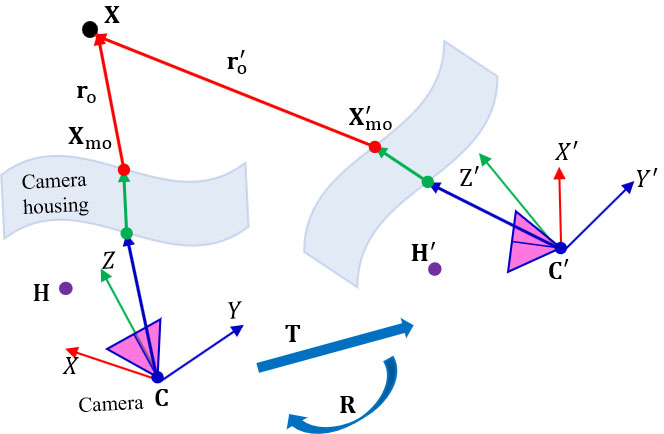

Structure from Motion (SfM) is a three-dimensional (3D) reconstruction technique that estimates the structure of an object from images taken by a single moving camera. Cameras deployed underwater are generally enclosed in waterproof housings, so light rays reaching the camera are refracted twice: once at the interface between the water and the camera housing, and again at the interface between the housing and the air inside it. Images of underwater scenes are therefore degraded by refractive distortion, and if this distortion is not properly addressed, the geometric reconstruction can be severely distorted. Here, we propose an SfM approach that handles refraction in a camera system that includes a refractive surface. The effect of light refraction is modeled precisely by a refractive camera model, and based on this model, we propose a new calibration and camera pose estimation method. The proposed method enables accurate 3D reconstruction with the refractive camera system. Experiments on both simulated data and real images show that the proposed method achieves accurate reconstruction and effectively reduces refractive distortion compared with conventional SfM.
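To make the two-interface refraction concrete, the following is a minimal Python sketch that assumes a flat port perpendicular to the optical axis (the housing geometry is not specified in this abstract). It back-projects a pixel ray through air, glass and water using the vector form of Snell's law, then compares the resulting 3D point with the one a conventional pinhole model would predict. The port distance `d`, the glass thickness, the refractive indices, and the helper names `refract`, `trace_flat_port` and `point_at_depth` are all hypothetical illustrations. They are not the authors' calibration or pose estimation method.

```python
import numpy as np

# Refractive indices: illustrative assumptions, not values from the paper.
N_AIR, N_GLASS, N_WATER = 1.0, 1.5, 1.33


def refract(v, normal, n1, n2):
    """Vector form of Snell's law.

    v      -- unit direction of the incoming ray
    normal -- unit surface normal facing the incoming ray (dot(normal, v) < 0)
    n1, n2 -- refractive indices on the incoming and outgoing sides
    Returns the unit refracted direction, or None on total internal reflection.
    """
    r = n1 / n2
    cos_i = -np.dot(normal, v)
    k = 1.0 - r**2 * (1.0 - cos_i**2)
    if k < 0.0:
        return None  # total internal reflection
    t = r * v + (r * cos_i - np.sqrt(k)) * normal
    return t / np.linalg.norm(t)


def trace_flat_port(x, y, d=0.05, thickness=0.01):
    """Back-project normalized image point (x, y) through a hypothetical flat port.

    The camera centre is at the origin looking along +z; the air/glass
    interface lies at z = d and the glass/water interface at z = d + thickness.
    Returns (origin, direction) of the ray once it has entered the water.
    Light travelling from air cannot hit total internal reflection at either
    interface here, so refract() does not return None in this function.
    """
    normal = np.array([0.0, 0.0, -1.0])  # both interfaces face the camera
    v0 = np.array([x, y, 1.0])
    v0 /= np.linalg.norm(v0)
    # First refraction: air -> glass at z = d.
    p1 = v0 * (d / v0[2])
    v1 = refract(v0, normal, N_AIR, N_GLASS)
    # Second refraction: glass -> water at z = d + thickness.
    p2 = p1 + v1 * (thickness / v1[2])
    v2 = refract(v1, normal, N_GLASS, N_WATER)
    return p2, v2


def point_at_depth(origin, direction, z):
    """Point where the ray (origin + s * direction) reaches depth z."""
    return origin + direction * ((z - origin[2]) / direction[2])


if __name__ == "__main__":
    x, y, depth = 0.4, 0.0, 2.0  # hypothetical off-axis pixel and scene depth
    origin, direction = trace_flat_port(x, y)
    refracted_pt = point_at_depth(origin, direction, depth)
    pinhole_pt = np.array([x * depth, y * depth, depth])  # ignores refraction
    print("refractive model:", refracted_pt)
    print("pinhole model:   ", pinhole_pt)
```

Water is optically denser than air, so the ray bends toward the port normal. The point predicted by the refractive model therefore lies closer to the optical axis than the pinhole estimate, and the gap grows with the off-axis angle. This systematic offset is one example of the refractive distortion that a conventional SfM pipeline leaves uncorrected.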

Refractive camera pose estimation

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.