Paper:

Improved Artificial Bee Colony Algorithm and its Application in Classification

Haiquan Wang*, Jianhua Wei**, Shengjun Wen*, Hongnian Yu***, and Xiguang Zhang*

*Zhongyuan Petersburg Aviation College, Zhongyuan University of Technology

41 Zhongyuan Road, Zhengzhou 450007, China

**School of Electric and Information Engineering, Zhongyuan University of Technology

41 Zhongyuan Road, Zhengzhou 450007, China

***Faculty of Science and Technology, Bournemouth University

Fern Barrow, Poole, Dorset BH12 5BB, United Kingdom

To improve classification accuracy, a novel classification methodology based on the artificial bee colony (ABC) algorithm is proposed for selecting the optimal feature subset and SVM parameters. To balance the exploration and exploitation abilities of the traditional ABC algorithm, improvements are introduced in the generation of the initial solution set and in the onlooker bee stage. The proposed algorithm is applied to four UCI datasets with different attribute characteristics, and the results demonstrate its efficiency.
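The abstract does not spell out the modified initialization or onlooker-bee rules, so the sketch below shows only the classical ABC loop of Karaboga and Basturk (2007) that the method builds on: employed bees perturb each food source, onlookers revisit sources with probability proportional to fitness, and scouts abandon sources that stop improving. The `decode` helper is a hypothetical illustration of how one continuous candidate vector could encode both a feature mask and the SVM parameters C and gamma. The 0.5 threshold and the log2 ranges (C in 2^-5..2^15, gamma in 2^-15..2^3, borrowed from common LIBSVM grid-search practice) are assumptions, not the paper's settings.

```python
import random


def abc_minimize(f, dim, lb, ub, colony_size=20, limit=50, max_cycles=200, seed=0):
    """Classical ABC minimizing f over [lb, ub]^dim; returns (best_x, best_f).

    Baseline algorithm only: the paper's improved initialization and
    onlooker-bee stage are NOT reproduced here.
    """
    rng = random.Random(seed)
    sn = colony_size // 2  # number of food sources = number of employed bees

    def random_source():
        return [rng.uniform(lb, ub) for _ in range(dim)]

    def fitness(fx):
        # Standard ABC transform of an objective value (lower is better).
        return 1.0 / (1.0 + fx) if fx >= 0 else 1.0 + abs(fx)

    foods = [random_source() for _ in range(sn)]
    costs = [f(x) for x in foods]
    trials = [0] * sn
    best_i = min(range(sn), key=lambda i: costs[i])
    best_x, best_f = foods[best_i][:], costs[best_i]

    def try_neighbor(i):
        # v_ij = x_ij + phi * (x_ij - x_kj) on one random dimension j, with k != i.
        k = rng.choice([m for m in range(sn) if m != i])
        j = rng.randrange(dim)
        v = foods[i][:]
        v[j] += rng.uniform(-1.0, 1.0) * (foods[i][j] - foods[k][j])
        v[j] = min(max(v[j], lb), ub)  # keep the candidate inside the bounds
        fv = f(v)
        if fitness(fv) > fitness(costs[i]):  # greedy selection
            foods[i], costs[i], trials[i] = v, fv, 0
        else:
            trials[i] += 1

    for _ in range(max_cycles):
        # Employed bee phase: every food source is perturbed once.
        for i in range(sn):
            try_neighbor(i)
        # Onlooker bee phase: roulette-wheel selection proportional to fitness.
        fits = [fitness(c) for c in costs]
        total = sum(fits)
        for _ in range(sn):
            r, acc = rng.uniform(0.0, total), 0.0
            for i, fit in enumerate(fits):
                acc += fit
                if acc >= r:
                    break
            try_neighbor(i)
        # Memorize the best source found so far.
        for i in range(sn):
            if costs[i] < best_f:
                best_x, best_f = foods[i][:], costs[i]
        # Scout bee phase: replace the most-exhausted source once it exceeds the limit.
        worst = max(range(sn), key=lambda i: trials[i])
        if trials[worst] > limit:
            foods[worst] = random_source()
            costs[worst] = f(foods[worst])
            trials[worst] = 0
    return best_x, best_f


def decode(x, n_features, threshold=0.5):
    """Hypothetical encoding of x in [0, 1]^(n_features + 2) into (mask, C, gamma)."""
    mask = [xi > threshold for xi in x[:n_features]]
    log2_c = -5.0 + 20.0 * x[n_features]        # C in [2^-5, 2^15]
    log2_g = -15.0 + 18.0 * x[n_features + 1]   # gamma in [2^-15, 2^3]
    return mask, 2.0 ** log2_c, 2.0 ** log2_g


best_x, best_f = abc_minimize(lambda x: sum(v * v for v in x), dim=5, lb=-5.0, ub=5.0)
print(best_f)
print(decode([0.9, 0.1, 0.6, 0.5, 0.5], 3))  # ([True, False, True], 32.0, 0.015625)
```

In a wrapper setting, `f` would decode each candidate, train an SVM on the selected features with the decoded C and gamma, and return something like one minus the cross-validated accuracy; that step is omitted here because it needs an SVM library.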

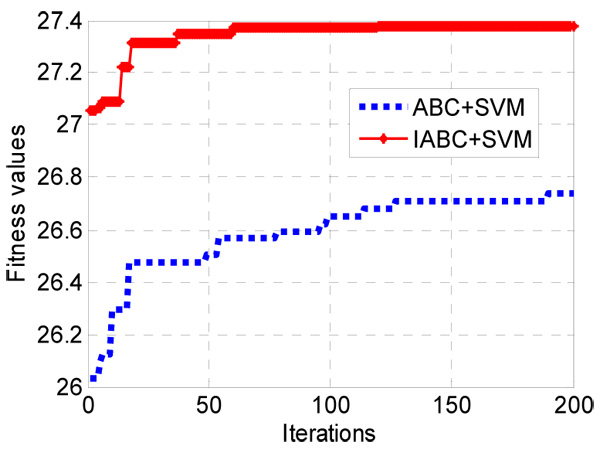

Fitness values of the two optimization methods for the abalone dataset

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.