Paper:

End-to-End Autonomous Mobile Robot Navigation with Model-Based System Support

Alexander Carballo*, Shunya Seiya**, Jacob Lambert**, Hatem Darweesh**, Patiphon Narksri*, Luis Yoichi Morales*, Naoki Akai*, Eijiro Takeuchi**, and Kazuya Takeda**

*Institutes of Innovation for Future Society, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8601, Japan

**Graduate School of Informatics, Nagoya University

Furo-cho, Chikusa-ku, Nagoya 464-8603, Japan

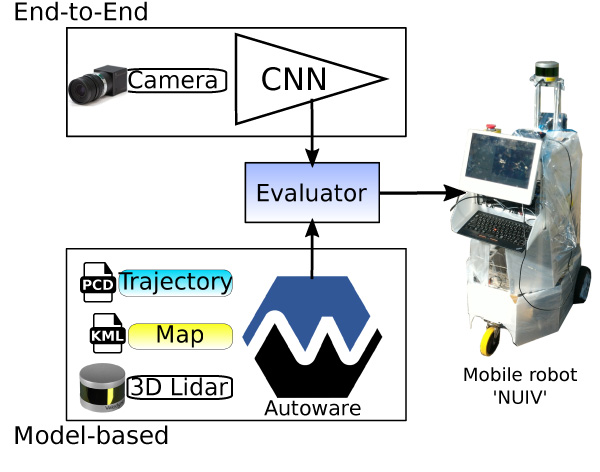

Autonomous mobile robot navigation in real, unmodified outdoor areas frequented by people going about their business, children playing, fast-moving bicycles, and even other robots remains a difficult challenge. For eleven years, the Tsukuba Challenge Real World Robot Challenge (RWRC) has brought together robots, researchers, companies, government, and ordinary citizens in the same outdoor space to push forward the limits of autonomous mobile robots. For our participation in the Tsukuba Challenge 2017, our team proposed to study the problem of sensors-to-actuators navigation (also called End-to-End), that is, having the robot navigate towards the destination on a complex path, not only moving straight but also turning at intersections. End-to-End (E2E) navigation was implemented using a convolutional neural network (CNN): the robot learns how to go straight, turn left, and turn right, using camera images and trajectory data. E2E network training and evaluation were performed at Nagoya University, under outdoor conditions similar to those of the Tsukuba Challenge 2017 (TC2017). Even though E2E was trained in a different environment and under different conditions, the robot successfully followed the designated trajectory in the TC2017 course. Learning how to follow the road regardless of the environment is one of the key attributes of E2E-based navigation. Our E2E does not perform obstacle avoidance and can be affected by illumination and seasonal changes. Therefore, to improve safety and add fault tolerance measures, we developed an E2E navigation approach with a model-based system as backup. The model-based system is based on our open source autonomous vehicle software, adapted for use on a mobile robot. In this work we describe our approach, implementation, experiences, and main contributions.

Our system for mobile robot navigation


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
