Paper:

Real-Time Monocular Three-Dimensional Motion Tracking Using a Multithread Active Vision System

Shaopeng Hu, Mingjun Jiang, Takeshi Takaki, and Idaku Ishii

Robotics Laboratory, Graduate School of Engineering, Hiroshima University

1-4-1 Kagamiyama, Higashi-hiroshima, Hiroshima 739-8527, Japan

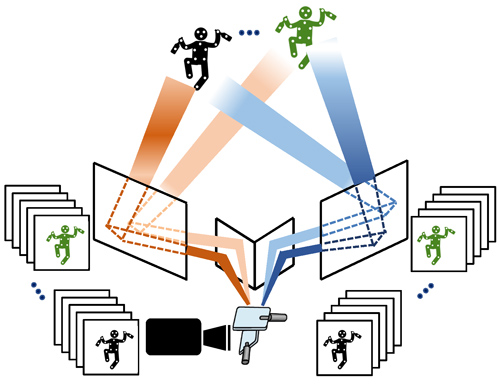

In this study, we developed a monocular stereo tracking system that can be used as a marker-based, three-dimensional (3-D) motion capture system. The system is designed to localize dozens of markers on multiple moving objects in real time by switching between five hundred different views per second. The ultrafast mirror-drive active vision used in our catadioptric stereo tracking system can complete a series of operations for multithread gaze control, including video shooting, computation, and actuation, within 2 ms. By switching between five hundred views per second with real-time video processing for marker extraction, our system can function as J pairs of virtual left and right pan-tilt tracking cameras, each pair operating at 250/J fps, to simultaneously capture and process J pairs of 512 × 512 stereo images with different views via the catadioptric mirror system. We conducted several real-time 3-D motion capture experiments on multiple fast-moving objects bearing markers. The results demonstrate the effectiveness of our monocular 3-D motion tracking system.
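The frame-rate figures in the abstract follow from simple time-division arithmetic: one view every 2 ms gives 500 views per second, a stereo measurement needs one left and one right view, so 250 stereo pairs per second are shared among J virtual camera pairs, giving 250/J fps each. The sketch below illustrates this under stated assumptions. The round-robin ordering (left and right views on consecutive frames, successive pairs cycling through the J threads) is our assumption for illustration; the abstract does not specify the actual switching order. The names `Slot`, `schedule`, and `per_pair_rate` are hypothetical.

```python
from dataclasses import dataclass

# Stated in the abstract: shooting, computation, and actuation for one view fit in 2 ms.
FRAME_PERIOD_MS = 2.0
VIEWS_PER_SECOND = 1000.0 / FRAME_PERIOD_MS  # 500 views per second


@dataclass(frozen=True)
class Slot:
    frame: int   # global frame index of the single high-speed camera
    thread: int  # hypothetical index of the virtual stereo pair (0..J-1)
    side: str    # "left" or "right" view through the catadioptric mirrors


def schedule(num_pairs: int, num_frames: int) -> list[Slot]:
    """Hypothetical round-robin gaze schedule (an assumption, not the paper's).

    Consecutive frames form one left/right stereo pair, and successive
    pairs are assigned to the J virtual camera pairs in turn.
    """
    if num_pairs < 1:
        raise ValueError("num_pairs must be >= 1")
    slots = []
    for k in range(num_frames):
        pair_index = k // 2  # two views (left, right) per stereo measurement
        slots.append(
            Slot(
                frame=k,
                thread=pair_index % num_pairs,
                side="left" if k % 2 == 0 else "right",
            )
        )
    return slots


def per_pair_rate(num_pairs: int) -> float:
    """Stereo frame rate seen by each virtual camera pair: 500 / 2 / J = 250 / J fps."""
    if num_pairs < 1:
        raise ValueError("num_pairs must be >= 1")
    return VIEWS_PER_SECOND / 2.0 / num_pairs


if __name__ == "__main__":
    for j in (1, 2, 5, 10):
        print(f"J = {j:2d}: {per_pair_rate(j):6.1f} fps per virtual stereo pair")
```

For example, with J = 5 virtual pairs, each pair receives 100 of the 500 views captured in one second, i.e., 50 stereo pairs per second. Increasing J trades per-pair temporal resolution for the number of simultaneously tracked views.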

Monocular 3-D motion tracking

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.