Paper:

Vision System for an Autonomous Underwater Vehicle with a Benthos Sampling Function

Shinsuke Yasukawa*,**, Jonghyun Ahn***, Yuya Nishida***, Takashi Sonoda***, Kazuo Ishii***, and Tamaki Ura***

*The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-8505, Japan

**Recreation Lab, Inc.

1-6-1 Otemachi, Chiyoda-ku, Tokyo 100-0004, Japan

***Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0196, Japan

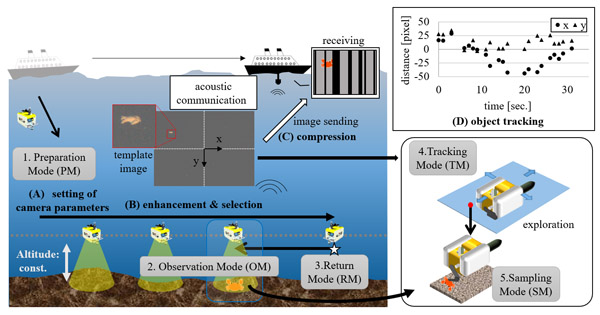

We developed a vision system for a benthos-sampling autonomous underwater vehicle (sampling-AUV). The sampling-AUV operates in five modes: preparation mode (PM), observation mode (OM), return mode (RM), tracking mode (TM), and sampling mode (SM). To accomplish the mission objective, the proposed vision system comprises software modules for image acquisition, image enhancement, object detection, image selection, and object tracking. The camera acquires images at 5 s intervals during OM and RM, and at 1 s intervals during TM. By employing high-speed algorithms, the system completes all processing stages within each image acquisition interval. We verified the effective operation of the proposed system in a pool.

Flow of sampling mission and image processing tasks for a sampling-AUV
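The timing requirements stated in the abstract can be made concrete with a minimal Python sketch. It encodes only what the abstract states: the five modes, 5 s capture intervals in OM and RM, 1 s in TM, and the requirement that processing finish within the acquisition interval. Everything else is a hypothetical assumption and not the authors' implementation. This includes the names `Mode`, `CAPTURE_INTERVAL_S`, `capture_times`, and `VisionPipeline`, the placeholder stage functions, and the choice to treat PM and SM as having no periodic capture.

```python
import time
from enum import Enum
from typing import Any, Callable, List, Optional, Tuple


class Mode(Enum):
    """The five operating modes of the sampling-AUV named in the abstract."""
    PM = "preparation"
    OM = "observation"
    RM = "return"
    TM = "tracking"
    SM = "sampling"


# Capture intervals (s) stated in the abstract: 5 s in OM and RM, 1 s in TM.
# ASSUMPTION: PM and SM are mapped to None (no periodic capture).
CAPTURE_INTERVAL_S = {
    Mode.PM: None,
    Mode.OM: 5.0,
    Mode.RM: 5.0,
    Mode.TM: 1.0,
    Mode.SM: None,
}


def capture_times(mode: Mode, duration_s: float) -> List[float]:
    """Capture timestamps (s) from t = 0 while the vehicle stays in `mode`."""
    interval = CAPTURE_INTERVAL_S[mode]
    if interval is None:
        return []
    count = int(duration_s // interval) + 1  # +1 includes the frame at t = 0
    return [i * interval for i in range(count)]


# HYPOTHETICAL placeholder stages; the paper's actual algorithms are not shown.
def enhance_image(frame: Any) -> Any:
    return frame


def detect_objects(frame: Any) -> Any:
    return frame


def select_images(frame: Any) -> Any:
    return frame


def track_objects(frame: Any) -> Any:
    return frame


Stage = Callable[[Any], Any]


class VisionPipeline:
    """Runs post-acquisition stages in order and checks the per-frame deadline."""

    def __init__(self, stages: List[Stage]):
        self.stages = stages

    def process(self, frame: Any, mode: Mode) -> Tuple[Any, float, bool]:
        """Return (result, elapsed seconds, whether it finished within the interval)."""
        interval: Optional[float] = CAPTURE_INTERVAL_S[mode]
        start = time.perf_counter()
        data = frame
        for stage in self.stages:
            data = stage(data)
        elapsed = time.perf_counter() - start
        within_budget = interval is None or elapsed <= interval
        return data, elapsed, within_budget


if __name__ == "__main__":
    print(capture_times(Mode.OM, 12.0))  # [0.0, 5.0, 10.0]
    pipeline = VisionPipeline([enhance_image, detect_objects, select_images, track_objects])
    _, elapsed, ok = pipeline.process("frame-0", Mode.TM)
    print(f"TM frame processed in {elapsed:.6f} s, within 1 s budget: {ok}")
```

The placeholder stages return immediately, so the deadline check passes trivially here. With real enhancement and detection code, `process` is where one would confirm that each frame finishes before the next capture: 5 s later in OM and RM, or 1 s later in TM.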

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.