Paper:

Image Mosaicing Using Multi-Modal Images for Generation of Tomato Growth State Map

Takuya Fujinaga, Shinsuke Yasukawa, Binghe Li, and Kazuo Ishii

Kyushu Institute of Technology

2-4 Hibikino, Wakamatsu-ku, Fukuoka 808-0196, Japan

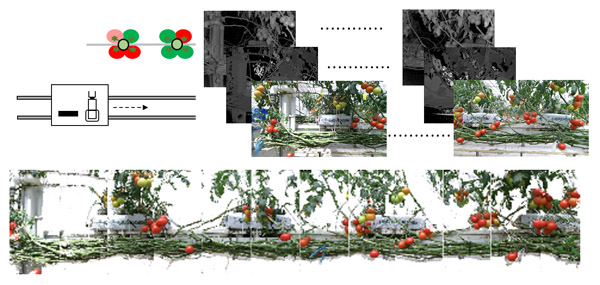

Due to the aging and declining number of agricultural workers, automation and precision farming need to be introduced. Focusing on tomatoes, one of the major vegetables, we are engaged in the research and development of a robot that can harvest tomatoes and manage their growth state. For the robot to harvest tomatoes automatically, it must be able to detect the positions of harvestable tomatoes and plan its harvesting motions. Furthermore, so that the robot and workers can manage the crop, it is necessary to know the positions and maturity of the tomatoes in the greenhouse and to estimate their yield and harvesting period. The purpose of this study is to generate a tomato growth state map of a cultivation lane, which consists of a row of tomatoes, with the aim of achieving automatic harvesting and management of tomatoes in a greenhouse equipped with production facilities. Information such as the positions and maturity of the tomatoes is attached to the map. As a first stage, this paper proposes a method of generating a greenhouse map (a wide-area mosaic image of a tomato cultivation lane). Using infrared images eases the problem of finding corresponding feature points when the mosaic image is generated. Distance information is used to eliminate the cultivation lane behind the targeted one, as well as the background scenery, so that the robot can focus only on the tomatoes in the targeted cultivation lane. To verify the validity of the proposed method, 70 images captured in a greenhouse were used to generate a single mosaic image, from which tomatoes were detected by visual inspection.
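The two ingredients named in the abstract, depth-based removal of the rear lane and background and stitching of consecutive images into one mosaic, can be illustrated with a minimal sketch. It assumes that feature matches between consecutive frames are already available (for example, from the infrared images), that consecutive frames differ only by a translation because the robot travels along a rail, and that a single distance threshold separates the targeted lane from everything behind it. The functions `mask_by_depth`, `estimate_offset`, and `build_mosaic` are hypothetical names for illustration, not the authors' implementation; a full system would more likely estimate a homography per image pair.

```python
from statistics import median
from typing import List, Optional, Sequence, Tuple

Pixel = Optional[int]          # None marks a pixel removed by the depth mask
Image = List[List[Pixel]]
Point = Tuple[float, float]    # (x, y) = (column, row)
Match = Tuple[Point, Point]    # (point in frame k, same point in frame k+1)


def mask_by_depth(image: Image, depth: List[List[float]], max_depth: float) -> Image:
    """Blank out pixels farther than max_depth (assumed: the rear lane and background).

    A depth of 0 is treated as 'no measurement' and is also blanked."""
    return [
        [px if 0 < d <= max_depth else None for px, d in zip(img_row, depth_row)]
        for img_row, depth_row in zip(image, depth)
    ]


def estimate_offset(matches: Sequence[Match]) -> Tuple[int, int]:
    """Offset of frame k+1 relative to frame k, assuming pure translation.

    A point seen at p in frame k and at q in frame k+1 satisfies
    p + offset_k = q + offset_(k+1), so offset_(k+1) - offset_k = p - q.
    Taking the median over all matches tolerates a few wrong matches."""
    dx = median(p[0] - q[0] for p, q in matches)
    dy = median(p[1] - q[1] for p, q in matches)
    return round(dx), round(dy)


def build_mosaic(frames: Sequence[Image], pair_matches: Sequence[Sequence[Match]]) -> Image:
    """Paste frames onto one canvas using accumulated pairwise offsets."""
    offsets = [(0, 0)]
    for matches in pair_matches:
        dx, dy = estimate_offset(matches)
        ox, oy = offsets[-1]
        offsets.append((ox + dx, oy + dy))

    # Shift all offsets so the canvas starts at (0, 0).
    min_x = min(x for x, _ in offsets)
    min_y = min(y for _, y in offsets)
    width = max(x + len(f[0]) for (x, _), f in zip(offsets, frames)) - min_x
    height = max(y + len(f) for (_, y), f in zip(offsets, frames)) - min_y

    canvas: Image = [[None] * width for _ in range(height)]
    for (ox, oy), frame in zip(offsets, frames):
        for r, row in enumerate(frame):
            for c, px in enumerate(row):
                if px is not None:  # keep only pixels that survived the depth mask
                    canvas[oy - min_y + r][ox - min_x + c] = px
    return canvas
```

For example, two 2 × 3 frames whose matches show that the second frame starts two columns to the right of the first produce a 2 × 5 mosaic. The median is used here as a simple robust estimator; with a homography model, RANSAC would play the same role of rejecting bad correspondences.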

The tomato harvesting robot moving on the rail.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.