Paper:

Supporting Reaching Movements of Robotic Hands Subject to Communication Delay by Displaying End Effector Position Using Three Orthogonal Rays

Akihito Chinen and Kiyoshi Hoshino

University of Tsukuba

1-1-1 Tennodai, Tsukuba 305-8573, Japan

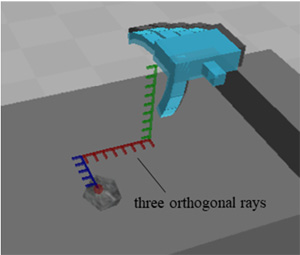

In this paper, we propose an operation support system that displays the spatial relation between a robotic hand and an object, to counter the loss of usability caused by communication delay. Remote operation between the Earth and the Moon involves a communication delay of four seconds. Previous studies have addressed this delay by feeding back the predicted position of the robotic hand after the delay has elapsed. However, this approach does not support the accurate reaching motion that grasping requires. We therefore display the spatial relation between the robotic hand and the object using three orthogonal rays, each marked at 1 cm intervals. In our experiments, the proposed method tripled the grasping success rate. These results indicate that the proposed method is effective for making accurate reaching motions for grasping in visual-servoing remote control between the Earth and the Moon.
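The abstract describes the ray display only at a high level. The following Python sketch is a rough illustration, not the authors' implementation. It computes where 1 cm tick marks would sit along three orthogonal rays drawn from the end effector. It also computes the signed offset of a target object counted in those ticks, which is the quantity an operator would read off the display. Several details are assumptions made for illustration: the ray directions (the x, y, and z axes of the robot base frame), the 10 cm ray length, positions given in metres, and the hypothetical function names `ray_ticks` and `offset_in_ticks`. Projecting the ticks into the camera image is omitted.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

TICK_SPACING_M = 0.01  # 1 cm increments, as stated in the abstract

# Assumption: each ray starts at the end effector and points along a positive
# axis of the robot base frame (unit vectors x, y, z).
AXES: Tuple[Vec3, Vec3, Vec3] = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def ray_ticks(effector: Vec3, ray_length_m: float = 0.10) -> List[List[Vec3]]:
    """Return, for each of the three rays, the 3D positions of its tick marks.

    Tick k (k = 1, 2, ...) lies k centimetres from the end effector along the ray.
    The 10 cm default length is a hypothetical choice.
    """
    n_ticks = int(round(ray_length_m / TICK_SPACING_M))
    ticks: List[List[Vec3]] = []
    for axis in AXES:
        ray: List[Vec3] = []
        for k in range(1, n_ticks + 1):
            d = k * TICK_SPACING_M  # distance of this tick from the end effector
            ray.append(tuple(e + d * a for e, a in zip(effector, axis)))
        ticks.append(ray)
    return ticks


def offset_in_ticks(effector: Vec3, target: Vec3) -> Tuple[int, int, int]:
    """Signed offset of the target along each ray axis, rounded to whole ticks (cm).

    A negative value means the target lies behind the ray's starting point on
    that axis, so it would not be covered by a one-sided ray.
    """
    rel = [t - e for t, e in zip(target, effector)]
    return tuple(
        round(sum(r * a for r, a in zip(rel, axis)) / TICK_SPACING_M) for axis in AXES
    )
```

For example, with the end effector at (0.10, 0.20, 0.30) m and an object at (0.13, 0.18, 0.35) m, `offset_in_ticks` returns `(3, -2, 5)`. In other words, the object is 3 cm along x, 2 cm back along y, and 5 cm along z from the hand. Reading such per-axis counts is the kind of depth cue that a single predicted-position overlay does not give directly.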

Supporting robotic movements subject to communication delay


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.