Paper:

Automating the Appending of Image Information to Grid Map Corresponding to Object Shape

Tomohito Takubo, Hironobu Takaishi, and Atsushi Ueno

Graduate School of Engineering, Osaka City University

3-3-138 Sugimoto, Sumiyoshi-ku, Osaka 558-8585, Japan

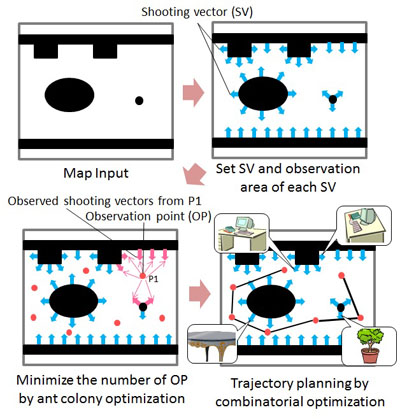

This paper proposes a technique for automating the construction of the Image-Information-Added Map, a mapping method in which an object is photographed at a required resolution. The picture shooting vector, which indicates the angle from which a picture with sufficient resolution can be taken, is defined according to the shape of the object surface. In our previous study, the operator controlled a robot remotely and acquired pictures while checking the picture shooting vector. For an automated inspection system, however, image acquisition should also be automated. Assuming that a 2-D grid map has been prepared, the shooting vectors are first set on the surface of the object in the map, and the picture shooting areas are defined. To reduce the number of points to which the mobile robot must move to take pictures, overlapping picture shooting areas should be selected. Because selecting the points where pictures are taken is a set covering problem, the ant colony optimization method is used to solve it. Edge Exchange Crossover (EXX) is used to select connected picture-taking points so that checking is efficient. The proposed method is implemented on a robot and evaluated in an experimental environment according to the resolution of the collected images.

Trajectory planning for automating the appending of image information
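The abstract frames the choice of picture-taking points as a set covering problem solved with ant colony optimization. The sketch below illustrates that general idea only; it is not the authors' implementation. The function name `aco_set_cover`, its parameters (`n_ants`, `n_iters`, `rho`), the pheromone update rule, and the toy data are all assumptions made for illustration. Each candidate point is modeled as the set of shooting-vector ids it can photograph, and each ant builds a cover by sampling candidates with probability proportional to pheromone times the number of still-uncovered vectors.

```python
import random


def aco_set_cover(universe, subsets, n_ants=10, n_iters=30, rho=0.1, seed=0):
    """Return a sorted list of subset ids whose union equals `universe`.

    universe: set of element ids (here: shooting vectors to photograph)
    subsets:  dict mapping candidate id -> set of element ids it covers
    All parameter names and defaults are illustrative assumptions.
    """
    universe = set(universe)
    if not universe <= set().union(*subsets.values()):
        raise ValueError("the candidates cannot cover the whole universe")

    rng = random.Random(seed)              # fixed seed keeps runs repeatable
    pheromone = {k: 1.0 for k in subsets}  # one pheromone value per candidate
    best = None

    for _ in range(n_iters):
        for _ in range(n_ants):
            uncovered = set(universe)
            chosen = []
            while uncovered:
                # Only candidates that still cover something new are eligible.
                cands = [k for k in subsets if subsets[k] & uncovered]
                weights = [pheromone[k] * len(subsets[k] & uncovered)
                           for k in cands]
                k = rng.choices(cands, weights=weights)[0]
                chosen.append(k)
                uncovered -= subsets[k]
            if best is None or len(chosen) < len(best):
                best = chosen
        # Evaporate all trails, then reinforce the best cover found so far.
        for k in pheromone:
            pheromone[k] *= 1.0 - rho
        for k in best:
            pheromone[k] += 1.0 / len(best)

    return sorted(best)


# Toy data (hypothetical): six shooting vectors, five candidate robot positions.
vectors = {1, 2, 3, 4, 5, 6}
positions = {
    "A": {1, 2, 3},
    "B": {4, 5, 6},
    "C": {1, 4},
    "D": {2, 5},
    "E": {3, 6},
}
print(aco_set_cover(vectors, positions))
```

The returned list is always a valid cover, but as with any stochastic heuristic it is not guaranteed to be the smallest one. Ordering the chosen points into a route is a separate step and is not shown here.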


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.