Paper:

Ego-Noise Suppression for Robots Based on Semi-Blind Infinite Non-Negative Matrix Factorization

Kazuhiro Nakadai*,**, Taiki Tezuka*, and Takami Yoshida*

*Graduate School of Information Science and Engineering, Tokyo Institute of Technology

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8552, Japan

**Honda Research Institute Japan Co., Ltd.

8-1 Honcho, Wako-shi, Saitama 351-0188, Japan

* This work is an extension of our publication “Taiki Tezuka, Takami Yoshida, Kazuhiro Nakadai: Ego-motion noise suppression for robots based on Semi-Blind Infinite Non-negative Matrix Factorization, ICRA 2014, pp.6293-6298, 2014.”

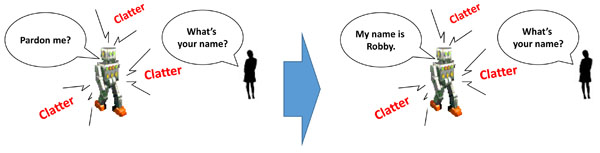

Ego-noise suppression achieves speech recognition even during motion

- [1] K. Nakadai, T. Lourens, H. G. Okuno, and H. Kitano, “Active audition for humanoid,” Proc. of 17th National Conf. on Artificial Intelligence (AAAI-2000), pp. 832-839, 2000.

- [2] K. Nakadai, D. Matsuura, H. G. Okuno, and H. Tsujino, “Improvement of recognition of simultaneous speech signals using AV integration and scattering theory for humanoid robots,” Speech Communication, Vol.44, pp. 97-112, 2004.

- [3] Y. Nishimura, M. Ishizuka, K. Nakadai, M. Nakano, and H. Tsujino, “Speech recognition for a humanoid with motor noise utilizing missing feature theory,” Proc. of 6th IEEE-RAS Int. Conf. on Humanoid Robots (Humanoids 2006), pp. 26-33, 2006.

- [4] T. Rodemann, M. Heckmann, F. Joublin, C. Goerick, and B. Schölling, “Real-time sound localization with a binaural head-system using a biologically-inspired cue-triple mapping,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2006), pp. 860-865, 2006.

- [5] J. Hornstein, M. Lopes, J. Santos-Victor, and F. Lacerda, “Sound localization for humanoid robots – building audio-motor maps based on the HRTF,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2006), pp. 1171-1176, 2006.

- [6] T. Shimoda, T. Nakashima, M. Kumon, R. Kohzawa, I. Mizumoto, and Z. Iwai, “Spectral cues for robust sound localization with pinnae,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2006), pp. 386-391, 2006.

- [7] A. Portello, P. Danes, and S. Argentieri, “Active binaural localization of intermittent moving sources in the presence of false measurements,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2012), pp. 3294-3299, 2012.

- [8] J.-M. Valin, F. Michaud, B. Hadjou, and J. Rouat, “Localization of simultaneous moving sound sources for mobile robot using a frequency-domain steered beamformer approach,” Proc. of IEEE Int. Conf. on Robotics and Automation (ICRA 2004), 2004.

- [9] Y. Sasaki, M. Kabasawa, S. Thompson, S. Kagami, and K. Oro, “Spherical microphone array for spatial sound localization for a mobile robot,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2012), pp. 713-718, 2012.

- [10] S. Yamamoto, K. Nakadai, M. Nakano, H. Tsujino, J.-M. Valin, K. Komatani, T. Ogata, and H. G. Okuno, “Design and implementation of a robot audition system for automatic speech recognition of simultaneous speech,” Proc. of the 2007 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU-2007), pp. 111-116, Dec. 2007.

- [11] H. Saruwatari, Y. Mori, T. Takatani, S. Ukai, K. Shikano, T. Hiekata, and T. Morita, “Two-stage blind source separation based on ICA and binary masking for real-time robot audition system,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2005), pp. 209-214, 2005.

- [12] T. Yoshida and K. Nakadai, “Active audio-visual integration for voice activity detection based on a causal Bayesian network,” Proc. of the 2012 IEEE RAS Int. Conf. on Humanoid Robots (Humanoids 2012), pp. 370-375, 2012.

- [13] K. Nakadai, H. Nakajima, K. Yamada, Y. Hasegawa, T. Nakamura, and H. Tsujino, “Sound source tracking with directivity pattern estimation using a 64ch microphone array,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS-2005), pp. 196-202, Aug. 2005.

- [14] F. Perrodin, J. Nikolic, J. Busset, and R. Y. Siegwart, “Design and calibration of large microphone arrays for robotic applications,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2012), pp. 4596-4601, 2012.

- [15] J. Even, C. Ishi, P. Heracleous, T. Miyashita, and N. Hagita, “Combining laser range finders and local steered response power for audio monitoring,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2012), pp. 986-991, 2012.

- [16] J. Even, H. Sawada, H. Saruwatari, K. Shikano, and T. Takatani, “Semi-blind suppression of internal noise for hands-free robot spoken dialog system,” Proc. of IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS 2009), pp. 658-663, 2009.

- [17] A. Ito, T. Kanayama, M. Suzuki, and S. Makino, “Internal noise suppression for speech recognition by small robots,” Proc. of European Conf. on Speech Communication and Technology (Eurospeech-2005), pp. 2685-2688, 2005.

- [18] S. F. Boll, “Suppression of acoustic noise in speech using spectral subtraction,” IEEE Trans. on Acoustics, Speech, and Signal Processing, Vol.27, No.2, pp. 113-120, 1979.

- [19] B. Raj, M. L. Seltzer, and R. M. Stern, “Reconstruction of missing features for robust speech recognition,” Speech Communication, Vol.43, No.4, pp. 275-296, 2004.

- [20] G. Ince, K. Nakamura, F. Asano, H. Nakajima, and K. Nakadai, “Assessment of general applicability of ego noise estimation,” Proc. of IEEE Int. Conf. on Robotics and Automation (ICRA 2011), pp. 3517-3522, 2011.

- [21] J. L. Oliveira, G. Ince, K. Nakamura, K. Nakadai, H. G. Okuno, F. Gouyon, and L. P. Reis, “Beat tracking for interactive dancing robots,” Int. J. of Humanoid Robotics, Vol.12, No.4, 2015.

- [22] A. Deleforge and W. Kellermann, “Phase-optimized K-SVD for signal extraction from underdetermined multichannel sparse mixtures,” Proc. of the 2015 IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP), pp. 355-359, 2015.

- [23] T. Tezuka, T. Yoshida, and K. Nakadai, “Ego-motion noise suppression for robots based on semi-blind infinite non-negative matrix factorization,” Proc. of IEEE Int. Conf. on Robotics and Automation (ICRA 2014), pp. 6293-6298, 2014.

- [24] L. C. Parra and C. V. Alvino, “Geometric source separation: Merging convolutive source separation with geometric beamforming,” IEEE Trans. on Speech and Audio Processing, Vol.10, No.6, pp. 352-362, 2002.

- [25] T. Virtanen, “Monaural sound source separation by nonnegative matrix factorization with temporal continuity and sparseness criteria,” IEEE Trans. on Audio, Speech, and Language Processing, Vol.15, No.3, pp. 1066-1074, 2007.

- [26] S. A. Abdallah and M. D. Plumbley, “Polyphonic music transcription by non-negative sparse coding of power spectra,” Proc. of the 5th Int. Conf. on Music Information Retrieval (ISMIR 2004), pp. 10-14, 2004.

- [27] T. L. Griffiths and Z. Ghahramani, “The Indian buffet process: An introduction and review,” J. of Machine Learning Research, Vol.12, pp. 1185-1224, 2011.

- [28] M. N. Schmidt and M. Mørup, “Infinite non-negative matrix factorization,” European Signal Processing Conf. (EUSIPCO), 2010.

- [29] M. D. Hoffman, D. M. Blei, and P. R. Cook, “Bayesian nonparametric matrix factorization for recorded music,” Proc. of the 27th Int. Conf. on Machine Learning (ICML2010), pp. 439-446, 2010.

- [30] G. Ince, K. Nakadai, T. Rodemann, H. Tsujino, and J. Imura, “Whole body motion noise cancellation of a robot for improved automatic speech recognition,” Advanced Robotics, Vol.25, No.11-12, pp. 1405-1426, 2011.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.