Paper:

Real-Time Image Mosaicing System Using a High-Frame-Rate Video Sequence

Qingyi Gu, Sushil Raut, Ken-ichi Okumura, Tadayoshi Aoyama, Takeshi Takaki, and Idaku Ishii

Department of System Cybernetics, Hiroshima University

1-4-1 Kagamiyama, Higashi-Hiroshima, Hiroshima 739-8527, Japan

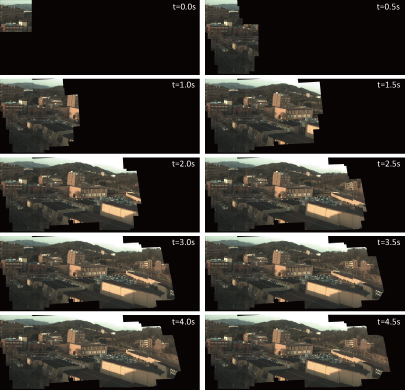

Synthesized panoramic images


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.