Research Paper:

A Multi-Scale Feature Fusion-Based Model for High-Accuracy and Efficient Vehicle Detection in Complex Scenarios

Ying Gao

School of Smart Manufacturing, Zhengzhou University of Economics and Business

No.2 Shuanghu Avenue, Yiju Education Park, Nanlonghu, Zhengzhou, Henan 451191, China

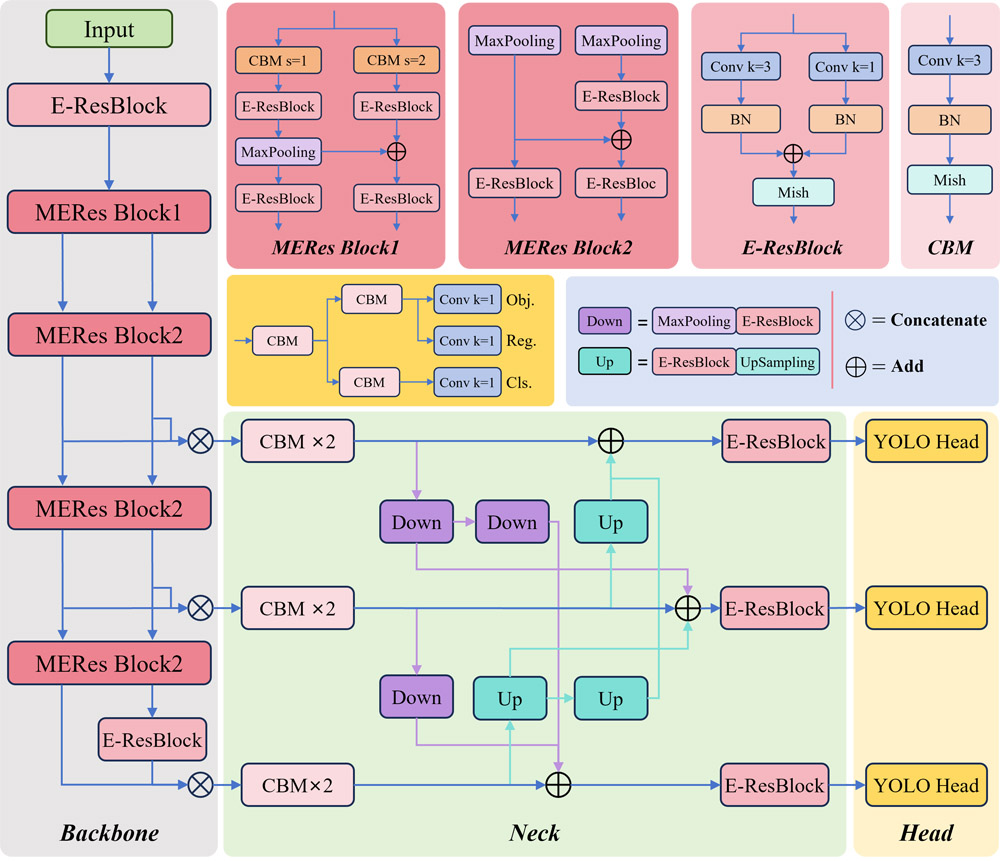

Vehicle detection is essential in smart cities and intelligent transportation systems. However, current models struggle to balance detection accuracy against model complexity, which limits their deployment on low-performance edge devices, especially in complex scenarios with multi-scale objects and environmental variations. To overcome these issues, YOLO-MER, a novel lightweight multi-scale feature fusion network for vehicle detection, is proposed. First, a multi-scale feature fusion convolutional architecture, the MERes Block, is developed from an enhanced ResBlock and used to reconstruct the YOLO backbone, enabling feature extraction and fusion across four scales. Additionally, a lightweight neck architecture is designed to integrate features across three dimensions, ensuring comprehensive use of multi-scale information. The proposed YOLO-MER is validated on two public datasets. On the Vehicle dataset, it achieved the highest detection accuracy (79.05% mAP), the smallest model size (6.94 MB), and the fewest parameters (1.59M). On the Crack dataset, it achieved the best detection accuracy (86.11% mAP).
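As a rough sanity check on the reported figures, the parameter count can be converted into an approximate weight-storage size. The sketch below is illustrative only: the abstract does not state the numeric precision or the file format of the saved model, so the bytes-per-parameter values and the helper `estimated_weight_size_mb` are assumptions introduced here, not part of the paper.

```python
def estimated_weight_size_mb(num_params: float, bytes_per_param: int = 4) -> float:
    """Raw weight storage in megabytes (1 MB = 10**6 bytes), ignoring file overhead."""
    return num_params * bytes_per_param / 1e6


params = 1.59e6  # parameter count reported in the abstract

# Assumption: weights stored as 32-bit floats (4 bytes each).
fp32_mb = estimated_weight_size_mb(params)
# Assumption: weights stored as 16-bit floats (2 bytes each).
fp16_mb = estimated_weight_size_mb(params, bytes_per_param=2)

print(f"FP32 weights: {fp32_mb:.2f} MB, FP16 weights: {fp16_mb:.2f} MB")
```

Under the 32-bit assumption the raw weights come to about 6.36 MB, which is of the same order as the reported 6.94 MB model size. A saved model file typically holds more than raw weights, but the abstract does not say what accounts for the difference.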

YOLO-MER multi-scale fusion network

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
