Research Paper:

Generating Natural Language Sentences Explaining Trends and Relationships of Two Time-Series Data

Yukako Nakano and Ichiro Kobayashi

Graduate School of Humanities and Sciences, Ochanomizu University

2-1-1 Otsuka, Bunkyo-ku, Tokyo 112-8610, Japan

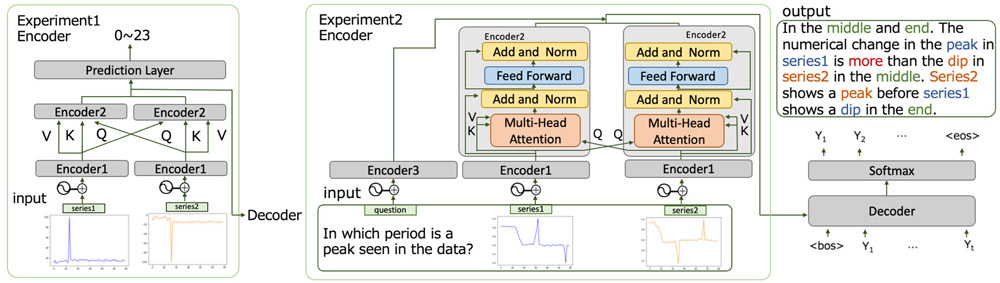

We propose a method for generating natural language explanations that describe the trends of, and the relationships between, two time series. This task requires analyzing the dynamic behavior of both series and generating textual explanations from the results of that analysis. We developed a model that extends the vanilla Transformer architecture to better capture the temporal features relevant to explanation generation. To train the model, we constructed a synthetic, domain-agnostic dataset that simulates time-series patterns and their interactions. We conducted two experiments on the synthesized datasets to evaluate the proposed approach. The first experiment focused on generating explanations of time-series trends, and the results showed that our model generates accurate and coherent explanations. The second experiment addressed a more complex scenario in which the model had to answer questions about the relationship between two interacting time series. Although the model initially struggled to achieve high accuracy on this task, step-by-step training significantly improved its performance. These findings highlight both the potential and the current limitations of Transformer-based approaches to interpretable time-series analysis.

Proposed model architecture

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.