Research Paper:

Reducing Bandwidth Usage in CVSLAM: A Novel Approach to Map Point Selection and Efficient Data Compression

Weiqiang Zhang, Lan Cheng†, Xinying Xu, and Zhimin Hu

College of Electrical and Power Engineering, Taiyuan University of Technology

No.79 West Street Yingze, Taiyuan, Shanxi 030024, China

†Corresponding author

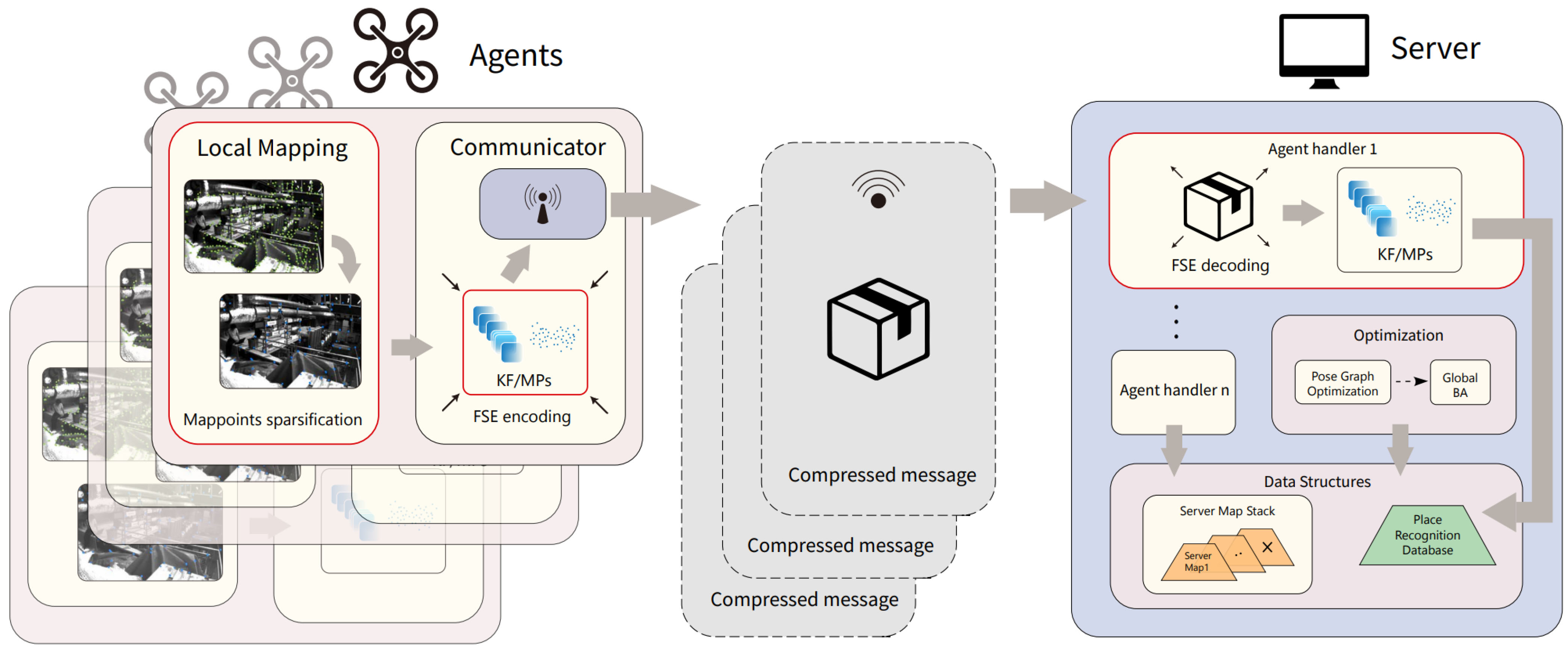

In collaborative visual simultaneous localization and mapping (CVSLAM), efficient data communication is a significant challenge, particularly in environments with limited bandwidth. To address this issue, we introduce a method for reducing communication overhead. Our approach starts by culling map points so as to maximize pose-visibility and spatial diversity, which eliminates redundant data in CVSLAM. We formulate this selection as a minimum-cost maximum-flow graph optimization problem. We then apply finite state entropy encoding to compress the visual information, further easing bandwidth constraints. To verify the proposed method, we implement it within a centralized collaborative monocular simultaneous localization and mapping (SLAM) system. The approach has been tested on publicly available datasets and in a real-world scene. The results show a 49% reduction in bandwidth usage while maintaining mapping accuracy and without introducing additional latency, confirming its effectiveness in a multi-agent setting.
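To make the minimum-cost maximum-flow idea concrete, the following is a minimal sketch and not the authors' formulation. It assumes a toy graph in which a source feeds map-point nodes, each map point connects to the keyframes that observe it, and each keyframe drains to a sink with a capacity equal to the number of points it should keep. The per-point cost (cheaper for points seen by more keyframes), the names `MinCostFlow` and `select_points`, and the visibility data are all hypothetical; spatial diversity is not modeled here.

```python
from collections import deque


class MinCostFlow:
    """Successive shortest paths with a queue-based Bellman-Ford search."""

    def __init__(self, n):
        self.n = n
        # Each edge is a mutable list: [to, residual_capacity, cost, reverse_index]
        self.graph = [[] for _ in range(n)]

    def add_edge(self, u, v, cap, cost):
        self.graph[u].append([v, cap, cost, len(self.graph[v])])
        self.graph[v].append([u, 0, -cost, len(self.graph[u]) - 1])

    def solve(self, s, t):
        flow = cost = 0
        while True:
            dist = [float("inf")] * self.n
            prev = [None] * self.n  # (parent node, edge index) on the shortest path
            in_queue = [False] * self.n
            dist[s] = 0
            queue = deque([s])
            while queue:
                u = queue.popleft()
                in_queue[u] = False
                for i, (v, cap, c, _) in enumerate(self.graph[u]):
                    if cap > 0 and dist[u] + c < dist[v]:
                        dist[v] = dist[u] + c
                        prev[v] = (u, i)
                        if not in_queue[v]:
                            queue.append(v)
                            in_queue[v] = True
            if prev[t] is None:  # no augmenting path left: flow is maximal
                return flow, cost
            # Find the bottleneck capacity along the path, then push flow.
            push, v = float("inf"), t
            while v != s:
                u, i = prev[v]
                push = min(push, self.graph[u][i][1])
                v = u
            v = t
            while v != s:
                u, i = prev[v]
                edge = self.graph[u][i]
                edge[1] -= push
                self.graph[v][edge[3]][1] += push
                v = u
            flow += push
            cost += push * dist[t]


def select_points(visibility, num_keyframes, per_frame):
    """visibility[p] lists the keyframes observing point p (toy input)."""
    num_points = len(visibility)
    s, t = 0, num_points + num_keyframes + 1
    mcf = MinCostFlow(t + 1)
    max_obs = max(len(frames) for frames in visibility)
    source_edges = []
    for p, frames in enumerate(visibility):
        # Assumed cost: points observed by more keyframes are cheaper to keep.
        mcf.add_edge(s, 1 + p, len(frames), 1 + max_obs - len(frames))
        source_edges.append(mcf.graph[s][-1])
        for k in frames:
            mcf.add_edge(1 + p, 1 + num_points + k, 1, 0)
    for k in range(num_keyframes):
        mcf.add_edge(1 + num_points + k, t, per_frame, 0)
    flow, cost = mcf.solve(s, t)
    # A point is kept if any flow left the source through it.
    selected = [p for p, e in enumerate(source_edges) if e[1] < len(visibility[p])]
    return selected, flow, cost


# Four map points, three keyframes, two points required per keyframe.
visibility = [[0, 1, 2], [0, 1], [2], [1]]
print(select_points(visibility, num_keyframes=3, per_frame=2))
```

In this toy instance, point 3 is culled because keyframe 1 is already covered by the two more widely observed points. A production system would more likely rely on an existing solver than on a hand-written one.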

Schematic illustration


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
