Research Paper:

Research on Depression Recognition Based on University Students’ Facial Expressions and Actions with the Assistance of Artificial Intelligence

Xiaohong Cheng†

Students’ Affairs Office, Henan Institute of Technology

Xinxiang, Henan 453003, China

†Corresponding author

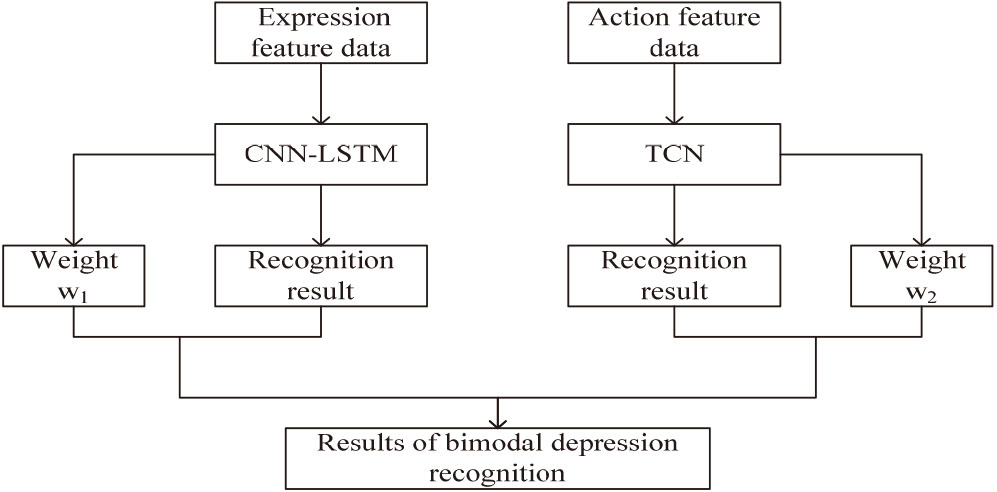

As artificial intelligence (AI) technology has matured, its applications in psychology have expanded considerably. In this paper, AI methods were used to recognize depression in 160 university students: 80 with depression and 80 with normal psychological status. Facial expression features were extracted with OpenFace, and action (body movement) features were extracted from Kinect camera recordings. A convolutional neural network-long short-term memory (CNN-LSTM) model and a temporal convolutional network (TCN) were then designed for recognition, and a weighted fusion recognition method was proposed to combine them. Compared with the support vector machine, back-propagation neural network, and other approaches, the CNN-LSTM and TCN methods performed better on single-feature data, reaching accuracies of 0.781 and 0.769, respectively. After weighted fusion, accuracy rose to 0.875, the highest of all methods tested. These results indicate that the proposed methods are effective in identifying depressive emotions from university students’ facial expressions and actions and have potential for practical application.
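The abstract does not state how the expression and action recognizers are combined. One common option is decision-level weighted fusion, sketched below under stated assumptions: each single-modality model is assumed to output a per-subject probability of depression, the weights are hypothetically set to the single-modality accuracies (0.781 and 0.769), and the four-subject toy data are invented for illustration, not taken from the study. The function names `weighted_fusion`, `predict`, and `accuracy` are likewise hypothetical.

```python
from typing import List, Sequence

# Hypothetical sketch of decision-level weighted fusion. It assumes the
# expression model (e.g. CNN-LSTM) and the action model (e.g. TCN) each
# output a probability of depression per subject; the weighting rule is
# an assumption, not necessarily the one used in the paper.


def weighted_fusion(p_expr: Sequence[float], p_act: Sequence[float],
                    w_expr: float, w_act: float) -> List[float]:
    """Return the fused depression probability for each subject."""
    if len(p_expr) != len(p_act):
        raise ValueError("both models must score the same subjects")
    total = w_expr + w_act
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize the weights so the fused score stays in [0, 1].
    a, b = w_expr / total, w_act / total
    return [a * pe + b * pa for pe, pa in zip(p_expr, p_act)]


def predict(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Label 1 (depressed) when the probability reaches the threshold."""
    return [1 if p >= threshold else 0 for p in probs]


def accuracy(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Fraction of subjects whose predicted label matches the true label."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


# Invented toy scores for four subjects (1 = depressed, 0 = not).
truth = [1, 1, 0, 0]
p_expr = [0.8, 0.45, 0.3, 0.2]   # expression model misses subject 2
p_act = [0.7, 0.75, 0.55, 0.3]   # action model flags subject 3 wrongly

fused = weighted_fusion(p_expr, p_act, w_expr=0.781, w_act=0.769)
acc_expr = accuracy(predict(p_expr), truth)   # 0.75
acc_act = accuracy(predict(p_act), truth)     # 0.75
acc_fused = accuracy(predict(fused), truth)   # 1.0
print(acc_expr, acc_act, acc_fused)
```

In this toy case each modality makes a different single error, and averaging the two probability streams corrects both. Real gains depend on how complementary the two modalities' errors are, and a learned or validated weighting may differ from the accuracy-based heuristic assumed here.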

Recognition based on the weighted fusion of expression and action

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.