Research Paper:

Research on Multi-Modal Music Score Alignment Model for Online Music Education

Dexin Ren

Art College, Zhengzhou Railway Vocational & Technical College

298 Tonghui Road, Zhengdong New District, Zhengzhou City, Henan Province 450000, China

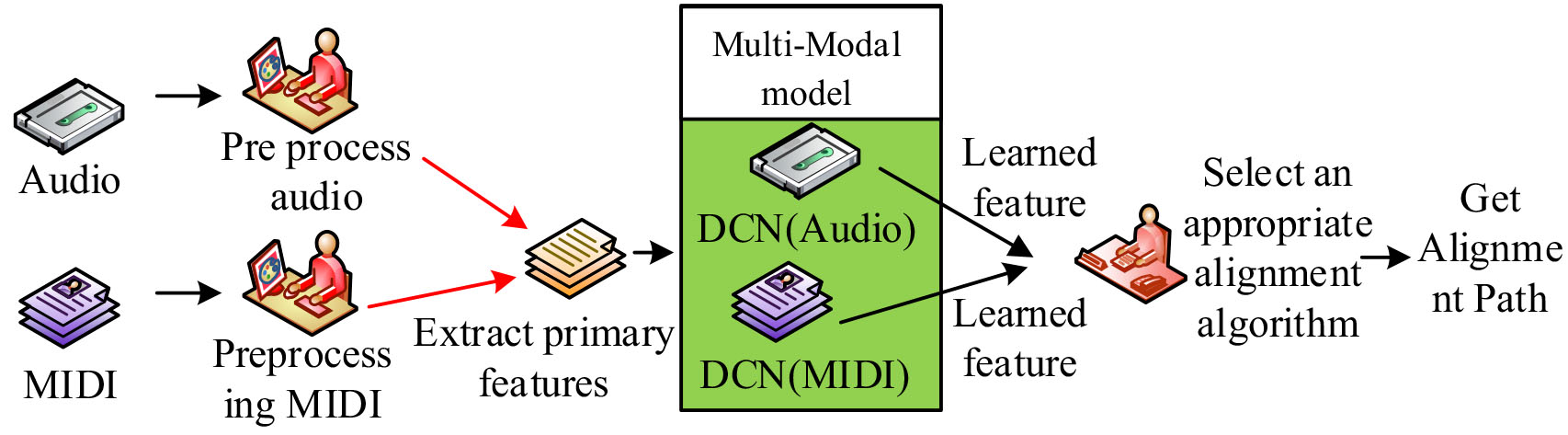

As music data storage becomes increasingly diverse in the era of big data, aligning music works that share the same semantics across different representations is crucial for online music education. To this end, a multi-modal music score alignment model based on deep learning was developed and optimized. Experimental results showed that the Note + DCO feature combination gave the best results for MIDI input (mean value: 13.27 ms), whereas in the feature comparison for audio input the CQT feature performed best (mean value: 12.85 ms). The ResNet-34 network achieved the most effective score alignment, with alignment errors averaging less than one frame. Compared with other algorithms, the proposed algorithm had the lowest mean (9.28 ms), median (5.85 ms), and standard deviation (20.17 ms). In an actual music retrieval task, the Top-1 retrieval accuracy was 10.93%. Overall, the proposed algorithm is significant for score alignment and music retrieval in online music education.
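The statistics reported in the abstract (mean, median, and standard deviation of the alignment error, and Top-1 retrieval accuracy) can be illustrated with a minimal sketch. The function names, the use of absolute onset-time differences as the error, and the population standard deviation are assumptions made for illustration; the abstract does not specify how the paper computes these values.

```python
from statistics import mean, median, pstdev


def alignment_error_stats(predicted_ms, reference_ms):
    """Summarize absolute alignment errors in milliseconds.

    Assumption: the error is the absolute difference between a predicted
    and a reference note onset time; the paper may define it differently.
    """
    if len(predicted_ms) != len(reference_ms):
        raise ValueError("predicted and reference onsets must have equal length")
    errors = [abs(p - r) for p, r in zip(predicted_ms, reference_ms)]
    return {
        "mean": mean(errors),
        "median": median(errors),
        "std": pstdev(errors),  # population standard deviation (assumed)
    }


def top1_accuracy(ranked_results, ground_truth):
    """Fraction of queries whose first-ranked candidate is the correct item."""
    if len(ranked_results) != len(ground_truth):
        raise ValueError("one ranked list is required per query")
    hits = sum(
        1
        for ranked, truth in zip(ranked_results, ground_truth)
        if ranked and ranked[0] == truth
    )
    return hits / len(ground_truth)


if __name__ == "__main__":
    # Hypothetical onset times, not data from the paper.
    stats = alignment_error_stats([100, 210, 290], [100, 200, 300])
    print(stats)
    print(top1_accuracy([["a", "b"], ["c", "a"]], ["a", "a"]))
```

Reporting the median alongside the mean is useful here because alignment errors are typically skewed by a few large outliers, which is consistent with the abstract's standard deviation being larger than its mean.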

Flow diagram of music score alignment algorithm based on multi-modal model


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.