Research Paper:

Bagging Algorithm Based on Possibilistic Data Interpolation for Brain-Computer Interface

Isao Hayashi*, Honoka Irie**, and Shinji Tsuruse***

*Graduate School of Informatics, Kansai University

2-1-1 Ryozenji-cho, Takatsuki, Osaka 569-1095, Japan

**School of Social Information Science, University of Hyogo

8-2-1 Gakuennishi-machi, Nishi-ku, Kobe, Hyogo 651-2197, Japan

***Panasonic Connect Co., Ltd.

8-21-1 Ginza, Chuo-ku, Tokyo 104-0061, Japan

Recently, brain-computer interfaces (BCIs) and brain-machine interfaces have garnered the attention of researchers. Through connections with external devices, computers and machines can be controlled by brain signals measured via near-infrared spectroscopy (NIRS) or electroencephalography (EEG) devices. Herein, we propose a novel bagging algorithm for BCIs that generates interpolation data around misclassified data using a possibilistic function. AdaBoost, a conventional ensemble learning method, increases the weights of misclassified data so that they are incorporated into the next datasets with high probability. In contrast, we generate interpolation data using a membership function centered on the misclassified data and incorporate them into the next datasets simultaneously. The interpolated data are referred to as virtual data herein. Adding the virtual data to the training data provides enough training data to adjust the discriminant boundary more accurately. Because the membership function is defined as a possibility distribution, the method is named the bagging algorithm based on the possibility distribution. Herein, we formulate bagging-type ensemble learning based on the possibility distribution and discuss the usefulness of the proposed method using NIRS data recorded while solving simple calculations.
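The virtual-data step described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not specify the shape of the membership function, the sampling scheme, or how virtual data are labeled. The sketch assumes a triangular membership function, acceptance sampling in proportion to the membership grade, and virtual data that inherit the class of the misclassified point. All function names and parameters (`triangular_membership`, `generate_virtual_data`, `augment_with_virtual_data`, `width`, `n_virtual`) are hypothetical, and only the augmentation of one training set is shown, not the full ensemble.

```python
import random


def triangular_membership(v, center, width):
    """Grade of v in a triangular fuzzy set centered on `center` (assumed shape)."""
    return max(0.0, 1.0 - abs(v - center) / width)


def generate_virtual_data(point, label, width, n_virtual, rng):
    """Draw n_virtual points around one misclassified point.

    A candidate is proposed uniformly inside the support of the membership
    function and accepted with probability equal to its smallest per-axis
    grade, so accepted points concentrate near the misclassified point.
    """
    virtual = []
    while len(virtual) < n_virtual:
        candidate = [rng.uniform(c - width, c + width) for c in point]
        grade = min(
            triangular_membership(v, c, width) for v, c in zip(candidate, point)
        )
        if rng.random() < grade:
            # Assumption: virtual data inherit the class of the source point.
            virtual.append((candidate, label))
    return virtual


def augment_with_virtual_data(train, classify, width=0.5, n_virtual=3, seed=0):
    """Return the training set plus virtual data around each misclassified point."""
    rng = random.Random(seed)
    augmented = list(train)
    for point, label in train:
        if classify(point) != label:
            augmented.extend(
                generate_virtual_data(point, label, width, n_virtual, rng)
            )
    return augmented


if __name__ == "__main__":
    # Toy data: (features, class). The third point is misclassified by the rule below.
    train = [([0.2, 0.1], 0), ([0.9, 0.8], 1), ([0.4, 0.6], 1)]
    weak_rule = lambda p: 1 if p[0] > 0.5 else 0  # hypothetical weak classifier
    for features, cls in augment_with_virtual_data(train, weak_rule):
        print(features, cls)
```

In this sketch, each misclassified point contributes `n_virtual` extra training points in its neighborhood, whereas AdaBoost would instead raise the weight of that point when resampling.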

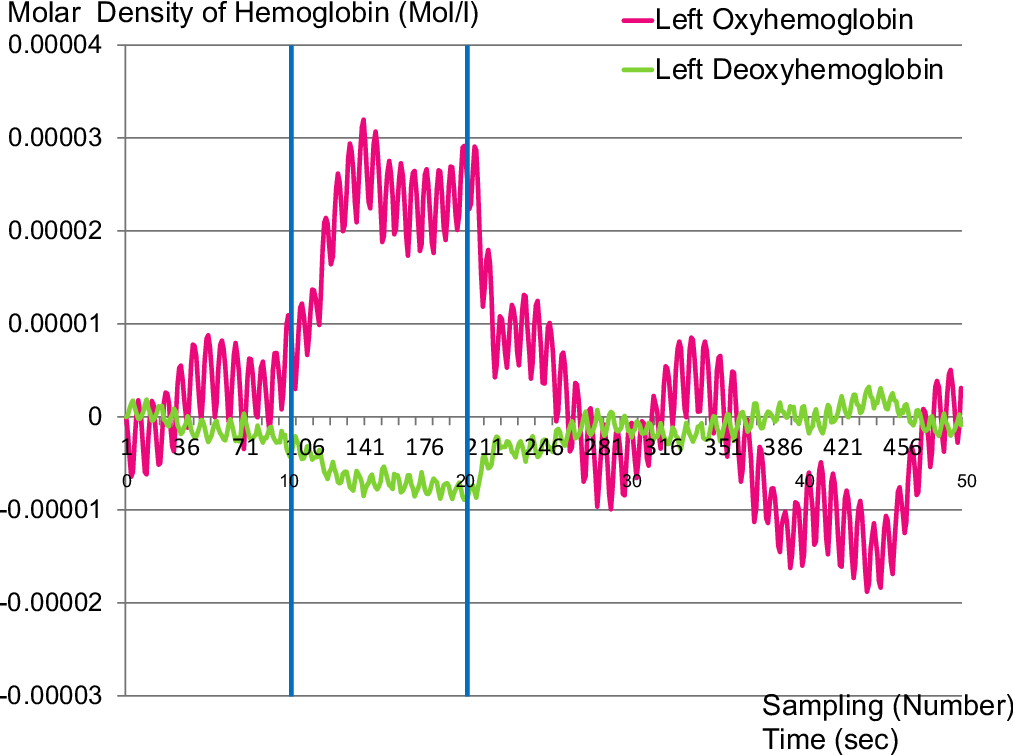

Change in cerebral blood flow at the left-side electrode

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.