Research Paper:

Style Migration Based on the Loss Function of Location Information

Tao Wang*,†, Jie Chen*, and Xianqiang Gao**

*Network Information Center, Xi’an Aeronautical Institute

No.259 West Second Ring Road, Lianhu District, Xi’an, Shaanxi 710077, China

†Corresponding author

**School of Information Engineering, Tarim University

1487 Tarim Avenue, Alar, Xinjiang 843300, China

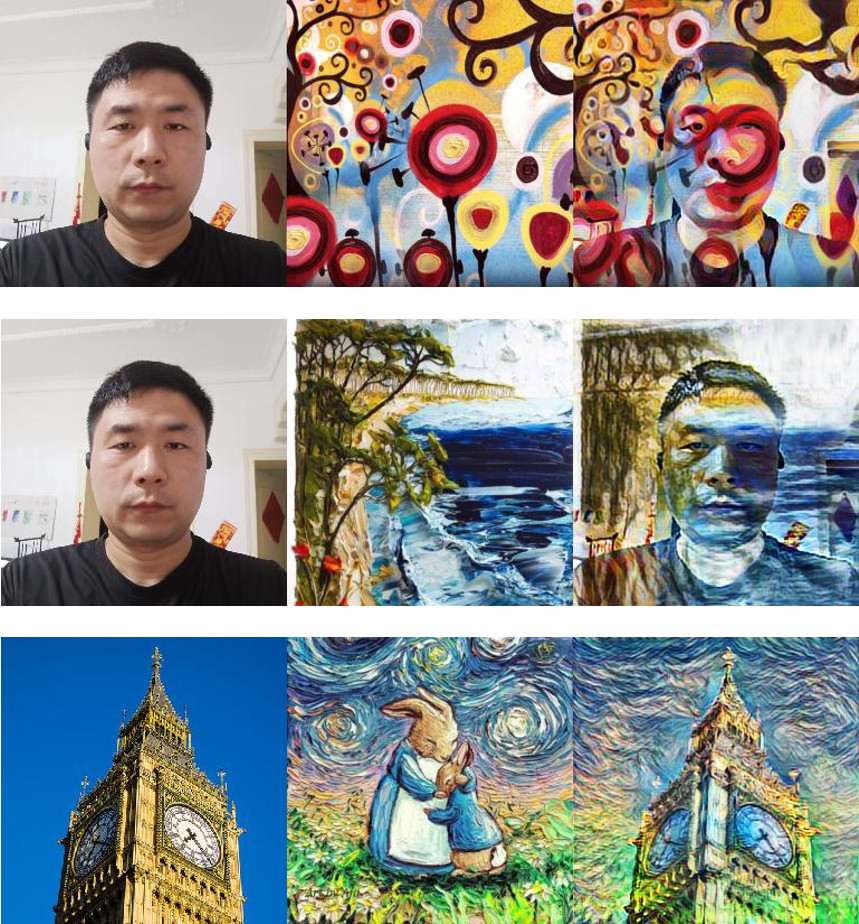

Taking an improved version of Johnson et al.'s style migration network as a starting point, this paper proposes a new loss function based on a Gram matrix that carries position information. The new method adds a chunked (block-wise) Gram matrix with position information and, at the same time, adds the structural similarity (SSIM) between the style image and the resulting image to the style training. In this way, the positional information of the style is transferred to the resulting image. Finally, the resolution of the resulting image is improved with SRGAN. The new model can effectively migrate both the texture structure and the color space of the style image while keeping the content of the content image intact. The simulation results show that the new model outperforms the classical Johnson et al. method, the Google Brain team method, and the CCPL method: the SSIM values between the resulting images and the style images are all greater than 0.3, whereas those of Johnson et al., the Google Brain team, and CCPL are 0.14, 0.11, and 0.12, respectively, which is a clear improvement. Moreover, with deeper training, the new method can raise the similarity between certain resulting images and their style images to more than 0.37256. In addition, training on other arbitrary content images on the basis of the trained model quickly yields satisfactory results.

Style transfer of oil paintings with location information
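The abstract names the ingredients of the new loss but not their exact form. The NumPy sketch below illustrates one plausible reading, assuming that the "chunked Gram matrix with position information" means one Gram matrix per cell of a fixed spatial grid, compared block by block, and that the structural similarity term enters the objective as a (1 − SSIM) penalty. The grid size, the C·H·W normalization, the single-window SSIM, and the names `chunked_gram`, `style_objective`, `grid`, and `ssim_weight` are hypothetical illustrations, not the paper's definitions.

```python
import numpy as np


def gram(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (C, H, W) feature map, normalized by C*H*W."""
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (c * h * w)


def chunked_gram(features: np.ndarray, grid: int = 2) -> np.ndarray:
    """One Gram matrix per spatial block of a grid x grid partition.

    Blocks are kept in row-major order, so the stacked result still records
    where in the image each texture statistic came from. Rows/columns left
    over when H or W is not divisible by `grid` are ignored (assumption).
    """
    c, h, w = features.shape
    bh, bw = h // grid, w // grid
    blocks = [
        gram(features[:, i * bh:(i + 1) * bh, j * bw:(j + 1) * bw])
        for i in range(grid)
        for j in range(grid)
    ]
    return np.stack(blocks)  # shape (grid * grid, C, C)


def chunked_style_loss(gen_feat, style_feat, grid=2) -> float:
    """Sum over blocks of the squared Frobenius distance between Gram matrices."""
    diff = chunked_gram(gen_feat, grid) - chunked_gram(style_feat, grid)
    return float(np.sum(diff ** 2))


def global_ssim(x, y, data_range=1.0) -> float:
    """Simplified single-window SSIM (the standard index averages local windows)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return float(num / den)


def style_objective(gen_feat, style_feat, gen_img, style_img,
                    grid=2, ssim_weight=1.0) -> float:
    """Hypothetical combination: position-aware Gram loss plus an SSIM penalty."""
    return (chunked_style_loss(gen_feat, style_feat, grid)
            + ssim_weight * (1.0 - global_ssim(gen_img, style_img)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feat = rng.random((8, 16, 16))
    flipped = feat[:, ::-1, ::-1]  # same texture values, rearranged in space
    print(np.allclose(gram(feat), gram(flipped)))  # True: global Gram ignores position
    print(chunked_style_loss(feat, flipped) > 0)   # True: block Grams do not
```

The demonstration shows why a block-wise variant can carry position information: a single Gram matrix is unchanged when the pixels of a feature map are spatially rearranged, whereas the stacked per-block Gram matrices change because each block's statistics stay tied to its grid cell. In a real network, the features would come from a pretrained loss network such as VGG, as in Johnson et al., rather than from random arrays.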

- [1] Y. Zhao and D. Xu, “Oil style image generation via fluid simulation,” J. of Software, Vol.17, No.7, pp. 1571-1579, 2006 (in Chinese).

- [2] C.-M. Wang and J.-S. Lee, “Using ILIC algorithm for an impressionist effect and stylized virtual environments,” J. of Visual Languages & Computing, Vol.14, No.3, pp. 255-274, 2003. https://doi.org/10.1016/S1045-926X(03)00018-1

- [3] T. Wang and Q. Zhang, “A texture fusion-based multi-scale anisotropic Vincent van Gogh oil painting rendering method,” Control Engineering of China, Vol.27, No.6, pp. 1018-1024, 2020 (in Chinese). https://doi.org/10.14107/j.cnki.kzgc.20190192

- [4] L. A. Gatys, A. S. Ecker, and M. Bethge, “Image style transfer using convolutional neural networks,” 2016 IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 2414-2423, 2016. https://doi.org/10.1109/CVPR.2016.265

- [5] J. Johnson, A. Alahi, and F.-F. Li, “Perceptual losses for real-time style transfer and super-resolution,” Proc. of the 14th European Conf. on Computer Vision (ECCV 2016), Part 2, pp. 694-711, 2016. https://doi.org/10.1007/978-3-319-46475-6_43

- [6] V. Dumoulin, J. Shlens, and M. Kudlur, “A learned representation for artistic style,” 5th Int. Conf. on Learning Representations (ICLR), 2017.

- [7] Y. Jing et al., “Dynamic instance normalization for arbitrary style transfer,” Proc. of the AAAI Conf. on Artificial Intelligence, Vol.34, No.4, pp. 4369-4376, 2020. https://doi.org/10.1609/aaai.v34i04.5862

- [8] D. Chen et al., “StyleBank: An explicit representation for neural image style transfer,” 2017 IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 2770-2779, 2017. https://doi.org/10.1109/CVPR.2017.296

- [9] X. Xia et al., “Joint bilateral learning for real-time universal photorealistic style transfer,” Proc. of the 16th European Conf. on Computer Vision (ECCV 2020), Part 8, pp. 327-342, 2020. https://doi.org/10.1007/978-3-030-58598-3_20

- [10] S. Liu and T. Zhu, “Structure-guided arbitrary style transfer for artistic image and video,” IEEE Trans. on Multimedia, Vol.24, pp. 1299-1312, 2022. https://doi.org/10.1109/TMM.2021.3063605

- [11] Z. Wu et al., “CCPL: Contrastive coherence preserving loss for versatile style transfer,” Proc. of the 17th European Conf. on Computer Vision (ECCV 2022), Part 16, pp. 189-206, 2022. https://doi.org/10.1007/978-3-031-19787-1_11

- [12] G. Ghiasi et al., “Exploring the structure of a real-time, arbitrary neural artistic stylization network,” Proc. of the 28th British Machine Vision Conf. (BMVC), Article No.114, 2017. https://doi.org/10.5244/C.31.114

- [13] Y. Shen et al., “Image style transfer with clear target edges,” Laser & Optoelectronics Progress, Vol.58, No.12, pp. 241-253, 2021 (in Chinese).

- [14] Y. Tan and F. Zeng, “Image style transfer algorithm based on new style loss function and brightness information,” J. of Optoelectronics - Laser, Vol.32, No.8, pp. 879-887, 2021 (in Chinese). https://doi.org/10.16136/j.joel.2021.08.0030

- [15] Y. Qian and M. Wang, “Method of image style transfer based on new style loss function,” Electronic Measurement Technology, Vol.42, No.4, pp. 70-73, 2019 (in Chinese). https://doi.org/10.19651/j.cnki.emt.1802112

- [16] X. Zhuang, C. Li, and P. Li, “Style transfer based on cross-layer correlation perceptual loss,” Acta Scientiarum Naturalium Universitatis Sunyatseni, Vol.59, No.6, pp. 126-135, 2020 (in Chinese). https://doi.org/10.13471/j.cnki.acta.snus.2019.10.11.2019a079

- [17] F. Shen, S. Yan, and G. Zeng, “Neural style transfer via meta networks,” 2018 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 8061-8069, 2018. https://doi.org/10.1109/CVPR.2018.00841

- [18] Y. Liu et al., “Improved algorithm for fast arbitrary style transfer meta network,” J. of Frontiers of Computer Science and Technology, Vol.14, No.5, pp. 861-869, 2020 (in Chinese).

- [19] H. Yang, Y. Han, and Y. Guo, “Deep learning algorithm for image style transfer based on luminance and color channels,” J. of Chongqing University of Technology (Natural Science), Vol.33, No.7, pp. 145-151+159, 2019 (in Chinese).

- [20] S. Gu et al., “Arbitrary style transfer with deep feature reshuffle,” 2018 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 8222-8231, 2018. https://doi.org/10.1109/CVPR.2018.00858

- [21] Z. Luo, “Design and implementation of image style transfer method based on deep learning,” Master’s thesis, Hunan University, pp. 25-30, 2019 (in Chinese).

- [22] X. Huang and S. Belongie, “Arbitrary style transfer in real-time with adaptive instance normalization,” 2017 IEEE Int. Conf. on Computer Vision (ICCV), pp. 1510-1519, 2017. https://doi.org/10.1109/ICCV.2017.167

- [23] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv:1412.6980, 2014. https://doi.org/10.48550/arXiv.1412.6980

- [24] Y. Qian and M. Wang, “Method of image style transfer based on new style loss function,” Electronic Measurement Technology, Vol.42, No.4, pp. 70-73, 2019 (in Chinese). https://doi.org/10.19651/j.cnki.emt.1802112

- [25] J. Sun and X. Liu, “Local style migration method based on residual neural network,” Laser & Optoelectronics Progress, Vol.57, No.8, pp. 120-127, 2020 (in Chinese).

- [26] Z. Hui, X. Wang, and X. Gao, “Fast and accurate single image super-resolution via information distillation network,” 2018 IEEE/CVF Conf. on Computer Vision and Pattern Recognition, pp. 723-731, 2018. https://doi.org/10.1109/CVPR.2018.00082

- [27] J. Bruna, P. Sprechmann, and Y. LeCun, “Super-resolution with deep convolutional sufficient statistics,” 4th Int. Conf. on Learning Representations (ICLR), 2016.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.