Research Paper:

Basketball Sports Posture Recognition Technology Based on Improved Graph Convolutional Neural Network

Jinmao Tong* and Fei Wang**,†

*College of General Education, Fujian Chuanzheng Communications College

No.80 Shoushan Road, Cangshan District, Fuzhou, Fujian 350007, China

**Ministry of Sports, Xiamen Institute of Technology

No.1251 Sunban South Road, Jimei District, Xiamen, Fujian 361021, China

†Corresponding author

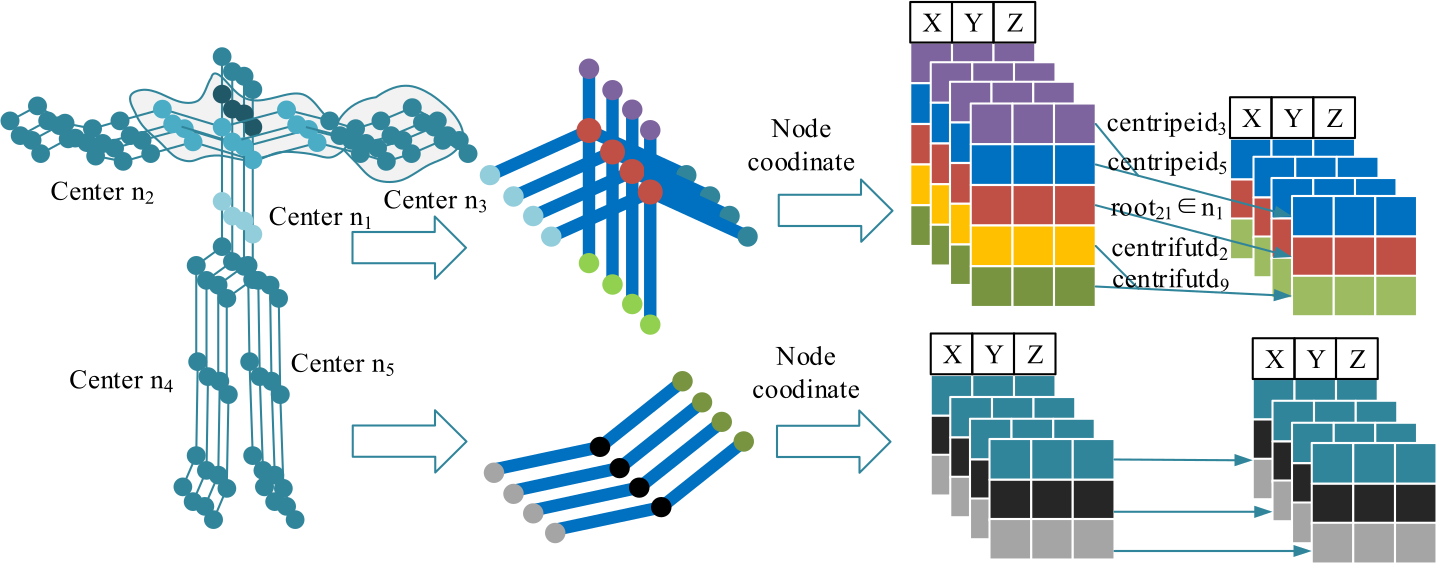

Basketball has developed rapidly in recent years. Analyzing the various moves in basketball can provide technical references for professional players and help referees judge games. Traditional techniques can no longer provide adequate theoretical support for modern basketball players. Therefore, using intelligent methods to recognize human body postures in basketball is a relatively innovative approach. To recognize players' basketball postures more accurately, this study proposes a basketball posture recognition model based on an improved graph convolutional network (GCN), namely a spatial temporal graph convolutional network (ST-GCN) model. This model combines the respective advantages of the GCN and the temporal convolutional network, so it can handle graph-structured data with time-series relationships. The ST-GCN is derived by implementing the convolution operation on the graph structure and building a spatiotemporal graph convolution model for the sequence of a person's body postures. A dataset of basketball technical actions was constructed to verify the effectiveness of the ST-GCN model. The experimental results showed that the ST-GCN model reached a final recognition accuracy of approximately 95.58% for basketball postures, whereas the long short-term memory + multiview re-observation skeleton action recognition (LSTM+MV+AC) model reached about 93.65%.
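The abstract describes ST-GCN as a graph convolution over the joints of each frame combined with a temporal convolution over consecutive frames. The NumPy sketch below illustrates that general spatial-then-temporal idea under loudly stated assumptions: a hypothetical 5-joint skeleton, a single symmetrically normalized adjacency matrix D^{-1/2}(A + I)D^{-1/2} rather than any neighbor-partitioning scheme, random untrained weights, and no batch normalization, residual connections, or the improvements the authors propose. All function and variable names (`normalized_adjacency`, `spatial_graph_conv`, `temporal_conv`, `st_gcn_block`, `classify`, `EDGES`) are hypothetical; this is not the authors' model.

```python
import numpy as np


def normalized_adjacency(num_joints, edges):
    """Return D^{-1/2} (A + I) D^{-1/2} for an undirected skeleton graph."""
    a = np.eye(num_joints)  # self-loops so each joint keeps its own feature
    for i, j in edges:
        a[i, j] = a[j, i] = 1.0  # bones are treated as undirected edges
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return d_inv_sqrt[:, None] * a * d_inv_sqrt[None, :]


def spatial_graph_conv(x, a_hat, w):
    """Graph convolution applied frame by frame.

    x: (T, V, C_in) joint features, a_hat: (V, V), w: (C_in, C_out).
    Computes A_hat @ X_t @ W for every frame t -> (T, V, C_out).
    """
    return np.einsum("uv,tvc,cd->tud", a_hat, x, w)


def temporal_conv(x, kernel):
    """Convolve each joint's feature sequence over K neighboring frames.

    x: (T, V, C_in), kernel: (K, C_in, C_out) with K odd.
    Zero padding on the time axis keeps the output length equal to T.
    """
    k, _, c_out = kernel.shape
    pad = k // 2
    t, v, _ = x.shape
    xp = np.pad(x, ((pad, pad), (0, 0), (0, 0)))  # pad the time axis only
    out = np.zeros((t, v, c_out))
    for offset in range(k):
        out += np.einsum("tvc,cd->tvd", xp[offset:offset + t], kernel[offset])
    return out


def relu(x):
    return np.maximum(x, 0.0)


def st_gcn_block(x, a_hat, w_spatial, w_temporal):
    """One spatial-temporal block: graph conv over joints, then conv over time."""
    h = relu(spatial_graph_conv(x, a_hat, w_spatial))
    return relu(temporal_conv(h, w_temporal))


def classify(x, a_hat, w_spatial, w_temporal, w_fc):
    """Return class scores for one skeleton sequence x of shape (T, V, C_in)."""
    h = st_gcn_block(x, a_hat, w_spatial, w_temporal)
    pooled = h.mean(axis=(0, 1))  # global average over frames and joints
    return pooled @ w_fc


# Hypothetical 5-joint skeleton: 0 head, 1 neck, 2 left hand, 3 right hand, 4 hip.
EDGES = [(0, 1), (1, 2), (1, 3), (1, 4)]
rng = np.random.default_rng(0)
a_hat = normalized_adjacency(5, EDGES)
x = rng.normal(size=(30, 5, 3))  # 30 frames, 5 joints, hypothetical (x, y, confidence)
w_spatial = rng.normal(size=(3, 16)) * 0.1
w_temporal = rng.normal(size=(9, 16, 16)) * 0.1  # temporal kernel spans 9 frames
w_fc = rng.normal(size=(16, 4)) * 0.1  # 4 hypothetical posture classes
scores = classify(x, a_hat, w_spatial, w_temporal, w_fc)
print(scores.shape, int(np.argmax(scores)))
```

Keeping the two steps in separate functions mirrors the factorization described in the abstract: the adjacency matrix mixes only joints within a frame, while the temporal kernel mixes only frames of the same joint. In a real system the weights would be learned from labeled posture sequences, such as the basketball technical action dataset the authors constructed.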

A basketball posture recognition model


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.