Research Paper:

Overcoming Data Limitations in Thai Herb Classification with Data Augmentation and Transfer Learning

Sittiphong Pornudomthap, Ronnagorn Rattanatamma, and Patsorn Sangkloy

Faculty of Science and Technology, Phranakhon Rajabhat University

9 Changwattana Road, Bangkhen, Bangkok 10220, Thailand

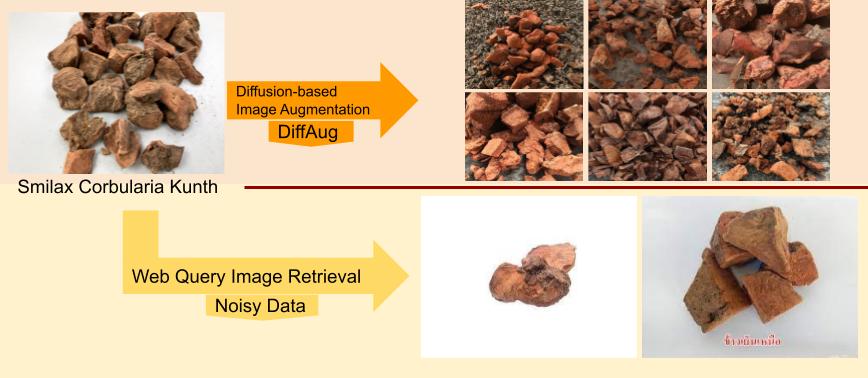

Despite the medicinal significance of traditional Thai herbs, identifying them accurately on digital platforms remains difficult because of the wide diversity among species and the limited scope of existing digital databases. In response, this paper introduces a Thai traditional herb classifier that combines transfer learning, data augmentation strategies, and the inclusion of noisy data. Our contributions are a curated dataset spanning 20 distinct Thai herb categories, a deep learning architecture that combines transfer learning with tailored data augmentation techniques, and an Android application designed for real-world herb recognition scenarios. Preliminary results suggest that the method could substantially improve the digital identification of Thai herbs, with potential benefits for natural medicine and computer-assisted herb recognition.

Example of our data augmentations
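The specific augmentations used are not listed on this page, so the following is only a minimal sketch of what an image augmentation step of this kind might look like. The function names (`augment`, `augment_batch`), the chosen transforms (flip, 90-degree rotation, random crop, brightness jitter), and all parameter values are illustrative assumptions, not the authors' pipeline.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator,
            crop_frac: float = 0.8) -> np.ndarray:
    """Return a randomly transformed copy of an H x W x C uint8 image.

    The transforms here are generic choices (assumed for illustration),
    not the ones used in the paper.
    """
    out = image

    # Random horizontal flip with probability 0.5.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]

    # Random rotation by a multiple of 90 degrees in the image plane.
    k = int(rng.integers(0, 4))
    out = np.rot90(out, k=k, axes=(0, 1))

    # Random crop that keeps crop_frac of each spatial dimension.
    h, w = out.shape[:2]
    ch, cw = max(1, int(h * crop_frac)), max(1, int(w * crop_frac))
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    out = out[top:top + ch, left:left + cw, :]

    # Brightness jitter: scale pixel values, then clip back to [0, 255].
    factor = rng.uniform(0.8, 1.2)
    out = np.clip(out.astype(np.float32) * factor, 0, 255).astype(np.uint8)
    return out


def augment_batch(image: np.ndarray, n: int, seed: int = 0) -> list:
    """Generate n augmented variants of a single image."""
    rng = np.random.default_rng(seed)
    return [augment(image, rng) for _ in range(n)]
```

Calling `augment_batch(image, 5)` on one labeled photo would yield five variants that share its label, which is one common way to enlarge a small training set before fine-tuning a pretrained network.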

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
