Research Paper:

A Deep Learning Approach for Surgical Instruments Detection System in Total Knee Arthroplasty—Automatic Creation of Training Data and Reduction of Training Time—

Ryusei Kasai†

and Kouki Nagamune

Graduate School of Engineering, University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

†Corresponding author

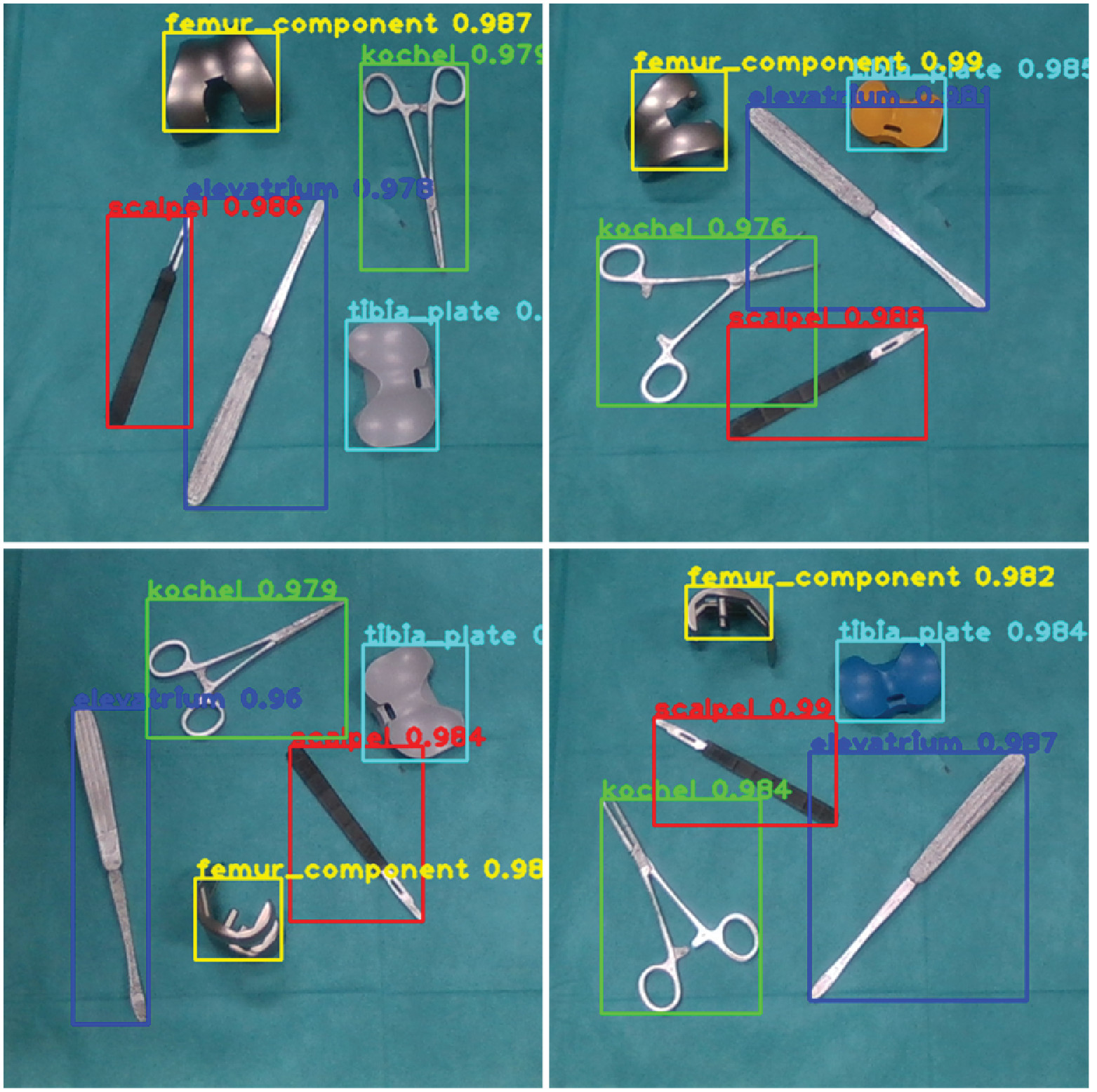

In total knee arthroplasty (TKA), many surgical instruments are used, and many of them are similar in shape and size. As a result, accidents caused by incorrect implant selection have occurred. Furthermore, a worldwide shortage of nurses is expected; this will extend to scrub nurses and increase the burden on each of them. For these reasons, we have developed a surgical instrument detection system for TKA to reduce the burden on scrub nurses and the number of accidents such as implant selection errors. This study also focuses on automating the acquisition of training data, and we develop a method to reduce the additional training time required when the number of detection targets increases. YOLOv5 is used as the object detection method. In experiments, we examine the accuracy of the automatically acquired training data and the accuracy of object detection for surgical instruments. For object detection, several training files are created and compared. The results show that the training data is sufficiently effective and that high accuracy is obtained in object detection. Object detection is performed in several cases, and one of the results shows an IoU of 0.865 and an F-measure of 0.930.
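For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how IoU and F-measure are commonly computed for bounding-box detection. It assumes the standard definitions (IoU as intersection area over union area of two axis-aligned boxes, F-measure as the harmonic mean of precision and recall); the abstract does not state the exact evaluation procedure, and the function names, box format, and sample numbers here are hypothetical illustrations, not values from the study.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes.

    Boxes are (x_min, y_min, x_max, y_max); this format is an assumption.
    """
    # Corners of the overlapping rectangle (may be empty).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so non-overlapping boxes give zero intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f_measure(tp, fp, fn):
    """F-measure (F1) from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical ground-truth and predicted boxes, for illustration only.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
    # Hypothetical detection counts, for illustration only.
    print(f_measure(tp=8, fp=2, fn=2))
```

An IoU close to 1 means the predicted box nearly coincides with the ground-truth box, while an F-measure close to 1 means few missed or spurious detections.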

Surgical instruments detection system in TKA

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
