Research Paper:

Human Motion Capture and Recognition Based on Sparse Inertial Sensor

Huailiang Xia*1, Xiaoyan Zhao*1,*2,†, Yan Chen*2, Tianyao Zhang*2, Yuguo Yin*3, and Zhaohui Zhang*1,*2,*4

*1Shunde Innovation Institute, University of Science and Technology Beijing

Fo Shan 528399, China

*2School of Automation and Electrical Engineering, University of Science and Technology Beijing

30# Xueyuan Road, Haidian District, Beijing 100083, China

*3Shandong Start Measurement and Control Equipment Co., Ltd.

No.600 Xinyi Road, Weifang, Shandong 261101, China

*4Beijing Engineering Research Center of Industrial Spectrum Imaging, University of Science and Technology Beijing

30# Xueyuan Road, Haidian District, Beijing 100083, China

†Corresponding author

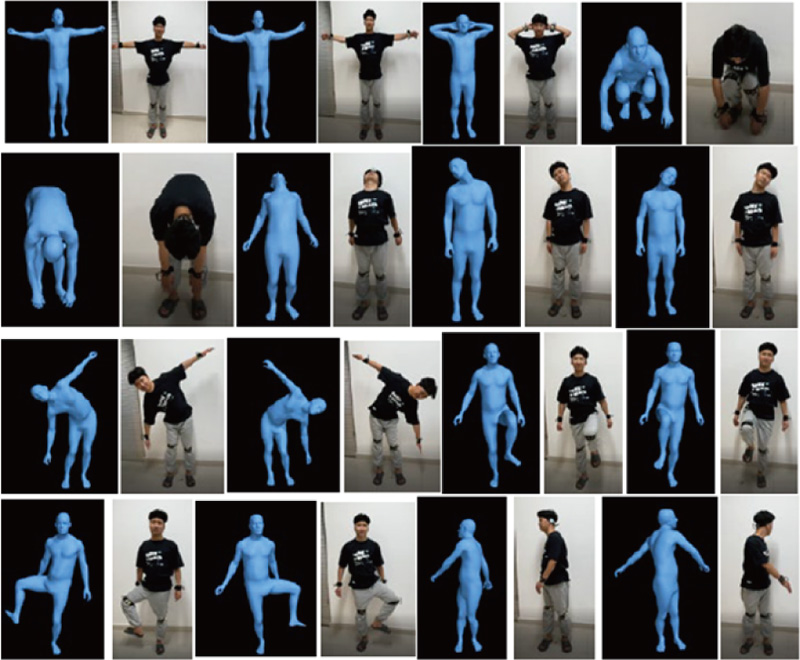

Human motion capture is an emerging, multidisciplinary field that draws on computer graphics, ergonomics, and communication technology, among other disciplines. In this study, a dedicated network platform was established to keep data transmission reliable and stable, and a sink node was configured to receive sensor data over two separate channels. Because the measurement system becomes simpler as the number of sensors decreases, this study focuses on accurately estimating uncertain 3D human movements from a sparse arrangement of wearable inertial sensors, using only six sensors in the system. The method treats motion as a time series in which a sequence of discrete actions makes up the overall movement. Deep learning, specifically recurrent neural networks, was used to refine the regression parameters. Our approach combined historical and present sensor data to forecast future sensor data. These data were merged into a superposed input vector, which was fed into a shallow neural network to estimate human motion. The experimental results demonstrate the viability of this approach: the six sensors could accurately reproduce representative poses. This finding supports the further development and wider adoption of wearable devices for motion capture.
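The data flow described in the abstract (past and present sensor frames, forecast future frames, one superposed input vector, a shallow network) can be illustrated with a minimal sketch. Everything beyond the six-sensor count is an assumption made for illustration: the per-sensor feature size, the window lengths, the hidden width, the pose dimension, and every function name are hypothetical. The forecaster below is a plain linear extrapolation standing in for the recurrent network, and the weights are untrained, so the sketch shows shapes and wiring only, not the authors' implementation.

```python
import math
import random

N_SENSORS = 6              # from the abstract: six inertial sensors
FEATS_PER_SENSOR = 12      # assumption: 9 orientation values + 3 acceleration values
PAST, FUTURE = 4, 2        # assumption: window lengths are not given in the abstract
HIDDEN, POSE_DIM = 32, 72  # assumption: hidden width and pose vector size
FRAME_DIM = N_SENSORS * FEATS_PER_SENSOR


def forecast_future(window, n_future):
    """Placeholder forecaster: linear extrapolation from the last two frames.

    The abstract describes a recurrent network for this step; extrapolation
    is swapped in here only to keep the sketch dependency-free.
    """
    last, prev = window[-1], window[-2]
    return [[l + (k + 1) * (l - p) for l, p in zip(last, prev)]
            for k in range(n_future)]


def superpose(window, n_future):
    """Flatten past frames, the current frame, and forecast frames into one vector."""
    frames = window + forecast_future(window, n_future)
    return [value for frame in frames for value in frame]


def dense(x, weights, biases):
    """Affine layer: weights holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def shallow_net(x, params):
    """Single tanh hidden layer followed by a linear output layer."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(h) for h in dense(x, w1, b1)]
    return dense(hidden, w2, b2)


def random_params(in_dim, hidden_dim, out_dim, rng):
    """Untrained weights, used only to exercise the shapes."""
    w1 = [[rng.gauss(0, 0.01) for _ in range(in_dim)] for _ in range(hidden_dim)]
    w2 = [[rng.gauss(0, 0.01) for _ in range(hidden_dim)] for _ in range(out_dim)]
    return w1, [0.0] * hidden_dim, w2, [0.0] * out_dim


rng = random.Random(0)
# Synthetic stand-in for PAST historical frames plus the current frame.
window = [[rng.gauss(0, 1) for _ in range(FRAME_DIM)] for _ in range(PAST + 1)]
x = superpose(window, FUTURE)
pose = shallow_net(x, random_params(len(x), HIDDEN, POSE_DIM, rng))
print(len(x), len(pose))
```

With these assumed sizes, the superposed vector holds (4 + 1 + 2) frames of 72 values each, and the network maps it to a 72-value pose vector. In a real system the placeholder forecaster would be replaced by the trained recurrent model and the weights would come from training on recorded motion data.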

Real-time human motion capture

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.