Research Paper:

Application of Convolutional Neural Network to Gripping Comfort Evaluation Using Gripping Posture Image

Kazuki Hokari*1,†, Makoto Ikarashi*2, Jonas A. Pramudita*3, Kazuya Okada*4, Masato Ito*4, and Yuji Tanabe*5

*1Department of Mechanical and Electrical Engineering, School of Engineering, Nippon Bunri University

1727 Ichigi, Oita, Oita 870-0397, Japan

†Corresponding author

*2Graduate School of Science and Technology, Niigata University

8050 Ikarashi 2-no-cho, Nishi-ku, Niigata, Niigata 950-2181, Japan

*3Department of Mechanical Engineering, College of Engineering, Nihon University

1 Nakagawara, Tokusada, Tamuramachi, Koriyama, Fukushima 963-8642, Japan

*4Product Analysis Center, Panasonic Holdings Corporation

1048 Kadoma, Kadoma, Osaka 571-8686, Japan

*5Management Strategy Section, President Office, Niigata University

8050 Ikarashi 2-no-cho, Nishi-ku, Niigata-shi, Niigata 950-2181, Japan

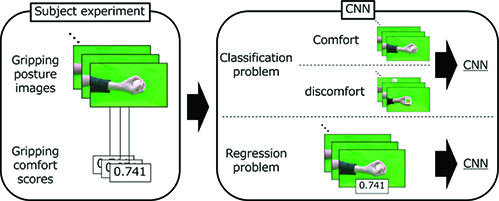

Gripping comfort evaluation is crucial for designing products with good gripping comfort. In this study, a novel evaluation method using gripping posture images was constructed based on a convolutional neural network (CNN). A human subject experiment was conducted to acquire gripping comfort scores and gripping posture images while subjects gripped seven simple-shaped objects and eleven manufactured products. The scores and images were used as the training and validation sets for the CNN. First, a classification problem was formulated to classify gripping posture images as comfort or discomfort. The resulting accuracies were 91.4% for the simple-shaped objects and 76.2% for the manufactured products. Second, a regression problem was formulated to predict gripping comfort scores from gripping posture images captured while subjects gripped a cylindrical object. Images taken from the radial and dorsal sides of the hand were used to investigate the effect of the imaging direction on prediction accuracy. The resulting mean absolute errors (MAE) of the predicted gripping comfort scores were 0.132 for the radial side and 0.157 for the dorsal side. For both problems, the results indicate that these evaluation methods are useful for evaluating gripping comfort. The methods can help designers evaluate products and enhance their gripping comfort.
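The abstract reports two metrics: classification accuracy for the comfort/discomfort task and mean absolute error (MAE) for the score-regression task. As a minimal sketch, assuming each image carries either a binary label or a numeric score derived from the subject's rating, the two metrics could be computed as below. The function names and toy inputs are hypothetical illustrations, not the study's code or data.

```python
from typing import Sequence


def classification_accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of images whose predicted label ("comfort" or "discomfort")
    matches the reference label. Hypothetical helper for illustration."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def mean_absolute_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Average absolute difference between predicted and rated gripping
    comfort scores. Hypothetical helper for illustration."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


if __name__ == "__main__":
    # Toy values on an arbitrary scale; NOT data from the study.
    labels_true = ["comfort", "comfort", "discomfort", "discomfort"]
    labels_pred = ["comfort", "discomfort", "discomfort", "discomfort"]
    print(classification_accuracy(labels_pred, labels_true))  # 0.75

    scores_true = [0.50, -0.25, 1.00]
    scores_pred = [0.40, -0.10, 0.90]
    print(round(mean_absolute_error(scores_pred, scores_true), 3))  # 0.117
```

Higher accuracy and lower MAE are better. For example, the reported MAE of 0.132 for the radial side versus 0.157 for the dorsal side means that, on average, predictions from radial-side images were closer to the subjects' scores.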

Gripping comfort evaluation based on CNN

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
